History and Importance of Backpropagation

Backpropagation originated in the 1960s and 1970s, with early work by Henry J. Kelley and further development by Paul Werbos in his 1974 PhD thesis. The method gained widespread recognition after David E. Rumelhart, Geoffrey E. Hinton, and Ronald J. Williams published their seminal 1986 paper, "Learning Representations by Back-Propagating Errors."

The 1986 paper demonstrated that backpropagation could train multi-layer neural networks by efficiently computing the gradient of the error with respect to every weight, and that hidden layers trained this way learn useful internal representations. This made training networks with hidden layers practical. With those gradients, a network can learn from large datasets by systematically adjusting its weights and biases to reduce prediction error.

The algorithm passes the error backward through the network, layer by layer, to work out how much each weight contributed to it. It begins by calculating the error at the output layer, then propagates that error back through the earlier layers. The resulting gradients are used to adjust the weights, reducing the error in subsequent predictions.

Key Applications of Backpropagation:

- Computer vision

- Natural language processing

- Advanced game playing

Backpropagation itself only computes gradients; it is paired with gradient descent or one of its variants, which uses those gradients to optimize the weights. Together they made the applications above practical, enabling machines to perform tasks previously thought to be beyond computers' capabilities.

As a key component of many AI systems, backpropagation illustrates the significant advancement from theoretical exploration in the 1960s to its practical, widespread application today.

Mathematics Behind Backpropagation

Backpropagation involves a series of steps beginning with the forward pass. Inputs are fed into the neural network, propagating through various layers via weighted sums and activation functions to produce an output. This output is compared to the actual target using a cost function.

The cost function, often denoted as C, measures the discrepancy between the network's prediction and the actual target values. Common cost functions include:

- Mean Squared Error (MSE) for regression tasks

- Cross-Entropy for classification tasks
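The two cost functions listed above can be sketched in a few lines of NumPy. This is a minimal illustration, not a library API: the function names, the `eps` clipping constant, and the sample values are assumptions made for the example, and the cross-entropy shown is the binary (0/1 label) form.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return np.mean((y_true - y_pred) ** 2)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy for 0/1 labels; eps keeps log() away from zero."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))

# Illustrative targets and predictions (made up for this example)
y_true = np.array([1.0, 0.0, 1.0])
y_pred = np.array([0.9, 0.2, 0.6])

mse = mean_squared_error(y_true, y_pred)
bce = binary_cross_entropy(y_true, y_pred)
print(f"MSE: {mse:.4f}, cross-entropy: {bce:.4f}")
```

Both functions return a single number that shrinks as predictions approach the targets, which is the quantity gradient descent tries to reduce.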

Gradient descent is used to minimize the cost function. It requires the gradient of the cost function with respect to each weight in the network, which backpropagation computes by propagating the error backward. Each weight is then moved a small step, scaled by the learning rate, in the direction opposite to its gradient.
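To make the update rule concrete, here is a small sketch of gradient descent on a one-dimensional toy cost. The function `gradient_descent`, the cost C(w) = (w - 3)^2, and the step settings are all assumptions chosen for illustration; a real network applies the same step to every weight at once.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w

# Toy cost C(w) = (w - 3)^2 has gradient 2 * (w - 3) and its minimum at w = 3.
w_final = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_final)
```

Each step shrinks the distance to the minimum by a constant factor here, so `w_final` ends up very close to 3.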

Mathematically, this process is based on the chain rule from calculus. For any weight w and cost function C, the derivative ∂C/∂w is expressed as a product of derivatives along the path from the output layer back to the specific weight w.
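For a single sigmoid neuron the product of derivatives can be written out and checked numerically. The sketch below assumes a squared-error cost C = 0.5 (a - y)^2 with a = σ(wx + b); the numeric values are arbitrary, and the finite-difference comparison is only there as a sanity check on the chain-rule result.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(w, b, x, y):
    """C = 0.5 * (sigmoid(w*x + b) - y)^2 for a single neuron."""
    return 0.5 * (sigmoid(w * x + b) - y) ** 2

# Arbitrary example values
w, b, x, y = 0.5, -0.2, 1.5, 1.0

# Chain rule: dC/dw = dC/da * da/dz * dz/dw
z = w * x + b
a = sigmoid(z)
dC_da = a - y
da_dz = a * (1.0 - a)
dz_dw = x
analytic = dC_da * da_dz * dz_dw

# Central finite difference as an independent check
h = 1e-6
numeric = (cost(w + h, b, x, y) - cost(w - h, b, x, y)) / (2 * h)
print(analytic, numeric)
```

The two printed values should agree to several decimal places; in a multi-layer network the same product simply gains one factor per layer on the path back to the weight.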

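The error at each node, often denoted δ, is derived using the chain rule. Here $z^l$ is the vector of weighted inputs to layer $l$, $a^l = \sigma(z^l)$ is its activation, $L$ is the output layer, and $\odot$ denotes the element-wise product. For the output layer, the error $\delta^L$ is computed as:

$$\delta^L = \frac{\partial C}{\partial a^L} \odot \sigma'(z^L)$$

For the error at a neuron in a hidden layer $l$, the formula propagates backward using:

$$\delta^l = \left( (w^{l+1})^T \delta^{l+1} \right) \odot \sigma'(z^l)$$

To update the weights, the gradients ∂C/∂w and ∂C/∂b are used:

$$\frac{\partial C}{\partial w^l_{jk}} = a^{l-1}_k \, \delta^l_j, \qquad \frac{\partial C}{\partial b^l_j} = \delta^l_j$$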
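The equations above translate almost line for line into NumPy. The following is a sketch under stated assumptions: a two-layer sigmoid network, a squared-error cost C = 0.5 Σ (a − y)², column-vector activations with weight matrices of shape (outputs, inputs), and random values from a fixed seed. The finite-difference check at the end is an illustrative sanity test, not part of backpropagation itself.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 1))                      # input column vector (a^0)
y = np.array([[1.0], [0.0]])                     # target
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=(4, 1))
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=(2, 1))

def forward(W1, b1, W2, b2):
    z1 = W1 @ x + b1
    a1 = sigmoid(z1)
    z2 = W2 @ a1 + b2
    a2 = sigmoid(z2)
    return z1, a1, z2, a2

def cost(W1, b1, W2, b2):
    return 0.5 * np.sum((forward(W1, b1, W2, b2)[3] - y) ** 2)

z1, a1, z2, a2 = forward(W1, b1, W2, b2)
delta2 = (a2 - y) * sigmoid_prime(z2)            # output-layer error
delta1 = (W2.T @ delta2) * sigmoid_prime(z1)     # hidden-layer error
grad_W2, grad_b2 = delta2 @ a1.T, delta2         # dC/dw_jk = a_k^(l-1) * delta_j^l
grad_W1, grad_b1 = delta1 @ x.T, delta1          # dC/db_j = delta_j^l

# Finite-difference check on one first-layer weight
h = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[2, 1] += h
W1_minus[2, 1] -= h
numeric = (cost(W1_plus, b1, W2, b2) - cost(W1_minus, b1, W2, b2)) / (2 * h)
print(grad_W1[2, 1], numeric)
```

The outer products `delta @ a.T` compute every entry of the weight gradient at once, which is why one backward pass is enough for all weights in a layer.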

Backpropagation combines these steps to adjust each weight and bias incrementally, reducing the overall error with each iteration through the training data. This process is repeated for numerous epochs until the network achieves a satisfactory level of accuracy.

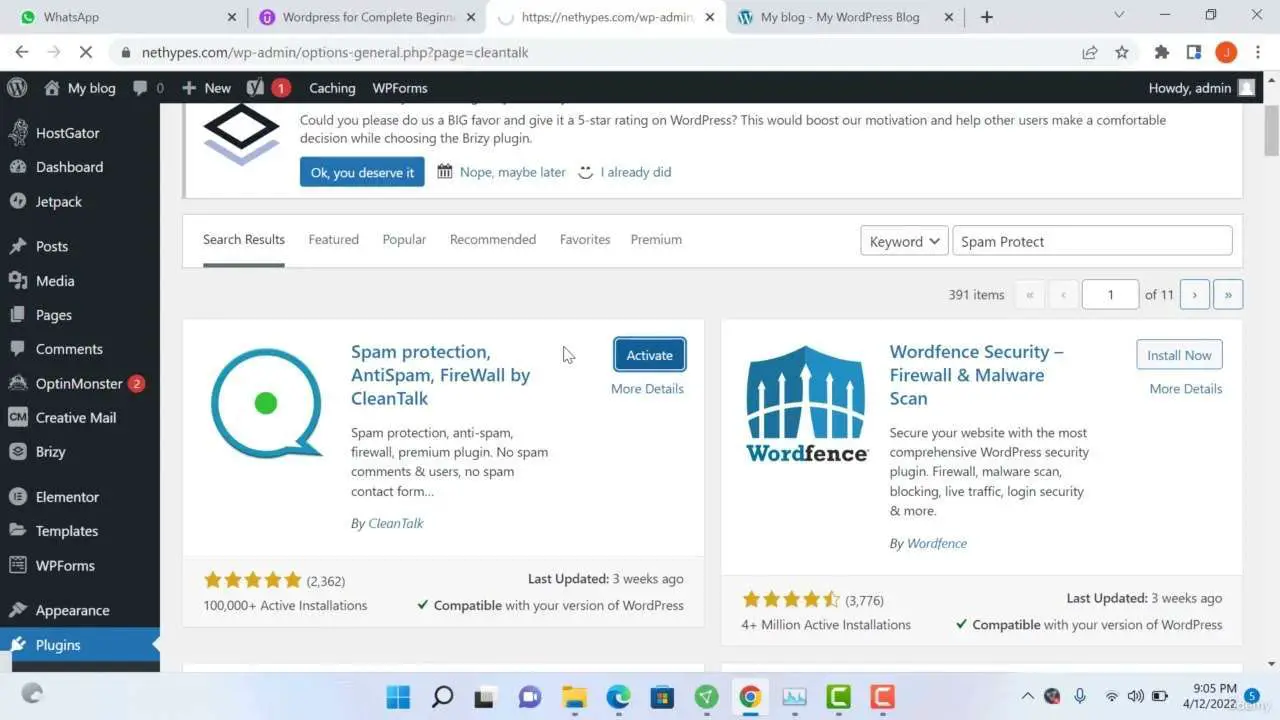

Practical Implementation of Backpropagation

Here's a practical implementation of backpropagation in Python using NumPy:

    import numpy as np


    class NeuralNetwork:
        def __init__(self, layers):
            # layers lists the number of units per layer, e.g. [2, 4, 1]
            self.num_layers = len(layers)
            self.layers = layers
            self.weights = []
            self.biases = []
            for i in range(self.num_layers - 1):
                # weights[i] maps layer i (rows) to layer i + 1 (columns)
                self.weights.append(np.random.randn(layers[i], layers[i+1]))
                self.biases.append(np.random.randn(1, layers[i+1]))

        def sigmoid(self, x):
            return 1 / (1 + np.exp(-x))

        def sigmoid_derivative(self, x):
            return self.sigmoid(x) * (1 - self.sigmoid(x))

        def feedforward(self, x):
            # Cache activations (a) and weighted inputs (z) for the backward pass
            self.a = [x]
            self.z = []
            for i in range(self.num_layers - 1):
                z = np.dot(self.a[-1], self.weights[i]) + self.biases[i]
                self.z.append(z)
                a = self.sigmoid(z)
                self.a.append(a)
            return self.a[-1]

        def backpropagation(self, x, y, learning_rate):
            m = x.shape[0]  # number of training examples in the batch
            delta_w = [np.zeros(w.shape) for w in self.weights]
            delta_b = [np.zeros(b.shape) for b in self.biases]

            # Forward pass fills self.a and self.z
            self.feedforward(x)

            # Output-layer error for a squared-error cost
            delta = (self.a[-1] - y) * self.sigmoid_derivative(self.z[-1])
            delta_w[-1] = np.dot(self.a[-2].T, delta) / m
            delta_b[-1] = np.sum(delta, axis=0, keepdims=True) / m

            # Propagate the error backward through the hidden layers
            for l in range(2, self.num_layers):
                z = self.z[-l]
                sp = self.sigmoid_derivative(z)
                delta = np.dot(delta, self.weights[-l+1].T) * sp
                delta_w[-l] = np.dot(self.a[-l-1].T, delta) / m
                delta_b[-l] = np.sum(delta, axis=0, keepdims=True) / m

            # Gradient descent step
            for i in range(self.num_layers - 1):
                self.weights[i] -= learning_rate * delta_w[i]
                self.biases[i] -= learning_rate * delta_b[i]

        def train(self, X, y, epochs, learning_rate):
            for epoch in range(epochs):
                self.backpropagation(X, y, learning_rate)
                if epoch % 1000 == 0:
                    loss = np.mean(np.square(y - self.feedforward(X)))
                    print(f"Epoch {epoch}, Loss: {loss}")


    # Sample data (XOR)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
    y = np.array([[0], [1], [1], [0]])

    # Create and train neural network
    nn = NeuralNetwork([2, 4, 1])
    nn.train(X, y, epochs=10000, learning_rate=0.1)

    # Test the trained model
    output = nn.feedforward(X)
    print("Outputs after training:")
    print(output)

This implementation demonstrates:

- Initialization of the neural network with random weights and biases.

- Forward pass through the network.

- Backward pass to compute gradients.

- Weight and bias updates to reduce loss.

The process is repeated over multiple epochs to improve the network's accuracy. This example serves as a foundation for understanding more complex neural networks and deep learning models.

"Backpropagation is the key algorithm that makes training deep models computationally tractable." – Ian Goodfellow, Deep Learning Pioneer [1]

Advantages and Challenges of Using Backpropagation

Backpropagation offers several key benefits for training neural networks:

- Efficiency: Computes the gradient with respect to every weight in one forward and one backward pass through the network, rather than requiring a separate pass per weight.

- Simplicity: Applies the chain rule from calculus, enabling easy implementation even in complex architectures.

- Flexibility: Adapts to various network types, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

- Scalability: Efficiently handles increasing complexity as neural networks grow in size.

However, backpropagation faces challenges:

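- Vanishing gradient problem: Gradients shrink as they are multiplied back through many layers and can become extremely small in deep networks, slowing or halting learning in the early layers.

- Exploding gradient problem: Gradients can instead grow very large as they are propagated back, causing extreme, destabilizing updates to weights and biases.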

- Computational intensity: Particularly for very deep networks, can be a barrier for those without access to powerful GPUs or specialized hardware.
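The vanishing gradient problem can be illustrated with simple arithmetic. The sketch below assumes sigmoid activations and ignores the weights (treating them as having magnitude 1), so it shows only the best-case contribution of the activation derivatives; the chosen depths are arbitrary.

```python
import numpy as np

def sigmoid_prime(z):
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)

# sigmoid'(z) peaks at z = 0 with value 0.25, so this is the best case per layer.
max_slope = sigmoid_prime(0.0)

# Upper bound on the activation-derivative factor after passing back through n layers
# (weights are ignored here, i.e. treated as having magnitude 1).
depths = [1, 5, 10, 20]
factors = [max_slope ** n for n in depths]
for n, f in zip(depths, factors):
    print(f"{n:2d} layers: factor <= {f:.3e}")
```

Because each sigmoid layer multiplies the backward signal by at most 0.25, the bound falls geometrically with depth, which is one reason early layers in deep sigmoid networks learn slowly.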

Various techniques address these drawbacks:

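- Adaptive optimization algorithms like Adam or RMSprop rescale each parameter's update by its recent gradient magnitudes, which can partly compensate for small gradients, while gradient clipping is a common remedy for exploding gradients.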

- Architectural innovations such as Batch Normalization and Residual Networks (ResNets) tackle some limitations of traditional backpropagation, maintaining healthy gradient flows even in deeper networks.
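As an illustration of how an adaptive optimizer rescales updates, here is a sketch of a single Adam step written from the standard published update rule. The function name `adam_step`, the example gradients, and the default hyperparameters shown are assumptions for this example rather than any particular library's API.

```python
import numpy as np

def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; returns the new parameter and moment estimates."""
    m = beta1 * m + (1 - beta1) * grad           # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2      # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                 # bias correction for early steps
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v

w = np.array([1.0, 1.0])
tiny_grad = np.array([1e-6, -1e-3])              # very different gradient scales
w_new, m, v = adam_step(w, tiny_grad, m=np.zeros(2), v=np.zeros(2), t=1)
step = w - w_new
print(step)
```

On this first step both parameters move by roughly the learning rate even though their gradients differ by three orders of magnitude. That rescaling helps when gradients are small but nonzero; it does not restore gradient information that has been lost entirely, which is where architectural changes come in.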

Backpropagation in Modern Neural Networks

Backpropagation remains fundamental for training diverse and complex architectures in contemporary neural networks. It plays a crucial role in breakthrough technologies such as:

- Transformers: Essential for training the multi-head self-attention mechanism, allowing models to adjust millions or even billions of parameters for tasks like translation and summarization.

- Generative Adversarial Networks (GANs): Used to train both the generator and the discriminator, with the generator's gradients obtained by backpropagating through the discriminator, enabling the production of highly realistic data samples.

- Autoencoders: Relied upon to learn efficient data representations, facilitating tasks such as dimensionality reduction and denoising.

- Deep Reinforcement Learning: Integral to training the neural networks that decision-making agents use to choose actions and estimate their value.

Backpropagation's ability to handle numerous parameters and efficiently compute gradients makes it indispensable for training sophisticated models. It continues to support scalability and accuracy in various AI applications, driving progress and setting the stage for future innovations.

Biologically Plausible Learning Rules: Equilibrium Propagation

Equilibrium propagation is an emerging solution to address the biological implausibility of backpropagation. It operates on energy minimization principles, using a single computation type for both learning phases, avoiding the need for specialized circuits.

The process involves two phases:

- Free phase: The network settles into a stable state when presented with input data.

- Weakly clamped phase: Outputs are nudged towards target values, causing network readjustment.

Mathematically, synaptic weight adjustment in equilibrium propagation is based on local energy function perturbations. The weight update rule can be expressed as:

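$$\Delta W_{ij} \propto \frac{1}{\beta} \left( \rho(u_i^\beta)\,\rho(u_j^\beta) - \rho(u_i^0)\,\rho(u_j^0) \right)$$

Here β is the clamping factor, ρ is the neuron activation function, and $u_i^0$, $u_j^0$ and $u_i^\beta$, $u_j^\beta$ are the states of neurons i and j at the end of the free phase and the weakly clamped phase, respectively.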
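Given the neuron states from the two phases, the update rule above reduces to a contrast between two outer products. The sketch below is only that final step: it assumes a hard-sigmoid ρ, no self-connections, and hand-picked state vectors standing in for the fixed points, since simulating the network dynamics that produce those states is outside its scope. The function names are hypothetical.

```python
import numpy as np

def rho(u):
    """Hard-sigmoid activation, assumed here for illustration."""
    return np.clip(u, 0.0, 1.0)

def eqprop_weight_update(u_free, u_clamped, beta):
    """Contrast of pairwise activity products between the two phases, scaled by 1/beta."""
    r_free, r_clamped = rho(u_free), rho(u_clamped)
    delta_W = (np.outer(r_clamped, r_clamped) - np.outer(r_free, r_free)) / beta
    np.fill_diagonal(delta_W, 0.0)   # assumption: no self-connections
    return delta_W

# Hypothetical neuron states at the two fixed points (not produced by a real simulation)
u_free = np.array([0.2, 0.7, 0.4])
u_clamped = np.array([0.25, 0.72, 0.5])
delta_W = eqprop_weight_update(u_free, u_clamped, beta=0.5)
print(delta_W)
```

Each entry depends only on the activity of the two neurons it connects, which is what makes the rule local. The resulting matrix is symmetric because the rule treats i and j identically, and it is zero when the clamped phase leaves the states unchanged.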

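A notable advantage of equilibrium propagation is that it does not need a separate circuit to carry error signals backward: the same dynamics run in both phases, and each weight update depends only on the activity of the two neurons it connects. The original formulation does, however, assume symmetric connections between neurons, so it does not by itself remove the weight-symmetry criticism raised against backpropagation's biological plausibility. By simplifying the learning mechanism and using energy-based methods, this framework offers an alternative that could potentially lead to more efficient and resilient machine learning models.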

Backpropagation remains vital for efficiently training neural networks, enabling complex models to learn from vast datasets and underscoring its lasting importance in artificial intelligence. As research continues, it's likely that new variations and improvements on backpropagation will emerge, further enhancing its effectiveness and addressing its current limitations.