Understanding Object Detection

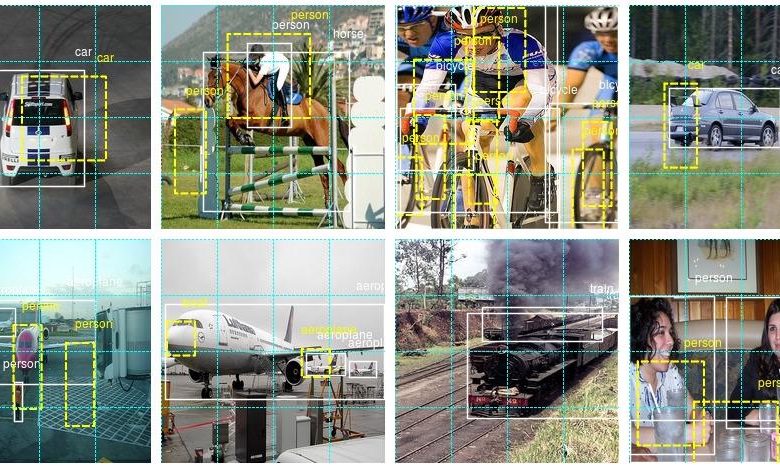

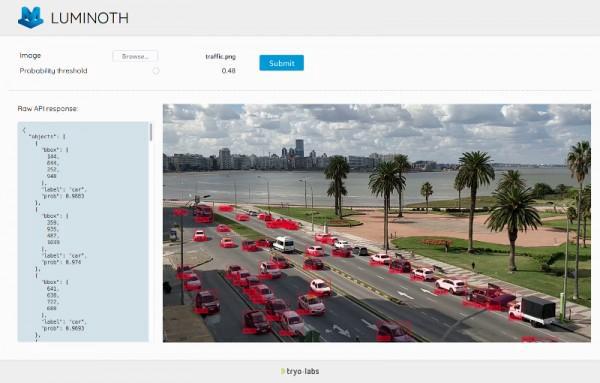

Object detection is the process of identifying and locating objects within images or videos, using models trained on large datasets to recognize patterns and features. The model outputs the objects it finds in the input image, each marked with a bounding box and a label. These boxes enclose the detected objects, whether cars, people, or animals.

Bounding boxes provide a clear-cut outline of the detected object, and the labels attached to these boxes indicate the type of object. For example, an image of a crowded street might have boxes around cars, pedestrians, and bicycles with respective labels, helping understand the placement and types of objects in the image.
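As a rough illustration of what a detector's output looks like, the sketch below stores each detection as a label plus box corners. The `Detection` class, the `score` field, and the sample values are hypothetical and chosen only for this example; real libraries use their own formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected object: a class label and an axis-aligned bounding box."""
    label: str
    score: float  # assumed confidence in [0, 1]; not every format includes it
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area(self) -> float:
        # Width times height, clamped so malformed boxes give zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


# Hypothetical output for a street scene (pixel coordinates are made up).
detections = [
    Detection("car", 0.92, 40, 120, 260, 300),
    Detection("pedestrian", 0.81, 300, 90, 360, 280),
    Detection("bicycle", 0.67, 380, 150, 470, 290),
]


def labels_in_image(dets, min_score=0.5):
    """Return the distinct labels whose score reaches min_score, sorted."""
    return sorted({d.label for d in dets if d.score >= min_score})


print(labels_in_image(detections))
```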

Object detection differs from some related tasks in computer vision:

- Image classification involves predicting the class of one primary object in an image without providing the object's location.

- Object localization pinpoints the location of a single main object, typically with one bounding box, rather than finding and labeling every object in the scene.

- Segmentation, often in the form of semantic segmentation, assigns a class label to individual pixels, producing pixel-level regions rather than the bounding boxes used in object detection.

Various models are used in object detection, each designed for specific tasks and typically trained with datasets like COCO (Common Objects in Context), which provides hundreds of thousands of annotated images. Deep learning models for object detection commonly rely on convolutional neural networks (CNNs), which are loosely inspired by biological neural networks and are organized into input, hidden, and output layers. CNNs learn features directly from training data, reducing the need for manual feature engineering and generally yielding better accuracy than hand-designed pipelines.

There are two primary types of object detectors: one-stage and two-stage detectors. Two-stage detectors, like the region-based convolutional neural network (R-CNN), divide the detection task into region proposal and classification. R-CNN employs selective search to propose regions and applies CNN on each region to extract features. Fast R-CNN improved on this by sharing convolutional computations across proposals and introducing a region of interest (RoI) pooling layer. Faster R-CNN introduced a region proposal network (RPN), allowing end-to-end training and enhancing accuracy.

One-stage detectors like YOLO (You Only Look Once) and SSD (Single Shot MultiBox Detector) simplify the detection pipeline. YOLO divides the image into a grid, predicting bounding boxes and class probabilities for each cell, allowing real-time detection. SSD detects objects in one pass, predicting multiple bounding boxes and associated class scores using feature maps from different network layers, which helps it detect objects of varying scales. RetinaNet, another one-stage detector, introduced the focal loss function to address the imbalance between the many easy background examples and the comparatively few object examples.
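To make the focal loss idea concrete, here is a minimal sketch of the binary form, FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t). The function name is an assumption for this example, and the defaults gamma = 2 and alpha = 0.25 are the values commonly quoted for RetinaNet; treat them as starting points rather than fixed choices.

```python
import math


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for one prediction.

    p: predicted probability of the positive (object) class.
    y: ground-truth label, 1 for object and 0 for background.
    """
    p_t = p if y == 1 else 1.0 - p              # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class balancing weight
    p_t = min(max(p_t, 1e-12), 1.0)             # avoid log(0)
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = focal_loss(0.9, 1)  # confident and correct: heavily down-weighted
hard = focal_loss(0.1, 1)  # confident and wrong: keeps most of its loss
print(easy < hard)
```

With gamma set to 0 the modulating factor disappears and the expression reduces to alpha-weighted cross-entropy, which is a convenient sanity check.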

Transformers have recently emerged as influential tools in object detection, using self-attention mechanisms to model global relationships across an image. The vision transformer (ViT) splits images into patches and processes the resulting patch sequence with a transformer, capturing long-range patterns that support high detection accuracy. Detection transformer (DETR) treats object detection as a direct set prediction problem, eliminating the need for handcrafted components like anchor boxes and using a bipartite matching loss so that each ground truth object is matched to exactly one predicted bounding box.
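The patch-splitting step can be sketched in a few lines. This is only the tokenization idea, assuming a single-channel image stored as a list of rows; the helper name is hypothetical, and a real ViT would also project each patch to an embedding and add position information.

```python
def split_into_patches(image, patch_size):
    """Split a 2D image (list of rows) into flattened square patches.

    Patches are returned in row-major order, each as a flat list of pixels.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ])
    return patches


# A 4x4 toy image with pixel values 0..15, cut into four 2x2 patches.
toy_image = [[4 * r + c for c in range(4)] for r in range(4)]
patches = split_into_patches(toy_image, 2)
print(len(patches), patches[0])
```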

Evaluating object detection models involves metrics like intersection over union (IoU) and average precision (AP). IoU measures the overlap between predicted and actual bounding boxes, while AP calculates the area under the precision-recall curve for each class, with the mean of these values (mAP) indicating overall model performance.
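IoU is simple enough to compute by hand. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples, which is one common convention but not the only one.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap width and height, clamped at zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
print(iou(predicted, ground_truth))  # overlap 25 over union 175
```

A prediction is usually counted as correct only when its IoU with a ground truth box exceeds a chosen threshold; 0.5 is a frequently used value.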

Object detection finds applications in multiple domains, including traffic and surveillance systems, retail, autonomous vehicles, and healthcare [1-3]. Understanding its basics and nuances shows why it matters across these sectors. With advances in deep learning and new model designs, object detection continues to evolve toward more accurate and efficient applications.

Core Challenges in Object Detection

Despite the advancements and improvements in object detection models, several core challenges impact the accuracy and efficiency of detection models and need to be addressed for broader and more robust application.

Variability in object appearance is a significant challenge. Objects in images can have varied shapes, colors, textures, and orientations, making it difficult for detection algorithms to generalize across different instances of the same object class, leading to potential inaccuracies or missed detections.

Scale variations also pose a serious problem. Objects can appear in numerous sizes within images, influenced by their distance from the camera. Traditional object detection models can struggle to maintain consistency in detection across varying scales. Approaches like multi-scale representations or pyramids in models like SSD or Faster R-CNN can address this issue but add computational costs and impact detection speed.
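The cost of multi-scale processing can be estimated with a simple image pyramid, the most basic multi-scale representation. Note that SSD and similar detectors work with pyramids of feature maps rather than resized images, so this sketch only illustrates the trade-off; the function names and the `min_side` cutoff are assumptions.

```python
def pyramid_sizes(width, height, scale=0.5, min_side=32):
    """List the (width, height) of each pyramid level, largest first."""
    if not 0 < scale < 1:
        raise ValueError("scale must be between 0 and 1")
    sizes = []
    w, h = width, height
    while min(w, h) >= min_side:
        sizes.append((w, h))
        w, h = int(w * scale), int(h * scale)
    return sizes


def relative_pixel_cost(sizes):
    """Total pixels across all levels divided by pixels in the first level."""
    base_w, base_h = sizes[0]
    return sum(w * h for w, h in sizes) / (base_w * base_h)


levels = pyramid_sizes(640, 480)
print(levels)
print(relative_pixel_cost(levels))  # extra work compared with one scale
```

Halving each side quarters the pixel count, so a full pyramid costs roughly a third more pixels than the original image alone.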

Occlusions further complicate object detection. Partially or predominantly obscured objects make it difficult for the model to identify and label them accurately, leading to incomplete detection. Advanced models are being trained with more occluded data to enhance robustness, but the challenge persists.

Background clutter is another crucial issue. In many real-world scenarios, the intricate and complex background makes it challenging to distinguish the object of interest from its surroundings, increasing the likelihood of false positives. Techniques like using context-aware models that consider spatial relationships between objects and their backgrounds can help, but this area requires continuous refinement.

These challenges collectively limit the efficacy of detection models. Variability in object appearance and occlusions tend to cause false negatives, handling scale variations adds computation that can slow real-time applications, and background clutter tends to cause false positives, which increases the need for post-processing to filter them out.

Addressing these challenges requires a blend of innovative model architectures, comprehensive training with diverse datasets, and the integration of advanced techniques like transformers. Continuous improvements and updates to models are vital to enhance their robustness against these challenges. By understanding these core obstacles, researchers and engineers can develop more resilient and efficient object detection models, paving the way for wider and more effective applications across different industries [4-6].

Traditional Approaches vs. Deep Learning

Traditional methods, like Histograms of Oriented Gradients (HOG), Scale-Invariant Feature Transform (SIFT), and Haar-like features, were designed to extract specific characteristics from images to identify objects. These features were then fed into classifiers like Support Vector Machines (SVM) or decision trees. HOG descriptors compute gradient orientation histograms to capture the shape and structure of objects, while SIFT identifies key points in an image and creates descriptors based on the local image gradients around these points. While effective in controlled environments, these approaches had inherent limitations.
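The core of a HOG descriptor, a histogram of gradient orientations weighted by gradient magnitude, can be sketched for a single cell as follows. This is a simplified illustration: it skips the bin interpolation and block normalization that full HOG implementations typically apply, and the function name and the 9-bin default are assumptions.

```python
import math


def orientation_histogram(cell, num_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations.

    cell: 2D list of grayscale intensities. Only interior pixels are used,
    with central differences for the gradient. Angles fall in [0, 180).
    """
    height, width = len(cell), len(cell[0])
    hist = [0.0] * num_bins
    bin_width = 180.0 / num_bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The final modulo guards against an angle that rounds to 180.
            hist[int(angle // bin_width) % num_bins] += magnitude
    return hist


# A vertical edge: intensity changes only in the horizontal direction.
edge_cell = [[0, 0, 10, 10] for _ in range(4)]
print(orientation_histogram(edge_cell))
```

For the vertical edge above, all gradient energy lands in the first bin (orientations near 0 degrees), which is the kind of shape cue a downstream SVM would use.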

One notable disadvantage of traditional methods is their dependency on the quality of hand-engineered features. Manually designing these features is time-consuming, requires expert knowledge, and may not generalize well across different datasets or applications, leading to poor performance in more complex or varied environments. Traditional methods often struggle with scale variations, occlusions, and background clutter.

The introduction of deep learning, especially convolutional neural networks (CNNs), revolutionized object detection. CNNs learn hierarchical features directly from data through multiple layers of convolutions, pooling, and fully connected layers, eliminating the need for manual feature engineering. One of the earliest deep learning approaches to object detection was the R-CNN family, which brought significant improvements over traditional approaches. R-CNN used CNNs to extract features from proposed regions and then classified these regions using SVMs. Fast R-CNN and Faster R-CNN aimed to address efficiency issues, with the latter introducing region proposal networks (RPN) to avoid the computational bottleneck of selective search.

One-stage detectors like YOLO and SSD further simplified the object detection pipeline by predicting bounding boxes and class labels in a single pass, enabling real-time applications previously unthinkable with traditional methods. These end-to-end trainable models can optimize both feature extraction and classification simultaneously.

Deep learning approaches can handle a wide range of variations in object appearance, including changes in scale, occlusions, and background clutter, much more effectively than traditional methods. They benefit from large annotated datasets like COCO, allowing them to generalize better across different environments and applications. However, deep learning is not without its challenges and limitations. Training deep networks requires large amounts of labeled data and significant computational resources, making it less accessible for smaller organizations or individuals. Deep learning models can also be considered "black boxes," with their internal decision-making process being less interpretable compared to traditional methods, which can be a drawback in applications where understanding the basis of a decision is critical.

The transition from traditional hand-engineered feature methods to modern deep learning techniques represents a significant advancement in object detection. While traditional methods laid the groundwork and provided essential insights, deep learning has pushed the boundaries, offering superior accuracy, generalization, and real-time detection capabilities [7-10]. As researchers continue to refine these models and address existing limitations, the future of object detection promises to be even more transformative and impactful.

Deep Learning Models for Object Detection

Several notable deep learning architectures for object detection stand out, each with its own design, strengths, and favored use cases.

Region-based Convolutional Neural Networks (R-CNN) employ a region proposal method: selective search scans the input image and generates around 2000 region proposals that may contain objects. Each region is passed through a CNN to extract features, which are then classified using class-specific SVMs. While R-CNN significantly improved detection accuracy, it was computationally inefficient because the CNN runs separately on every proposal.

Fast R-CNN addressed this inefficiency by running a single CNN over the entire image to produce a shared convolutional feature map. Each region proposal is projected onto this feature map and passed through an RoI pooling layer, which converts it into a fixed-size feature vector for simultaneous object classification and bounding box regression.
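RoI pooling can be illustrated on a tiny single-channel feature map. The sketch below assumes the region is already expressed in feature-map cells as `(x0, y0, x1, y1)` with exclusive upper bounds, and uses a simple floor/ceil binning rule; the helper names are hypothetical, and production implementations differ in how they quantize bin edges.

```python
def ceil_div(a, b):
    """Integer ceiling division for non-negative a and positive b."""
    return -(-a // b)


def roi_max_pool(feature_map, roi, out_h=2, out_w=2):
    """Max-pool one region of a 2D feature map into an out_h x out_w grid."""
    x0, y0, x1, y1 = roi
    h, w = y1 - y0, x1 - x0
    if h < 1 or w < 1:
        raise ValueError("RoI must cover at least one cell")
    pooled = []
    for i in range(out_h):
        # Bin i spans rows floor(i*h/out_h) .. ceil((i+1)*h/out_h) of the RoI.
        r_start = y0 + (i * h) // out_h
        r_end = y0 + ceil_div((i + 1) * h, out_h)
        row = []
        for j in range(out_w):
            c_start = x0 + (j * w) // out_w
            c_end = x0 + ceil_div((j + 1) * w, out_w)
            row.append(max(
                feature_map[r][c]
                for r in range(r_start, r_end)
                for c in range(c_start, c_end)
            ))
        pooled.append(row)
    return pooled


# 4x4 feature map with values 0..15.
fmap = [[4 * r + c for c in range(4)] for r in range(4)]
print(roi_max_pool(fmap, (0, 0, 4, 4)))  # whole map
print(roi_max_pool(fmap, (1, 1, 4, 4)))  # 3x3 region, bins overlap
```

Whatever the size of the input region, the output grid has the same shape, which is what lets the later fully connected layers accept proposals of any size.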

Faster R-CNN revolutionized object detection by incorporating the Region Proposal Network (RPN). The RPN, fully integrated within the CNN pipeline, efficiently generates region proposals by sharing the convolutional layers used for object detection, resulting in an end-to-end trainable model with improved speed and accuracy.

YOLO (You Only Look Once) prioritizes speed by framing object detection as a single regression problem. It divides the input image into a grid and predicts bounding boxes and class probabilities for each cell in one evaluation. This streamlined process allows YOLO to achieve real-time detection speeds, making it suitable for applications requiring instant analysis. However, it can occasionally compromise on detection accuracy, especially with smaller objects or closely packed scenes.
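The grid idea can be sketched by finding which cell is responsible for a given object. The sketch assumes the convention from the original YOLO design, where the cell containing the box center makes the prediction, and a 7x7 grid; the function name and sample coordinates are hypothetical.

```python
def responsible_cell(box, image_w, image_h, grid_size=7):
    """Return (row, col, x_offset, y_offset) for the cell holding the box center.

    box is (x_min, y_min, x_max, y_max) in pixels. Offsets give the center
    position inside the cell as fractions of the cell size.
    """
    x_min, y_min, x_max, y_max = box
    # Box center, normalized to the range 0..1.
    cx = (x_min + x_max) / 2 / image_w
    cy = (y_min + y_max) / 2 / image_h
    # Clamp so a center on the right or bottom edge stays inside the grid.
    col = min(int(cx * grid_size), grid_size - 1)
    row = min(int(cy * grid_size), grid_size - 1)
    return row, col, cx * grid_size - col, cy * grid_size - row


# An object centered in a 448x448 image falls in the middle cell.
print(responsible_cell((200, 200, 248, 248), 448, 448))
```

Because each cell predicts only a small, fixed number of boxes, several small objects whose centers share a cell compete for the same predictions, which is one reason crowded scenes are harder for this design.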

The SSD (Single Shot MultiBox Detector) enhances detection accuracy by utilizing multiple feature maps at different scales to detect objects of varying sizes more effectively. Each feature map layer predicts class scores and bounding boxes, facilitating rapid and efficient multi-scale detection. SSD is widely utilized in mobile and embedded applications where computational efficiency is critical.

Recently, transformer-based models like Detection Transformers (DETR) have introduced a novel approach to object detection. DETR leverages the self-attention mechanism inherent in transformers to predict a fixed set of object detections, treating the task as a direct set prediction problem. By utilizing a bipartite matching loss, DETR ensures that each ground truth object corresponds to a predicted bounding box, reducing complexity and improving detection efficacy.
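A toy version of the bipartite matching step is sketched below. It is deliberately simplified: the cost is only the L1 distance between box coordinates, whereas a full matching cost would also account for class scores, and the search is brute force over permutations instead of the Hungarian algorithm, so it is suitable only for a handful of boxes. All names and sample boxes are hypothetical.

```python
from itertools import permutations


def l1_cost(pred_box, gt_box):
    """Sum of absolute coordinate differences between two boxes."""
    return sum(abs(p - g) for p, g in zip(pred_box, gt_box))


def match_predictions(preds, gts, cost=l1_cost):
    """Assign each ground truth box to a distinct prediction at minimum total cost.

    Returns (pairs, total_cost), where pairs is a list of (gt_index, pred_index).
    Predictions left unmatched correspond to "no object".
    """
    if len(preds) < len(gts):
        raise ValueError("need at least as many predictions as ground truths")
    best_total, best_perm = float("inf"), ()
    for perm in permutations(range(len(preds)), len(gts)):
        total = sum(cost(preds[p], gts[g]) for g, p in enumerate(perm))
        if total < best_total:
            best_total, best_perm = total, perm
    return list(enumerate(best_perm)), best_total


preds = [(0, 0, 10, 10), (50, 50, 60, 60), (100, 100, 120, 120)]
gts = [(51, 49, 61, 60), (1, 0, 10, 11)]
pairs, total = match_predictions(preds, gts)
print(pairs, total)
```

Because every ground truth box gets exactly one prediction, duplicate detections of the same object are penalized during training rather than removed afterwards.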

Each of these models showcases varying strengths and preferred applications:

- R-CNN and its derivatives are lauded for their precision

- YOLO's real-time detection capabilities cater to scenarios demanding prompt decision-making

- SSD strikes a balance, finding favor in resource-constrained environments

- Transformer-based models like DETR, with their potent global feature extraction, are forging new paths in addressing persistent challenges in object detection

Training and Evaluating Object Detection Models

Training and evaluating object detection models involves several critical steps to ensure the robustness and accuracy of the model.

Data preparation is paramount, requiring high-quality annotated datasets like the COCO dataset [1]. This data must be diverse, representing various scenarios, conditions, and object appearances. Annotation involves marking objects with bounding boxes and corresponding labels, often requiring manual verification for accuracy.

Data augmentation techniques like rotation, scaling, flipping, and color adjustments are employed to artificially increase the dataset's size and diversity. This process improves the model's robustness by learning to recognize objects under different conditions, mitigating overfitting.
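One detail specific to detection is that geometric augmentations must transform the bounding boxes along with the pixels. The sketch below shows this for a horizontal flip, assuming the image is a list of pixel rows and boxes are `(x_min, y_min, x_max, y_max)` measured from the left and top image edges; the function name is hypothetical.

```python
def horizontal_flip(image, boxes):
    """Mirror an image left to right and update its bounding boxes to match."""
    width = len(image[0])
    flipped_image = [row[::-1] for row in image]
    # The old right edge becomes the new left edge, measured from the other side.
    flipped_boxes = [
        (width - x_max, y_min, width - x_min, y_max)
        for x_min, y_min, x_max, y_max in boxes
    ]
    return flipped_image, flipped_boxes


image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
boxes = [(0, 0, 1, 2)]  # covers the leftmost column
flipped_image, flipped_boxes = horizontal_flip(image, boxes)
print(flipped_image, flipped_boxes)
```

Color adjustments, by contrast, leave the boxes unchanged, which makes them simpler to apply.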

During training, the model undergoes fine-tuning with the prepared dataset. Modern training strategies employ advanced techniques like learning rate scheduling and regularization methods to enhance training efficiency and prevent overfitting.
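As one example of learning rate scheduling, the sketch below combines a linear warmup with cosine decay. The text does not say which schedule is used, so this particular shape, the function name, and the default values are all assumptions for illustration.

```python
import math


def learning_rate(step, total_steps, base_lr=0.01, warmup_steps=100, min_lr=0.0):
    """Learning rate at a given step: linear warmup, then cosine decay."""
    if step < warmup_steps:
        # Ramp up from a small value to base_lr over the warmup period.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    # Cosine curve from base_lr (progress 0) down to min_lr (progress 1).
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for step in (0, 99, 550, 1000):
    print(step, learning_rate(step, total_steps=1000))
```

Starting small avoids large, unstable updates early in training, and the gradual decay lets the model settle as training ends.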

Evaluating object detection models utilizes specific metrics, with intersection over union (IoU) and mean average precision (mAP) being the most prominent [2]. IoU measures the overlap between the predicted bounding box and the ground truth, while mAP provides a comprehensive overview of the model's detection performance across all classes.
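The mAP computation can be sketched as follows. The example assumes each detection has already been marked as a true or false positive (for instance by an IoU threshold against the ground truth) and uses the all-points interpolation of the precision-recall curve, which is one of several conventions; benchmark suites define their own exact variants. Function names and sample data are hypothetical.

```python
def average_precision(scored_hits, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class.

    scored_hits: list of (confidence, is_true_positive) per detection.
    num_ground_truth: number of ground truth objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(scored_hits, key=lambda hit: hit[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Interpolate: precision at each point becomes the best precision
    # achieved at that recall or any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


def mean_average_precision(per_class):
    """Mean of per-class AP. per_class maps label -> (scored_hits, num_gt)."""
    aps = [average_precision(hits, n) for hits, n in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0


results = {
    "car": ([(0.9, True), (0.8, False), (0.7, True)], 2),
    "person": ([(0.6, True)], 1),
}
print(mean_average_precision(results))
```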

These metrics are indispensable for quantitative evaluation, benchmarking, diagnosing potential issues, and guiding model refinement during hyperparameter tuning.

Training and evaluating object detection models is a multifaceted process involving thorough data preparation, strategic augmentation, and rigorous evaluation. The nuances of this process ensure that models generalize effectively to new, unseen data, pushing the boundaries of what these models can achieve in practical applications.

Applications and Use Cases

Object detection models have found applications in various domains, providing enhanced capabilities and improving efficiency.

In surveillance systems, advanced cameras equipped with these models can automatically detect and track suspicious activities, optimizing response times and improving public safety.

Autonomous driving relies on object detection algorithms to recognize various road elements, from vehicles and pedestrians to traffic signs and obstacles. By accurately identifying and reacting to surrounding objects, these systems support both safety and efficiency.

Healthcare has benefited from object detection in medical imaging, where deep learning models assist radiologists in identifying anomalies and early signs of diseases, enabling early intervention and improving patient outcomes.

Retail industry applications include:

- Inventory management, where automated systems scan shelves and stock levels, ensuring timely restocking

- Cashier-less shopping, where stores like Amazon Go employ these models to provide a seamless checkout process

- Loss prevention, where intelligent cameras continuously monitor store activities to identify potential theft or fraud, reducing losses and enhancing overall store management

These real-world applications underscore the versatility and transformative power of object detection across various industries. As technology progresses, the precision and efficiency of these models will continue to shape and enhance numerous applications, making them indispensable tools in our modern world.

Understanding the intricacies of object detection reveals its pivotal role in advancing various fields.

This post was written by Writio.