Introduction to Deep Learning in Computer Vision

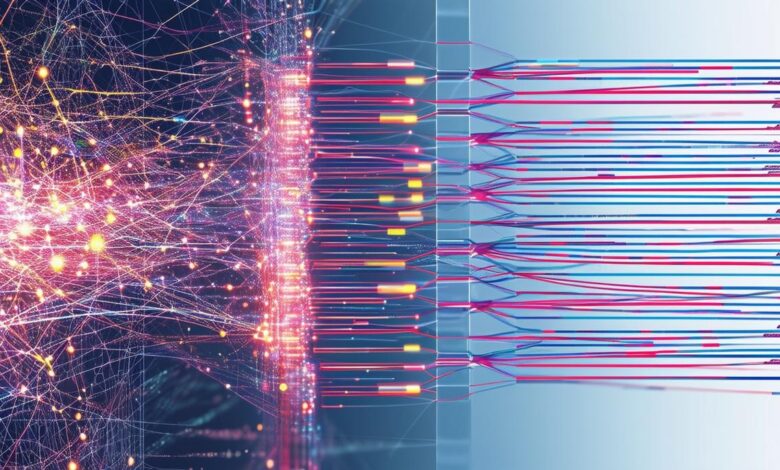

Deep learning has revolutionized image and video processing, transforming everything from basic tasks to complex systems. Convolutional Neural Networks (CNNs) excel by analyzing images layer by layer, identifying key features progressively. This approach has driven significant progress in fields such as autonomous vehicles and medical imaging.

The ResNet-50 model employs residual blocks to mitigate the vanishing gradient problem, which makes much deeper networks trainable. YOLO (You Only Look Once) prioritizes speed, processing each image in a single forward pass, which makes it well suited to real-time applications. Vision Transformers (ViTs) adapt the Transformer architecture from natural language processing, dividing images into patches and adding positional information to each patch embedding.

Stable Diffusion V2 is a text-to-image model that generates images conditioned on text prompts. Frameworks such as TensorFlow, PyTorch, and Keras provide tools for building, training, and deploying these models.

Core Deep Learning Architectures for Computer Vision

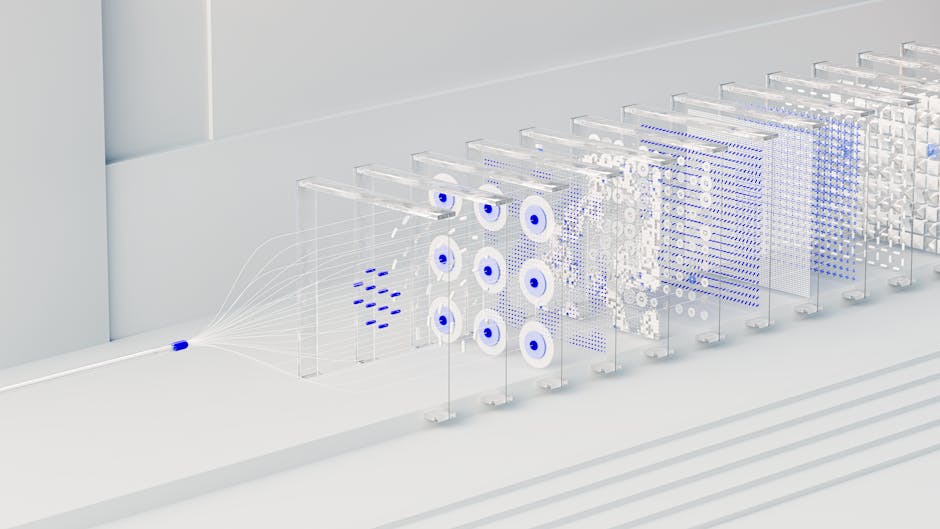

Convolutional Neural Networks (CNNs) form the foundation of deep learning in computer vision. They apply filters to input images, extracting features like edges or textures. Deeper layers capture more complex features, from shapes to entire objects.

Key components of CNNs:

- Convolutional layers

- Pooling layers

- Fully connected layers
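
To make the three components listed above concrete, here is a minimal sketch in plain Python with no framework. The function names, the toy 5x5 image, and the hand-picked edge filter are all illustrative assumptions; a trained CNN learns its filter weights rather than having them written by hand.

```python
# Minimal pure-Python sketch of the three CNN building blocks.
# All names and values here are illustrative assumptions.

def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def fully_connected(inputs, weights, bias):
    """Dense layer: one weighted sum plus bias per output unit."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, bias)]

# 5x5 image: dark left part (0), bright right part (1), so one vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge filter (hypothetical; normally learned).
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)   # 4x4 feature map, responds only at the edge
pooled = max_pool2d(features)      # 2x2 map after pooling
flat = [v for row in pooled for v in row]
score = fully_connected(flat, [[0.5, 0.5, 0.5, 0.5]], [0.0])
```

The convolution responds only where the dark-to-bright edge sits, pooling shrinks the map while keeping that response, and the dense layer combines the pooled values into a single score.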

ResNet (Residual Networks) is a notable CNN architecture. Its innovative residual blocks use skip connections to bypass layers, addressing the vanishing gradient issue in deep networks. This allows for deeper, more efficient networks capable of learning complex patterns without degradation.
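
The effect of skip connections on gradients can be illustrated with a deliberately simplified model. The sketch below assumes each layer is a scalar multiplication by a small weight, which is not how ResNet layers are built (they use convolutions, normalization, and nonlinearities); it only shows why adding the input back keeps the gradient from shrinking toward zero. All function names are hypothetical.

```python
def plain_gradient(weights):
    """d(output)/d(input) for a chain of scalar layers x -> w * x."""
    grad = 1.0
    for w in weights:
        grad *= w
    return grad

def residual_gradient(weights):
    """Same chain with a skip connection per layer: x -> x + w * x."""
    grad = 1.0
    for w in weights:
        grad *= 1.0 + w  # the "1.0" is the identity path of the skip connection
    return grad

def residual_block(x, transform):
    """Output = input + transform(input); the '+ input' term is the skip connection."""
    return [xi + fi for xi, fi in zip(x, transform(x))]

weights = [0.05] * 20                    # 20 layers with small weights (assumed values)
plain = plain_gradient(weights)          # shrinks toward zero
skipped = residual_gradient(weights)     # stays on the order of 1

# If the transform outputs zeros, the block passes its input through unchanged.
identity_out = residual_block([1.0, 2.0], lambda x: [0.0 for _ in x])
```

In the plain chain the gradient is a product of small numbers and collapses, while the identity path in each residual layer keeps every factor at or above 1 in this toy setting.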

YOLO (You Only Look Once) uses a single convolutional network to simultaneously predict bounding boxes and class probabilities. This end-to-end method processes entire images at once, making YOLO exceptionally fast and suitable for real-time applications.
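
As a rough illustration of how a single output grid can carry both boxes and class probabilities, the sketch below decodes a hypothetical 2x2 prediction grid in the style of the original YOLO formulation. It assumes one box per cell laid out as `[x, y, w, h, confidence, class probabilities...]`; real YOLO versions predict several boxes per cell and apply further post-processing such as non-maximum suppression, which is omitted here.

```python
def decode_grid(predictions, threshold=0.5):
    """Turn a grid of raw cell predictions into a list of detections.

    predictions[row][col] = [x, y, w, h, confidence, p_class0, p_class1, ...]
    x, y are offsets inside the cell (0..1); w, h are fractions of the image.
    """
    grid_size = len(predictions)
    detections = []
    for row in range(grid_size):
        for col in range(grid_size):
            x, y, w, h, conf, *class_probs = predictions[row][col]
            best_class = max(range(len(class_probs)), key=class_probs.__getitem__)
            # Class-specific score: box confidence times class probability.
            score = conf * class_probs[best_class]
            if score >= threshold:
                # Convert cell-relative centre to image-relative coordinates.
                cx = (col + x) / grid_size
                cy = (row + y) / grid_size
                detections.append((best_class, score, cx, cy, w, h))
    return detections

# Hypothetical network output: only the top-right cell contains an object.
empty = [0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 0.5]
grid = [
    [empty, [0.5, 0.5, 0.4, 0.6, 0.9, 0.1, 0.9]],
    [empty, empty],
]
detections = decode_grid(grid)
```

Because every cell is decoded from the same output, boxes and classes for the whole image come from one forward pass, which is the source of YOLO's speed.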

Vision Transformers (ViTs) divide images into fixed-size patches, processing them as sequences with embedded positional information. Multi-head attention mechanisms allow ViTs to focus on different image parts simultaneously, providing a comprehensive understanding of visual content.
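
The patch-and-attend idea can be sketched in plain Python. This is a simplification under several assumptions: the image dimensions are divisible by the patch size, positional information is a made-up fixed offset rather than a learned embedding, and attention uses a single head with identity query/key/value projections instead of the learned multi-head projections of a real ViT.

```python
import math

def split_into_patches(image, patch_size):
    """Cut a 2D image (list of rows) into flattened, non-overlapping patches, row-major."""
    patches = []
    for top in range(0, len(image), patch_size):
        for left in range(0, len(image[0]), patch_size):
            patches.append([image[top + i][left + j]
                            for i in range(patch_size)
                            for j in range(patch_size)])
    return patches

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product attention with identity Q/K/V (simplified)."""
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        weights = softmax(scores)
        # Each output is a weighted average of all tokens.
        outputs.append([sum(w * value[d] for w, value in zip(weights, tokens))
                        for d in range(dim)])
    return outputs

image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 image, values 0..15
patches = split_into_patches(image, 2)                     # four 2x2 patches

# Hypothetical positional signal: scale pixels to 0..1, add a per-position offset.
tokens = [[v / 15 + 0.1 * idx for v in patch] for idx, patch in enumerate(patches)]
attended = self_attention(tokens)
```

Each output token is a weighted mix of every patch, so information from distant image regions can be combined in a single layer.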

Stable Diffusion V2 is a text-to-image model that transforms textual descriptions into high-quality images. Its release also includes a super-resolution upscaler that converts low-resolution images into detailed, high-resolution outputs.

Practical Applications of Deep Learning in Computer Vision

Autonomous vehicles rely on CNNs and other deep learning architectures to interpret their surroundings. These vehicles combine cameras, LIDAR, and radar to gather data, processed in real-time to identify objects, detect lane lines, and predict other road users' behavior.

In medical imaging, Convolutional Neural Networks can analyze X-rays, MRIs, and CT scans to detect anomalies like tumors or other pathological changes with high precision. Deep learning models are also used to predict the progression of diseases like Alzheimer's by analyzing brain scans [1].

Surveillance systems have improved through deep learning advancements. Models like YOLO and ResNet can now monitor live feeds, detect suspicious activities, and identify individuals in crowds with improved reliability.

In augmented reality (AR), deep learning enhances user experiences by enabling more accurate integration of virtual objects with the real world. Vision Transformers and other deep learning models analyze and understand spatial environments, ensuring virtual elements behave realistically.

In robotics, deep learning-powered computer vision enables robots to perform intricate tasks such as:

- Picking and placing objects in manufacturing settings

- Assisting in surgeries

- Recognizing objects and their properties

- Adapting to environmental changes

- Performing actions with precision

Tools and Frameworks for Deep Learning in Computer Vision

TensorFlow, developed by Google, is a deep learning framework for building and training complex neural networks. Its automatic differentiation computes the gradients that training relies on, so users do not have to derive them by hand. TensorFlow runs on CPUs, GPUs, and TPUs, which makes it suitable for large-scale projects.
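
To show what automatic differentiation does conceptually, here is a tiny reverse-mode sketch in plain Python. It is not TensorFlow's implementation or API; the `Value` class and its methods are hypothetical, and it supports only scalar addition and multiplication.

```python
class Value:
    """Scalar that records how it was computed so gradients can flow backward."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # inputs this value was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        """Apply the chain rule from this value back to every input."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Reverse topological order: a node is handled after everything that uses it.
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad

# Toy model: y = w * x + b, loss = y * y (values are arbitrary assumptions).
w, x, b = Value(3.0), Value(2.0), Value(1.0)
y = w * x + b
loss = y * y
loss.backward()
```

After `backward()`, `w.grad`, `x.grad`, and `b.grad` hold the derivatives of `loss` with respect to each input, which is the information an optimizer needs to update the weights.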

PyTorch, developed by Meta, provides a dynamic and flexible environment for rapid experimentation. Its dynamic computation graph enables real-time adjustments to network architecture, facilitating debugging and model iteration. PyTorch uses tensors for fast data processing, making it efficient for deep learning tasks.

Keras streamlines model building with a user-friendly, high-level API. It ships with TensorFlow as tf.keras, and Keras 3 can also run on JAX and PyTorch backends. It is particularly useful for rapid prototyping because it abstracts much of the complexity of neural network creation.

Other notable tools and frameworks:

- Apache MXNet: Offers bindings for several languages on top of a C++ backend for high-speed execution.

- JAX: Combines automatic differentiation with just-in-time compilation via XLA for fast numerical computing.

- Hugging Face Transformers: Offers an extensive repository of pre-trained models, datasets, and tools.

- ML.NET: Integrates with the .NET ecosystem and supports various machine learning tasks.

- Shogun: Efficiently handles large datasets and provides a collection of machine learning algorithms.

- Pandas: Crucial in preparing datasets for machine learning models, facilitating exploratory data analysis.

"The right tool can make all the difference in deep learning projects. Choose wisely based on your specific needs and expertise."

Challenges and Future Directions in Deep Learning for Computer Vision

Deep learning in computer vision faces significant challenges despite its advancements:

- High computational costs for training models require expensive hardware and raise environmental concerns

- Data scarcity, especially in fields like medical imaging, hinders model development

- Interpretability remains a critical issue, particularly for complex models used in sensitive applications

However, ongoing research offers promising solutions:

- Novel architectures like Vision Transformers show potential for handling complex tasks

- Advancements in hardware, such as AI accelerators and neuromorphic chips, may improve efficiency and accessibility

- Integration with edge computing could enable real-time processing for applications like autonomous vehicles

Emerging trends include:

- The convergence of deep learning with quantum computing and neuroscience, which could lead to more efficient and accurate visual systems

- Research into unsupervised and self-supervised learning, aiming to reduce reliance on labeled data

As the field progresses, these challenges will likely be addressed through a combination of technological advances, new approaches, and interdisciplinary collaboration.

In summary, deep learning has surpassed traditional computer vision limitations, enabling applications once considered science fiction. From object identification to image generation and human action prediction, deep learning continues to set new standards in the field.
