Naive Bayes Classifier: A Complete Guide to Probabilistic Machine Learning

Introduction

The Naive Bayes Classifier stands as one of machine learning’s most elegant yet powerful algorithms, combining probabilistic theory with practical efficiency. Despite its “naive” name, this classifier has proven remarkably effective in real-world applications, from spam detection to medical diagnosis.

What Is a Naive Bayes Classifier?

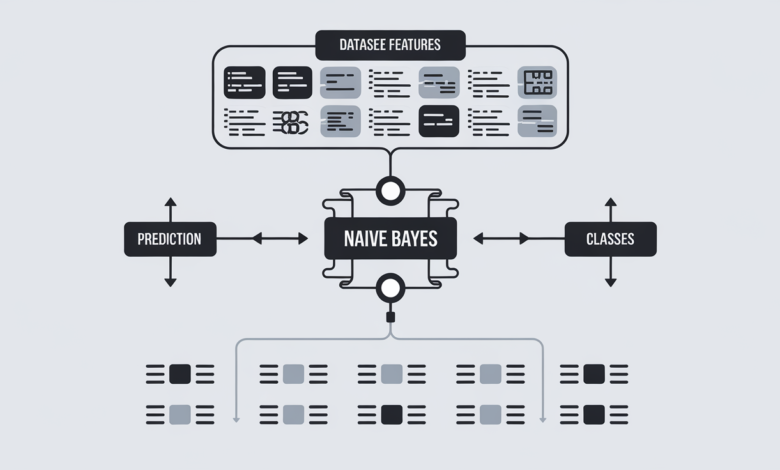

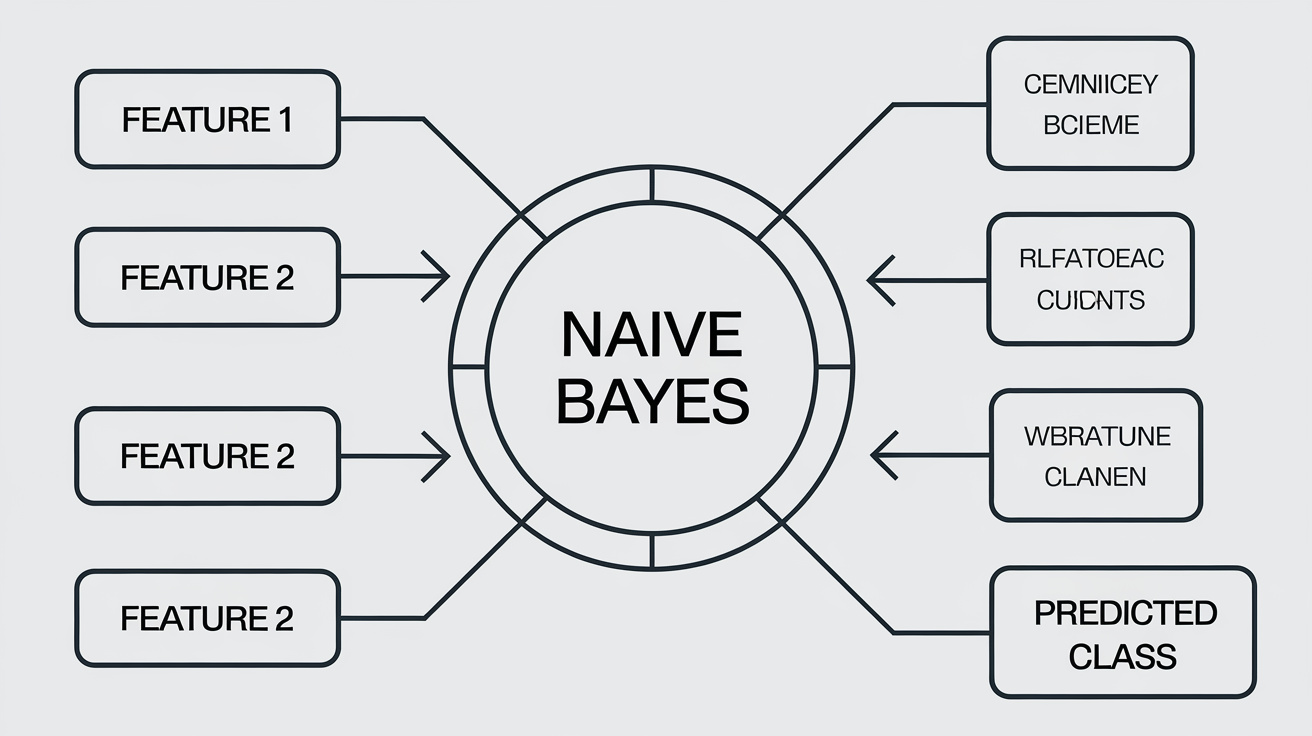

A Naive Bayes Classifier is a probabilistic machine learning model based on Bayes’ Theorem, used for classification tasks.

The Naive Bayes algorithm is suitable not only for binary classification but also for multi-class classification with more than two classes.

What makes it “naive” is its core assumption that all features in a dataset are independent of each other given the class. This simplification, surprisingly, often works very well in practice.

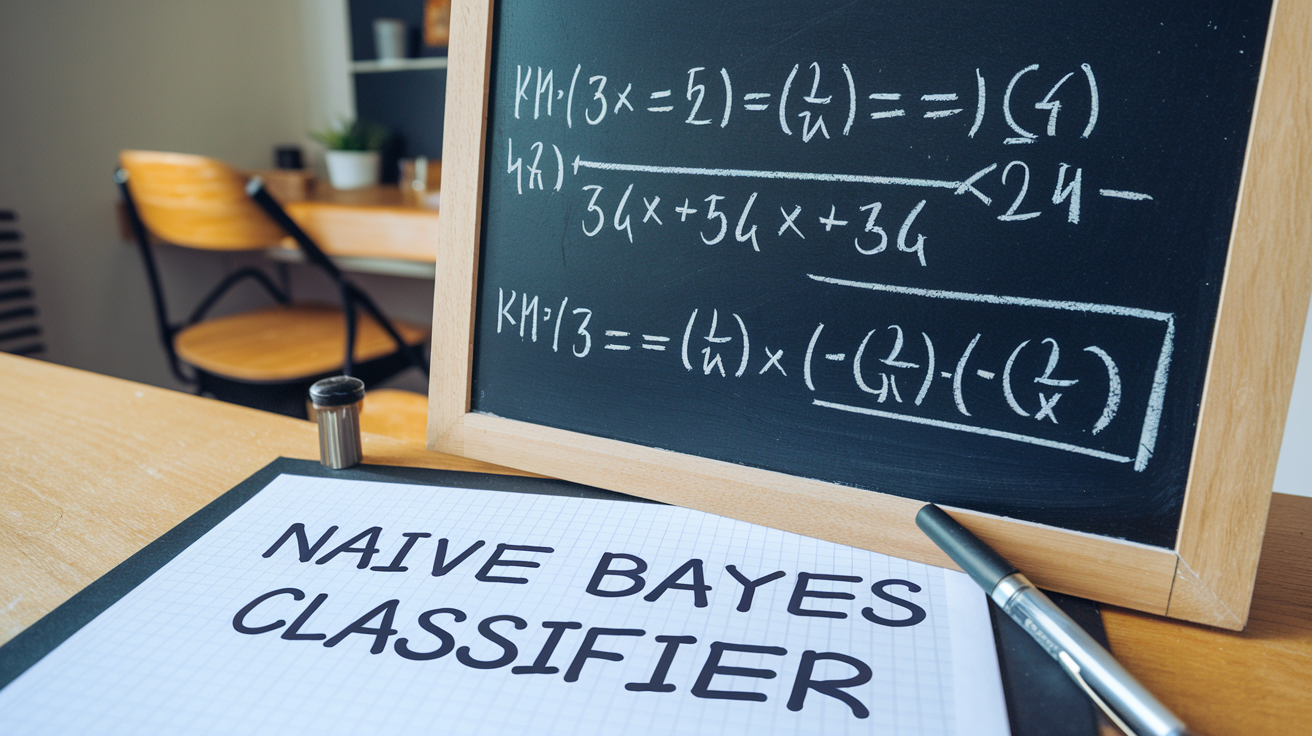
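In symbols, and as a sketch rather than a full derivation: for a class $y$ and features $x_1, \dots, x_n$ (notation introduced here for illustration, not taken from the figures), the independence assumption lets the classifier score each class as its prior times a product of per-feature likelihoods, then pick the highest-scoring class:

```latex
P(y \mid x_1, \dots, x_n) \;\propto\; P(y) \prod_{i=1}^{n} P(x_i \mid y),
\qquad
\hat{y} = \arg\max_{y} \; P(y) \prod_{i=1}^{n} P(x_i \mid y)
```

The different Naive Bayes variants differ mainly in how they model each per-feature term $P(x_i \mid y)$.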

Understanding Bayes’ Theorem

At the heart of the Naive Bayes Classifier lies Bayes’ Theorem. It works with conditional probabilities: the probability of an event occurring given that another event has already occurred. The theorem can be expressed as:

P(A|B) = P(B|A) * P(A) / P(B)

Where:

- P(A|B) is the posterior probability

- P(B|A) is the likelihood

- P(A) is the prior probability

- P(B) is the marginal probability
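To make the four terms concrete, here is a small worked example. The numbers are hypothetical and chosen only for illustration: A is "the email is spam" and B is "the email contains the word offer". The helper name `posterior` is also just an illustrative choice.

```python
def posterior(prior: float, likelihood: float, likelihood_given_not: float) -> float:
    """Return P(A|B) from P(A), P(B|A) and P(B|not A) using Bayes' Theorem."""
    # Marginal P(B) via the law of total probability
    marginal = likelihood * prior + likelihood_given_not * (1.0 - prior)
    return likelihood * prior / marginal


# Hypothetical inputs: 20% of emails are spam; "offer" appears in
# 60% of spam emails and in 5% of non-spam emails
p_spam_given_offer = posterior(prior=0.2, likelihood=0.6, likelihood_given_not=0.05)
print(round(p_spam_given_offer, 3))  # 0.75
```

Under these assumed numbers, seeing the word raises the probability of spam from the 20% prior to a 75% posterior.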

Naive Bayes often performs well on problems such as text analysis. It is a poor fit for regression problems, however, which is why it is mainly used for classification tasks.

Types of Naive Bayes Classifiers

1. Gaussian Naive Bayes

Best suited for continuous features that are assumed to follow a normal (Gaussian) distribution within each class. Here’s a simple implementation using scikit-learn:

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

# Create a simple classifier
gnb = GaussianNB()

# Example data: four samples with two continuous features each
X = np.array([[1.0, 2.0], [2.0, 3.0], [3.0, 4.0], [4.0, 5.0]])
y = np.array([0, 0, 1, 1])

# Train the model
gnb.fit(X, y)

# Make predictions
predictions = gnb.predict([[2.5, 3.5]])
```

2. Multinomial Naive Bayes

Ideal for discrete data like word counts in text classification:

```python
from sklearn.naive_bayes import MultinomialNB
from sklearn.feature_extraction.text import CountVectorizer

# Create vectorizer and classifier
vectorizer = CountVectorizer()
mnb = MultinomialNB()

# Example text data
texts = ["This is good", "This is bad", "This is great"]
labels = [1, 0, 1]

# Transform text to vectors
X = vectorizer.fit_transform(texts)

# Train the model
mnb.fit(X, labels)
```

3. Bernoulli Naive Bayes

Well suited to binary (0/1) feature vectors, such as the presence or absence of a word:

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB

# Create classifier
bnb = BernoulliNB()

# Binary feature example
X = np.array([[0, 1], [1, 0], [1, 1]])
y = np.array([0, 1, 1])

# Train model
bnb.fit(X, y)
```

Real-World Applications

Text Classification

- Spam email filtering

- Sentiment analysis

- Document categorization
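As a rough sketch of the spam-filtering case, the snippet below chains a `CountVectorizer` and a `MultinomialNB` with scikit-learn's `make_pipeline`. The four training messages and their labels are made up for illustration; a real filter would need far more data.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Tiny hypothetical corpus: 1 = spam, 0 = not spam
texts = [
    "win a free prize now",
    "free money claim your prize",
    "meeting agenda for monday",
    "lunch with the project team",
]
labels = [1, 1, 0, 0]

# The pipeline turns raw text into word counts, then fits the classifier
spam_filter = make_pipeline(CountVectorizer(), MultinomialNB())
spam_filter.fit(texts, labels)

# Classify unseen messages
new_messages = ["claim your free prize", "agenda for the team meeting"]
predicted = spam_filter.predict(new_messages)
print(predicted)
```

Wrapping both steps in one pipeline means new messages are vectorized with the same vocabulary that was learned during training.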

Medical Diagnosis

- Disease prediction based on symptoms

- Patient risk assessment

Real-time Prediction

- Weather forecasting

- Market analysis

Advantages of Naive Bayes

Simple and Efficient

- Easy to implement

- Fast training and prediction

- Works well with high-dimensional data

Works with Limited Data

- Performs well even with small training datasets

- Can still make predictions when some feature values are missing, since each feature contributes to the result independently

Scalability

- Highly scalable for large datasets

- Parallel processing compatible
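One way scalability can show up in practice is incremental training. The sketch below uses the `partial_fit` method that scikit-learn's Naive Bayes estimators provide, feeding the model one batch at a time instead of the whole dataset. The synthetic blobs and the batch size of 100 are assumptions made only for this illustration.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

rng = np.random.default_rng(0)

# Synthetic data for illustration: two well-separated Gaussian blobs
X = np.vstack([
    rng.normal(0.0, 1.0, size=(500, 2)),
    rng.normal(5.0, 1.0, size=(500, 2)),
])
y = np.array([0] * 500 + [1] * 500)

# Shuffle so every batch contains a mix of both classes
order = rng.permutation(len(y))
X, y = X[order], y[order]

clf = GaussianNB()
classes = np.array([0, 1])  # the full label set must be given on the first call

# Train in batches of 100 rows, as if the data arrived in chunks
for start in range(0, len(y), 100):
    batch = slice(start, start + 100)
    clf.partial_fit(X[batch], y[batch], classes=classes)

print(clf.score(X, y))
```

Because the model only keeps running per-class statistics, each batch can be discarded after it has been processed.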

Limitations and Considerations

Independence Assumption

- Features are assumed to be conditionally independent given the class

- Real-world features are often correlated, which can make the predicted probabilities overconfident
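A small experiment can illustrate what goes wrong when the assumption is violated. In this sketch, which uses synthetic data invented for the purpose, the same feature is copied three times. The copies carry no new information, yet the classifier counts the evidence three times and reports a more extreme probability.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

rng = np.random.default_rng(0)

# Synthetic, balanced two-class data with a single informative feature
X = np.concatenate([
    rng.normal(0.0, 1.0, 200),
    rng.normal(1.0, 1.0, 200),
]).reshape(-1, 1)
y = np.array([0] * 200 + [1] * 200)

# Three identical columns: perfectly dependent "features"
X_dup = np.hstack([X, X, X])

x_new = np.array([[1.5]])
x_new_dup = np.hstack([x_new, x_new, x_new])

p_single = GaussianNB().fit(X, y).predict_proba(x_new)[0]
p_dup = GaussianNB().fit(X_dup, y).predict_proba(x_new_dup)[0]

print("one copy:    ", p_single)
print("three copies:", p_dup)
```

The predicted class is the same in both cases here, which is one reason Naive Bayes can still classify well even when its probability estimates are poorly calibrated.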

Zero Frequency Problem

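- A feature value that never occurs with a class in the training data gets a probability of zero, which zeroes out the whole product for that class

- Can be addressed using Laplace smoothing

- Requires careful handling of unseen data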
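The effect of Laplace (additive) smoothing can be sketched with plain arithmetic. The function name and the counts below are hypothetical; the formula adds a constant `alpha` to every count so that no likelihood is exactly zero.

```python
def smoothed_likelihood(word_count: int, total_count: int,
                        vocab_size: int, alpha: float = 1.0) -> float:
    """Additive smoothing: (count + alpha) / (total + alpha * vocab_size)."""
    return (word_count + alpha) / (total_count + alpha * vocab_size)


# Hypothetical counts: a word that never appeared in the spam class,
# which has 100 word occurrences in total over a 50-word vocabulary
unsmoothed = smoothed_likelihood(0, 100, 50, alpha=0.0)
smoothed = smoothed_likelihood(0, 100, 50, alpha=1.0)

print(unsmoothed)  # 0.0
print(smoothed)
```

In scikit-learn, `MultinomialNB` and `BernoulliNB` expose this constant as the `alpha` parameter, which defaults to 1.0.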

Best Practices for Implementation

- Data Preprocessing

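```python
from sklearn.preprocessing import StandardScaler

# Standardize continuous features (for example, before GaussianNB).
# Do not apply this before MultinomialNB, which requires non-negative inputs.
scaler = StandardScaler()
X_scaled = scaler.fit_transform(X)
```

- Model Evaluation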

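```python
from sklearn.metrics import accuracy_score, classification_report

# Evaluate on held-out data. X_test and y_test are assumed to come from
# train_test_split, with gnb already fitted on the training portion.
y_pred = gnb.predict(X_test)
print(accuracy_score(y_test, y_pred))
print(classification_report(y_test, y_pred))
```

- Hyperparameter Tuning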

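```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import GaussianNB

# Parameter grid: 100 var_smoothing values from 1 down to 1e-9
param_grid = {
    'var_smoothing': np.logspace(0, -9, num=100)
}

# Grid search with 5-fold cross-validation
# (X_train and y_train are assumed to come from train_test_split)
grid_search = GridSearchCV(GaussianNB(), param_grid, cv=5)
grid_search.fit(X_train, y_train)
```

Conclusion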

The Naive Bayes Classifier remains a fundamental algorithm in machine learning, offering a practical balance of simplicity and effectiveness. While its assumptions may be “naive,” its performance in real-world applications proves its worth as a reliable classification method.

If you have run into any difficulty, please make good use of the comment section; I will personally be there to help when you are stuck. You can also use the FAQs section below to understand more.

We think that sharing practical implementations of machine learning skills on real-world examples is the key to mastery, and a way to help solve problems that affect our society. We intend to teach through practical means. If you think our idea is good, please leave us a comment below with your views or a request for an article. As usual, don’t forget to up-vote this article and share it.

FAQs

- Why is it called “Naive” Bayes?

  - The name comes from the “naive” assumption of feature independence.

- When should I use Naive Bayes?

  - Text classification tasks

  - When features are relatively independent

  - When quick training and prediction are needed

- How does it compare to other classifiers?

  - Often simpler than alternatives

  - Faster training than complex models

  - Good performance with high-dimensional data

- What are the prerequisites for using Naive Bayes?

  - Basic understanding of probability

  - Knowledge of feature independence concepts

  - Familiarity with data preprocessing

- Can Naive Bayes handle missing data?

  - In principle, yes: because each feature contributes to the prediction independently, a missing value can be left out of the calculation

  - In practice, many library implementations, scikit-learn's included, expect complete input, so missing values usually need to be imputed or dropped first
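The idea of ignoring missing values during calculation can be sketched with a small hand-written Gaussian Naive Bayes in NumPy. This is a minimal illustration under stated assumptions, not how any particular library works: the function names `fit_gaussian_nb` and `predict_one` are invented for this example, missing entries are marked as NaN, and a small constant is added to the variances for numerical stability.

```python
import numpy as np


def fit_gaussian_nb(X, y):
    """Estimate per-class priors, means and variances, ignoring NaN entries."""
    classes = np.unique(y)
    priors = np.array([np.mean(y == c) for c in classes])
    means = np.array([np.nanmean(X[y == c], axis=0) for c in classes])
    variances = np.array([np.nanvar(X[y == c], axis=0) for c in classes]) + 1e-9
    return classes, priors, means, variances


def predict_one(x, classes, priors, means, variances):
    """Sum Gaussian log-likelihoods only over the features present in x."""
    present = ~np.isnan(x)
    log_post = np.log(priors)
    for k in range(len(classes)):
        m, v = means[k, present], variances[k, present]
        log_post[k] += np.sum(-0.5 * np.log(2 * np.pi * v)
                              - (x[present] - m) ** 2 / (2 * v))
    return classes[np.argmax(log_post)]


# Hypothetical toy data with missing values marked as NaN
X = np.array([
    [1.0, 2.0], [1.2, np.nan], [0.8, 2.2],
    [4.0, 5.0], [4.2, 5.1], [np.nan, 4.9],
])
y = np.array([0, 0, 0, 1, 1, 1])

model = fit_gaussian_nb(X, y)

# The first feature is missing, so only the second one is used
print(predict_one(np.array([np.nan, 5.0]), *model))
```

If every feature of a sample is missing, this sketch falls back to the class priors alone.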