Reinforcement Learning (RL) is a distinct approach to machine learning that sits alongside supervised and unsupervised learning. Rather than learning from labeled examples or from unlabeled data alone, an RL agent learns by interacting with an environment. By emphasizing interaction and feedback, RL provides a framework for building systems that make sequential decisions and improve with experience. This article covers RL's fundamental concepts, its integration with deep learning, key algorithms, practical applications, current challenges, and future directions.

Introduction to Reinforcement Learning

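Reinforcement Learning (RL) is often described as occupying a space between supervised and unsupervised learning. Like supervised learning it receives feedback, but that feedback is an evaluative reward rather than a correct label. An RL agent learns through interaction: it takes actions, observes the results, and adjusts its behavior based on the rewards it receives.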

Key concepts in RL include:

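- Agent: The learner and decision-maker

- Environment: The world the agent interacts with; it responds to each action by moving to a new state and emitting a reward

- State: A representation of the current situation

- Action: A choice the agent can make in a given state

- Reward: A scalar feedback signal from the environment indicating how good the outcome of the last action was

- Policy: The agent's strategy for selecting actions based on the current state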
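These concepts fit together in a simple loop. The agent observes the state and its policy picks an action. The environment then returns the next state and a reward. The sketch below illustrates that loop on a hypothetical five-state corridor. The environment, the `env_step` and `run_episode` names, and the reward of 1 for reaching the goal are illustrative assumptions, not a standard API.

```python
from typing import Callable, Tuple

# Hypothetical toy environment: a 1-D corridor of states 0..4.
# The agent starts at state 0; reaching state 4 ends the episode with reward 1.
N_STATES = 5
GOAL = N_STATES - 1
LEFT, RIGHT = 0, 1

def env_step(state: int, action: int) -> Tuple[int, float, bool]:
    """Environment: return (next_state, reward, done) for the chosen action."""
    move = 1 if action == RIGHT else -1
    next_state = min(max(state + move, 0), GOAL)  # walls at both ends
    done = next_state == GOAL
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def run_episode(policy: Callable[[int], int], max_steps: int = 100) -> Tuple[float, int]:
    """Agent-environment loop: observe state, act via the policy, collect reward."""
    state, total_reward = 0, 0.0
    for t in range(1, max_steps + 1):
        action = policy(state)                         # the agent decides
        state, reward, done = env_step(state, action)  # the environment responds
        total_reward += reward                         # reward is the feedback signal
        if done:
            return total_reward, t
    return total_reward, max_steps

print(run_episode(lambda s: RIGHT))  # (1.0, 4)
```

A policy that always moves right reaches the goal in four steps. A policy that always moves left never does and runs until `max_steps`.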

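There are two main types of RL algorithms: model-based and model-free. Model-based algorithms learn or are given a model of the environment's dynamics (how states transition and which rewards follow) and use it to plan or simulate outcomes. Model-free algorithms skip the model and learn values or policies directly from sampled experience.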

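A Markov Decision Process (MDP) formalizes sequential decision-making in RL. Its defining Markov property is that the probability distribution of the next state and reward depends only on the current state and action, not on the earlier history. Bellman equations build on this structure. They express the value of a state, meaning the expected discounted sum of future rewards, recursively in terms of the immediate reward and the value of the next state.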
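The following equations state the Markov property and the discounted return G_t. They use R_t for the reward received after acting at time t, the same convention as the Bellman expectation equation in the Core Algorithms section, and a discount factor 0 ≤ γ ≤ 1:

$$
\Pr(s_{t+1}, R_t \mid s_t, a_t) = \Pr(s_{t+1}, R_t \mid s_0, a_0, R_0, \ldots, s_t, a_t)
$$

$$
G_t = R_t + \gamma R_{t+1} + \gamma^2 R_{t+2} + \cdots = R_t + \gamma G_{t+1}, \qquad V^{\pi}(s) = \mathbb{E}_{\pi}\left[ G_t \mid s_t = s \right]
$$

Start from the recursion G_t = R_t + γG_{t+1} and take the expectation. The Markov property lets you replace G_{t+1} by its expected value V^π(s_{t+1}), which yields the Bellman expectation equation:

$$
V^{\pi}(s) = \mathbb{E}_{\pi}\left[ R_t + \gamma V^{\pi}(s_{t+1}) \mid s_t = s \right]
$$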

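Dynamic Programming (DP) provides two classic methods for finding an optimal policy when the MDP's model is known. Value Iteration repeatedly applies the Bellman optimality update until the state values converge, then acts greedily with respect to them. Policy Iteration alternates between evaluating the current policy and improving it greedily until the policy stops changing.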
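As an illustration, here is a minimal Value Iteration sketch on a hypothetical corridor MDP. It assumes the model (`model(s, a)` returning the next state and reward) is fully known, as DP requires. The discount factor of 0.9 and all function names are illustrative choices.

```python
from typing import List, Tuple

# Hypothetical corridor MDP with a known model: states 0..4, state 4 is terminal.
N_STATES, GOAL, GAMMA = 5, 4, 0.9
ACTIONS = (0, 1)  # 0 = left, 1 = right

def model(s: int, a: int) -> Tuple[int, float]:
    """Known dynamics: (next_state, reward). DP assumes this model is available."""
    s_next = min(max(s + (1 if a == 1 else -1), 0), GOAL)
    return s_next, (1.0 if s_next == GOAL else 0.0)

def q_from_v(V: List[float], s: int, a: int) -> float:
    """One-step lookahead: immediate reward plus discounted value of the next state."""
    s_next, r = model(s, a)
    return r + GAMMA * V[s_next]

def value_iteration(tol: float = 1e-10) -> Tuple[List[float], List[int]]:
    V = [0.0] * N_STATES  # the terminal goal state keeps value 0
    while True:
        delta = 0.0
        for s in range(GOAL):  # sweep the non-terminal states
            best = max(q_from_v(V, s, a) for a in ACTIONS)  # Bellman optimality backup
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values
    policy = [max(ACTIONS, key=lambda a: q_from_v(V, s, a)) for s in range(GOAL)]
    return V, policy

V, policy = value_iteration()
print([round(v, 3) for v in V], policy)  # [0.729, 0.81, 0.9, 1.0, 0.0] [1, 1, 1, 1]
```

Each sweep applies the Bellman optimality update to every non-terminal state. The greedy policy read off the converged values moves right everywhere. Policy Iteration would reach the same policy on this problem, but it alternates full policy evaluation with greedy improvement instead of folding both into a single update.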

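Q-learning is a straightforward model-free RL algorithm that learns the value, called the Q-value, of taking a specific action in a particular state. Because it only needs these state-action values, it pairs naturally with neural networks in deep reinforcement learning, where a network approximates the Q-function.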

RL applications extend beyond games to robotics, autonomous vehicles, financial market trading, and healthcare. This range demonstrates RL's versatility and effectiveness in various fields.

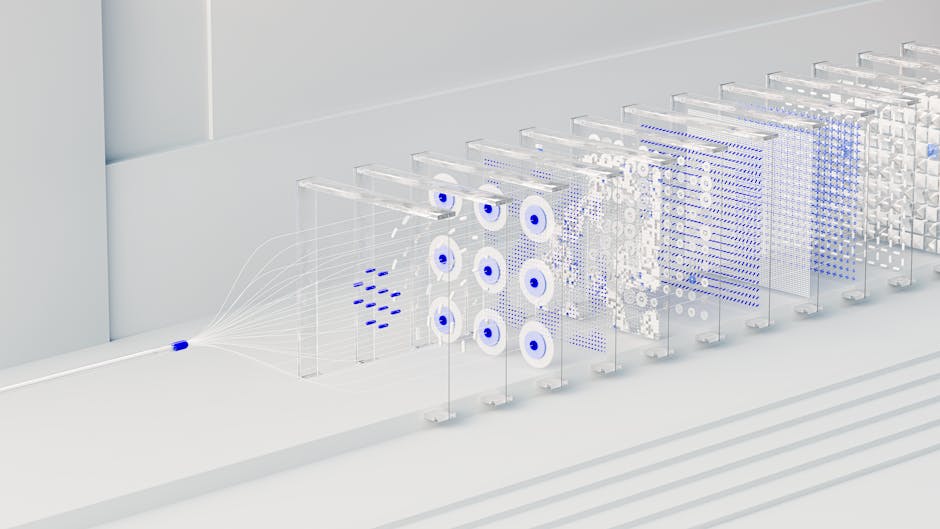

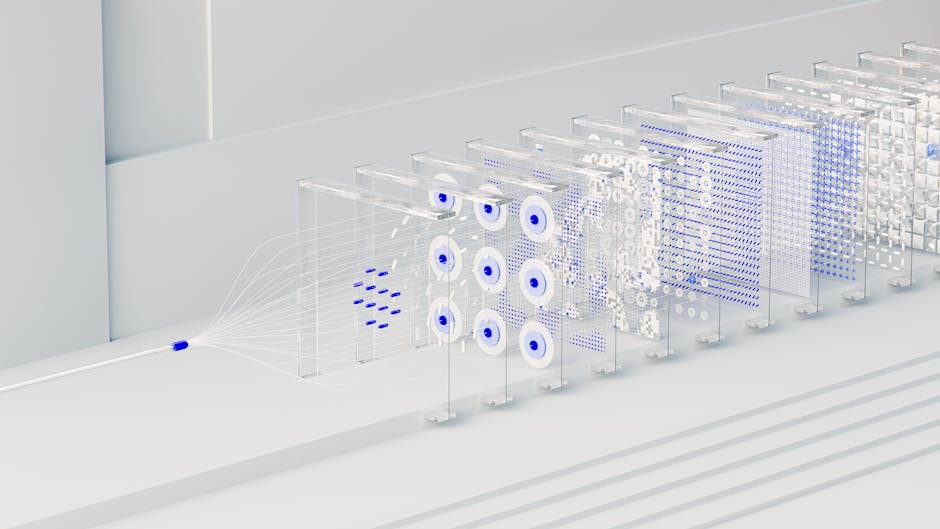

Deep Learning Integration

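Combining deep learning with reinforcement learning extends RL to problems far too large for tabular methods. Deep neural networks act as function approximators for value functions or policies. This is essential when the state space is high-dimensional (for example, raw image pixels) and storing one value per state is infeasible. This integration allows RL to handle more abstract and intricate tasks.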

Deep learning in RL:

- Approximates value functions and policies

- Enables handling of complex, high-dimensional inputs

- Allows for end-to-end learning from raw sensory inputs to optimal action outputs

Advantages of integration:

- Scalability with environment complexity

- Better generalization to unseen states

- Handling of continuous state and action spaces

- Effective feature extraction from raw data

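A central challenge is training stability, which suffers for two reasons. First, consecutive transitions are strongly correlated. Second, the learning targets shift as the network's own estimates change. Experience Replay addresses the first problem by storing past transitions and training on random samples drawn from them. Target Networks address the second by computing learning targets with a periodically updated copy of the network.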
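A replay buffer can be as simple as a bounded queue with uniform random sampling. The sketch below is a minimal, framework-free illustration. The `Transition` and `ReplayBuffer` names and the capacity are assumptions. Production DQN implementations typically also batch samples into tensors and sometimes use prioritized rather than uniform sampling.

```python
import random
from collections import deque
from typing import Any, Deque, List, NamedTuple

class Transition(NamedTuple):
    state: Any
    action: int
    reward: float
    next_state: Any
    done: bool

class ReplayBuffer:
    """Hypothetical minimal replay buffer: bounded FIFO storage plus uniform sampling."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.buffer: Deque[Transition] = deque(maxlen=capacity)  # oldest entries drop out first
        self.rng = random.Random(seed)

    def push(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Sampling uniformly at random breaks up the correlation between consecutive steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)

buf = ReplayBuffer(capacity=5)
for t in range(10):
    buf.push(Transition(state=t, action=0, reward=0.0, next_state=t + 1, done=False))
batch = buf.sample(3)
print(len(buf), sorted(tr.state for tr in batch))
```

Only the five most recent transitions survive. Each sampled batch mixes them in random order rather than replaying them in the sequence they were collected.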

The combination of deep learning and reinforcement learning has made tractable tasks once considered out of reach, such as learning to play Atari games directly from screen pixels and defeating top human players at Go. It underpins applications ranging from game-playing AI to autonomous systems and healthcare treatment planning.

Core Algorithms and Techniques

Deep Reinforcement Learning relies on several fundamental algorithms and techniques:

Q-Learning: A model-free algorithm focusing on learning action values directly. It updates Q-values using the formula:

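$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ R + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
$$

Here α is the learning rate, γ the discount factor, R the reward received after taking action a in state s, and s' the resulting state.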
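To make the update rule concrete, here is a minimal tabular Q-learning sketch with ε-greedy exploration. It runs on a hypothetical corridor environment (states 0 to 4, reward 1 for reaching state 4). The hyperparameters α = 0.5, γ = 0.9, ε = 0.1 and the environment itself are illustrative assumptions.

```python
import random
from typing import List, Tuple

# Hypothetical corridor: states 0..4, start at 0, reward 1 on reaching terminal state 4.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = left, 1 = right

def env_step(s: int, a: int) -> Tuple[int, float, bool]:
    s_next = min(max(s + (1 if a == 1 else -1), 0), GOAL)
    done = s_next == GOAL
    return s_next, (1.0 if done else 0.0), done

def q_learning(episodes: int = 300, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            # Epsilon-greedy action selection with random tie-breaking
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                best = max(Q[s])
                a = rng.choice([b for b in ACTIONS if Q[s][b] == best])
            s_next, r, done = env_step(s, a)
            # Target bootstraps from the best next action; no bootstrap at the terminal state
            target = r if done else r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
            if done:
                break
    return Q

Q = q_learning()
greedy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(GOAL)]
print(greedy)
```

Note that the target uses the maximum over next actions even when the agent's next move is exploratory. This is what makes Q-learning off-policy: it learns the value of the greedy policy while behaving ε-greedily.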

Deep Q-Networks (DQNs): Scale Q-learning to large state spaces by using a deep neural network to approximate the Q-value function. Two key stabilizing techniques are Experience Replay and Target Networks.
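The role of the target network shows up in how training targets are computed. In the sketch below, a hypothetical linear model `LinearQ` stands in for the deep network so that the example needs no ML framework. The target network is a frozen copy used only to compute y = R + γ·max_a' Q_target(s', a'), while gradient steps update the online copy. All names and numbers are illustrative. A real DQN would use a neural network and minibatches drawn from a replay buffer.

```python
import copy
from typing import List, Sequence, Tuple

# Each transition: (state features, action, reward, next-state features, done)
Batch = List[Tuple[List[float], int, float, List[float], bool]]

class LinearQ:
    """Hypothetical stand-in for a deep Q-network: Q(s, a) = w[a] . s"""

    def __init__(self, n_features: int, n_actions: int) -> None:
        self.w = [[0.0] * n_features for _ in range(n_actions)]

    def q_values(self, s: Sequence[float]) -> List[float]:
        return [sum(wi * si for wi, si in zip(row, s)) for row in self.w]

def td_targets(batch: Batch, target_net: LinearQ, gamma: float) -> List[float]:
    """y = r + gamma * max_a' Q_target(s', a'), with no bootstrap on terminal transitions."""
    return [r if done else r + gamma * max(target_net.q_values(s2))
            for _, _, r, s2, done in batch]

def sgd_step(online: LinearQ, batch: Batch, targets: List[float], lr: float) -> None:
    """Move only the online network's Q(s, a) toward y (gradient of half squared error)."""
    for (s, a, _, _, _), y in zip(batch, targets):
        error = y - online.q_values(s)[a]
        online.w[a] = [wi + lr * error * si for wi, si in zip(online.w[a], s)]

def sync_target(online: LinearQ) -> LinearQ:
    """Hard update: copy the online weights into a fresh target network."""
    return copy.deepcopy(online)

online = LinearQ(n_features=2, n_actions=2)
online.w = [[1.0, 0.0], [0.0, 2.0]]
target = sync_target(online)
batch: Batch = [([1.0, 1.0], 0, 1.0, [0.0, 1.0], False),
                ([1.0, 0.0], 1, 0.5, [1.0, 0.0], True)]
ys = td_targets(batch, target, gamma=0.9)
sgd_step(online, batch, ys, lr=0.1)
print(ys, online.w, target.w)
```

After the update, the online weights have moved but the target weights are unchanged until `sync_target` is called again. This is what keeps the targets stable between syncs.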

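Policy Gradient Methods: Optimize a parameterized policy directly by gradient ascent on the expected cumulative reward. REINFORCE (Monte Carlo Policy Gradient) is a notable example. It samples complete episodes and adjusts the policy parameters to make actions that led to high returns more likely.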
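REINFORCE is easiest to see in the simplest possible setting: a hypothetical two-armed bandit where each episode is a single step, so the Monte Carlo return G is just the immediate reward. The sketch assumes a softmax policy over action preferences θ, for which the gradient of log π(a) with respect to θ_k has the closed form 1[k = a] − π(k). The reward values (0 and 1), step size, and number of steps are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence

def softmax(prefs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over action preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]

def reinforce_bandit(rewards: Sequence[float] = (0.0, 1.0), steps: int = 1000,
                     alpha: float = 0.1, seed: int = 0) -> List[float]:
    """Train a softmax policy with REINFORCE on a hypothetical deterministic bandit."""
    rng = random.Random(seed)
    theta = [0.0] * len(rewards)  # policy parameters: one preference per action
    for _ in range(steps):
        probs = softmax(theta)
        a = rng.choices(range(len(theta)), weights=probs)[0]  # sample from the policy
        G = rewards[a]  # one-step episode: the return is the immediate reward
        for k in range(len(theta)):
            grad_log_pi = (1.0 if k == a else 0.0) - probs[k]
            theta[k] += alpha * G * grad_log_pi  # gradient ascent on expected return
    return softmax(theta)

print(reinforce_bandit())
```

With these rewards, the probability of choosing the better arm climbs toward 1. In practice, a baseline such as the critic in actor-critic methods is usually subtracted from G to reduce the variance of the updates.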

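Actor-Critic Methods: Combine policy gradient and value-based methods. In Advantage Actor-Critic (A2C), the Critic learns a value function and uses it to estimate how much better an action turned out than expected (the advantage). The Actor then adjusts the policy in the direction of that advantage.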
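Here is a minimal one-step actor-critic sketch, again on a hypothetical five-state corridor. The critic learns state values V(s) with TD(0). Its TD error δ = R + γV(s') − V(s) serves as the advantage estimate that scales the actor's policy-gradient step. This is a tabular, single-environment simplification of the idea behind A2C, which typically uses neural networks and several parallel environments. The step sizes and episode count are assumptions.

```python
import math
import random
from typing import List, Tuple

N_STATES, GOAL = 5, 4  # hypothetical corridor: states 0..4, reward 1 on reaching 4

def env_step(s: int, a: int) -> Tuple[int, float, bool]:
    s_next = min(max(s + (1 if a == 1 else -1), 0), GOAL)
    done = s_next == GOAL
    return s_next, (1.0 if done else 0.0), done

def softmax(prefs: List[float]) -> List[float]:
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]

def actor_critic(episodes: int = 2000, alpha_actor: float = 0.1, alpha_critic: float = 0.1,
                 gamma: float = 0.9, seed: int = 0) -> Tuple[List[List[float]], List[float]]:
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]  # actor: softmax action preferences per state
    V = [0.0] * N_STATES                             # critic: state-value estimates
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            probs = softmax(theta[s])
            a = 0 if rng.random() < probs[0] else 1
            s_next, r, done = env_step(s, a)
            # Critic's TD error doubles as a one-sample advantage estimate
            delta = (r if done else r + gamma * V[s_next]) - V[s]
            V[s] += alpha_critic * delta
            # Actor: policy-gradient step for a softmax policy, scaled by the TD error
            for b in range(2):
                theta[s][b] += alpha_actor * delta * ((1.0 if b == a else 0.0) - probs[b])
            s = s_next
            if done:
                break
    return theta, V

theta, V = actor_critic()
print([round(softmax(theta[s])[1], 2) for s in range(GOAL)])  # P(right) per state
```

Unlike REINFORCE, the actor updates after every step rather than waiting for the episode to finish, because the critic supplies a bootstrapped estimate of how good each transition was.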

Bellman Equations: Form the basis of RL by expressing the value of a state recursively in terms of the immediate reward and the discounted values of its successor states. The Bellman expectation equation for the state-value function under a policy π is:

$$
V^{\pi}(s) = \mathbb{E}_{\pi}\left[ R_t + \gamma V^{\pi}(s_{t+1}) \mid s_t = s \right]
$$
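To see the Bellman expectation equation at work, here is a minimal iterative policy evaluation sketch. It repeatedly applies the right-hand side of the equation as an update until the values stop changing. The corridor MDP, its deterministic model, and the uniform-random policy `pi` are hypothetical choices for illustration.

```python
from typing import List, Tuple

# Hypothetical corridor MDP: states 0..4, state 4 terminal, reward 1 on entering it.
N_STATES, GOAL, GAMMA = 5, 4, 0.9
ACTIONS = (0, 1)  # 0 = left, 1 = right

def model(s: int, a: int) -> Tuple[int, float]:
    s_next = min(max(s + (1 if a == 1 else -1), 0), GOAL)
    return s_next, (1.0 if s_next == GOAL else 0.0)

def pi(s: int, a: int) -> float:
    """Hypothetical policy to evaluate: choose left or right uniformly at random."""
    return 0.5

def bellman_rhs(V: List[float], s: int) -> float:
    """Right-hand side of the Bellman expectation equation for state s."""
    total = 0.0
    for a in ACTIONS:
        s_next, r = model(s, a)
        total += pi(s, a) * (r + GAMMA * V[s_next])
    return total

def evaluate_policy(tol: float = 1e-12) -> List[float]:
    V = [0.0] * N_STATES  # the terminal state keeps value 0
    while True:
        delta = 0.0
        for s in range(GOAL):
            new_v = bellman_rhs(V, s)
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v
        if delta < tol:
            return V

V = evaluate_policy()
print([round(v, 3) for v in V])
```

At convergence each V[s] satisfies the equation to within the tolerance. States closer to the goal are worth more even under a random policy.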

Markov Decision Processes (MDPs): Provide the essential framework for RL problems. An MDP is defined by a set of states, a set of actions, a transition function, a reward function, and a discount factor.
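These five components map naturally onto a small container type. The dataclass below is a hypothetical sketch. The `MDP` class, its field names, and the two-state machine-maintenance example are assumptions, not taken from any library. It stores transitions as probability lists and checks that they form valid distributions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class MDP:
    """Hypothetical container for a finite MDP."""
    states: List[str]
    actions: List[str]
    # Transition function: (state, action) -> list of (probability, next_state)
    transitions: Dict[Tuple[str, str], List[Tuple[float, str]]]
    # Reward function: (state, action) -> expected immediate reward
    rewards: Dict[Tuple[str, str], float]
    gamma: float  # discount factor

    def validate(self) -> None:
        """Raise ValueError if any component is missing or malformed."""
        if not 0.0 <= self.gamma <= 1.0:
            raise ValueError("gamma must lie in [0, 1]")
        for s in self.states:
            for a in self.actions:
                outcomes = self.transitions.get((s, a))
                if outcomes is None or (s, a) not in self.rewards:
                    raise ValueError(f"missing transition or reward for {(s, a)}")
                if abs(sum(p for p, _ in outcomes) - 1.0) > 1e-9:
                    raise ValueError(f"probabilities for {(s, a)} do not sum to 1")
                if any(s2 not in self.states for _, s2 in outcomes):
                    raise ValueError(f"unknown next state for {(s, a)}")

machine = MDP(
    states=["ok", "broken"],
    actions=["run", "repair"],
    transitions={
        ("ok", "run"): [(0.9, "ok"), (0.1, "broken")],
        ("ok", "repair"): [(1.0, "ok")],
        ("broken", "run"): [(1.0, "broken")],
        ("broken", "repair"): [(0.8, "ok"), (0.2, "broken")],
    },
    rewards={("ok", "run"): 1.0, ("ok", "repair"): -0.5,
             ("broken", "run"): 0.0, ("broken", "repair"): -1.0},
    gamma=0.95,
)
machine.validate()  # passes silently for a well-formed MDP
```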

These core algorithms and frameworks enable agents to adapt and excel across increasingly complex scenarios, from mastering video games to controlling autonomous vehicles[1].

Applications of Deep Reinforcement Learning

Deep Reinforcement Learning (DRL) has found practical applications across various industries:

- Gaming: DRL has achieved breakthroughs in complex games like Go (AlphaGo) and Dota 2 (OpenAI Five), demonstrating superhuman performance through self-play and strategic decision-making.

- Robotics: DRL enables robotic arms to learn grasping and manipulation skills through trial and error, improving their adaptability in manufacturing and assembly lines.

- Autonomous Vehicles: Companies like Waymo and Tesla use DRL for lane keeping, adaptive cruise control, and complex driving maneuvers, learning from simulated environments to improve real-world performance.

- Healthcare: DRL optimizes radiation therapy for cancer treatment and aids in developing personalized treatment plans based on patient data.

- Finance: DRL powers automated trading systems and robo-advisors that adapt to market dynamics and guide investment decisions.

- Energy Management: DRL-based systems manage smart grids, balancing supply and demand and integrating renewable energy sources. Google's DeepMind achieved a 40% reduction in data center cooling energy usage using DRL.

- Retail and E-commerce: DRL enhances recommendation systems and optimizes supply chains, improving customer experiences and operational efficiency.

- Natural Language Processing: DRL advances chatbots and language translation services, improving accuracy and context-awareness.

- Industrial Automation: DRL automates processes like predictive maintenance and quality control, reducing downtime and costs in manufacturing.

- Education: DRL powers personalized learning systems that adapt to individual student needs, optimizing educational outcomes.

- Space Exploration: NASA uses DRL to develop autonomous systems for space exploration, allowing rovers to navigate challenging environments with minimal human intervention.

As research advances and computational power grows, DRL will likely unlock even more innovative applications across industries.

Challenges and Limitations

Despite its potential, Deep Reinforcement Learning (DRL) faces several challenges:

- Sample efficiency: DRL algorithms require substantial data to learn effectively, which can be impractical in real-world scenarios.

- Exploration-exploitation balance: Agents must explore their environment to discover high-reward actions while also exploiting what they have already learned. Too much exploration wastes experience, while too little can trap the agent in a suboptimal policy.

- Training stability: DRL models often show large performance fluctuations because their learning targets are noisy and shift as the agent's policy and value estimates change, making the training process unpredictable.

- Reality gap: Discrepancies between simulated training environments and real-world scenarios can lead to poor transferability of trained models.
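A common baseline for the exploration-exploitation balance is ε-greedy action selection with a decaying ε. The agent acts randomly with probability ε and otherwise picks the action with the highest estimated value, and ε shrinks as learning progresses. The linear schedule and its default values below are illustrative assumptions. Exponential decay or other exploration strategies are equally valid choices.

```python
import random
from typing import Sequence

def epsilon_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """With probability epsilon explore (random action); otherwise exploit (greedy action)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

def linear_epsilon(step: int, start: float = 1.0, end: float = 0.05,
                   decay_steps: int = 10_000) -> float:
    """Anneal epsilon linearly from `start` to `end` over `decay_steps`, then hold at `end`."""
    frac = min(step / decay_steps, 1.0)
    return start + frac * (end - start)

rng = random.Random(0)
for step in (0, 5_000, 10_000, 20_000):
    eps = linear_epsilon(step)
    action = epsilon_greedy([0.1, 0.5, 0.2], eps, rng)
    print(step, round(eps, 3), action)
```

Early on, almost every action is random. Late in training, the agent mostly exploits its value estimates but still explores 5% of the time.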

Addressing these challenges through improved data generation methods, optimized exploration strategies, stable learning processes, and bridging the reality gap will be crucial for advancing DRL's practical applications.

Future Developments and Trends

The future of Deep Reinforcement Learning (DRL) is likely to include:

- Advanced algorithms: Improved versions of Proximal Policy Optimization (PPO) and Trust Region Policy Optimization (TRPO) may offer better stability and efficiency.

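- Hybrid approaches: Combining model-based and model-free methods to leverage the strengths of both, for example Dyna-style methods that use a learned model to generate additional simulated experience for a model-free learner.

- Transfer and meta-learning: Enabling models to adapt quickly to new tasks with minimal data, for example through Model-Agnostic Meta-Learning (MAML), reducing training time and resources.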

- Complex problem-solving: Tackling more intricate scenarios in autonomous systems, healthcare, and natural language processing.

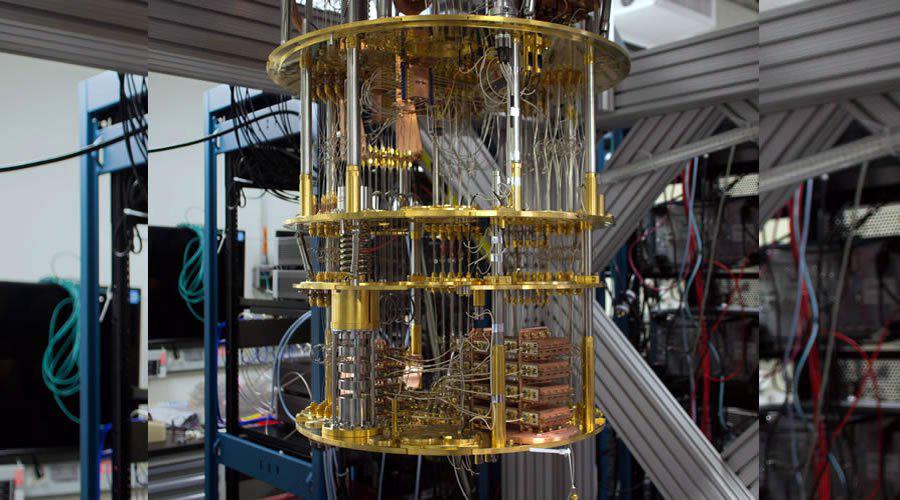

- Integration with quantum computing: Accelerating training processes and opening new possibilities in fields like cryptography and drug discovery.

- Environmental applications: Optimizing smart sensor deployment for wildlife protection and resource management.

- Ethical considerations: Ensuring alignment with human values and developing Explainable AI (XAI) for transparency in decision-making processes.

These advancements will likely expand DRL's capabilities and impact across various industries, transforming technology applications in our daily lives.

As research progresses and new techniques emerge, Deep Reinforcement Learning continues to show promise in solving complex problems and improving efficiency across numerous fields. The potential for DRL to revolutionize industries is exemplified by its success in beating human champions in games like Go[1] and its application in reducing energy consumption in data centers[2].

This article was crafted by Writio.