History and Evolution of Self-Driving Cars

Self-driving cars have progressed significantly since their inception. An early milestone was ALVINN in 1989, which used a neural network to map low-resolution sensor images directly to steering directions for road following. Today, deep learning is used throughout the autonomous driving stack, enabling vehicles to perform far more complex tasks.

Modern self-driving cars utilize deep learning algorithms to interpret data from various sensors, including:

- Cameras

- LiDAR

- RADAR

Each sensor serves a specific purpose:

- Cameras provide visual input for object detection and classification

- LiDAR offers depth perception

- RADAR helps track moving objects in various weather conditions

Deep learning has transformed perception systems in self-driving cars. Lane detection, pedestrian recognition, and traffic sign identification benefit greatly from convolutional neural networks (CNNs). Object detectors such as YOLO and EfficientDet have advanced the field by offering strong trade-offs between speed and accuracy, which matters for real-time driving.
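Detectors in the YOLO family typically output many overlapping candidate boxes for the same object, and a common post-processing step is greedy non-maximum suppression (NMS). The sketch below illustrates that step in plain Python; the box format `(x1, y1, x2, y2)`, the 0.5 IoU threshold, and the sample detections are illustrative assumptions, not taken from any specific detector's code.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1 (assumed format)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Hypothetical detections: two overlapping boxes on one pedestrian, one box on another.
boxes = [(10, 10, 50, 100), (12, 8, 52, 98), (200, 20, 240, 110)]
scores = [0.90, 0.75, 0.80]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

Production detectors usually run a vectorized version of this on the GPU, but the logic is the same: the highest score wins and heavily overlapping duplicates are discarded.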

Localization and mapping rely on techniques such as Visual Odometry and SLAM, which use sensor data to estimate the car's position and, in the case of SLAM, build a map of the environment in real time. Deep learning helps make these systems more accurate.

In vehicle planning and decision-making, neural networks help predict the behavior of nearby vehicles and pedestrians. Deep reinforcement learning further enhances decision-making by allowing self-driving systems to learn driving strategies through trial and error.

Control systems can also use deep learning to generate smooth, responsive driving maneuvers. Throughout this evolution, companies such as Tesla and Waymo, along with numerous startups, have applied deep learning to advance autonomous driving technology.

Deep Learning in Perception Systems

Integrating deep learning into perception systems has significantly improved what self-driving cars can perceive. Camera images are processed by convolutional neural networks (CNNs) that perform essential tasks such as object detection, classification, and segmentation. Cameras provide a detailed view of the environment, and CNNs can identify everything from traffic signs to pedestrians with high accuracy.
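To make "CNNs process camera images" more concrete, here is a minimal sketch of the core operation of a convolutional layer: sliding a small kernel over an image (technically a cross-correlation, which is what deep learning frameworks compute under the name convolution), followed by a ReLU. The hand-picked vertical-edge kernel and the 4x4 patch are assumptions for illustration; in a trained CNN the kernel weights are learned from data.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation (no padding, stride 1), as computed by a CNN conv layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            out[r][c] = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
    return out


def relu(x: Matrix) -> Matrix:
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in x]


# Hypothetical grayscale patch: dark on the left, bright on the right (a vertical edge,
# such as the border of a painted lane line).
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
# Hand-picked vertical-edge detector; a trained CNN would learn its own weights.
kernel = [[-1.0, 1.0], [-1.0, 1.0]]
feature_map = relu(conv2d(image, kernel))
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map responds only where the brightness changes, which is the kind of low-level cue that deeper CNN layers combine into detections of lanes, signs, and pedestrians.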

LiDAR contributes depth information by emitting laser pulses and timing their reflections to measure distances, producing detailed 3D point clouds of the environment. Because LiDAR supplies its own illumination, this data remains useful in poor lighting conditions or at night, when cameras struggle.
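The ranging principle can be written as d = c·t/2, where t is the round-trip time of the pulse and c the speed of light; dividing by two accounts for the trip to the target and back. The sketch below applies it and converts one return into a 3D point. The axis convention (x forward, y left, z up) and the function names are assumptions for illustration, since real sensors and drivers define their own frames.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_to_range(round_trip_time_s: float) -> float:
    """The pulse travels to the target and back, so range = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def to_cartesian(r: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one return (range, beam azimuth, beam elevation) to (x, y, z) in an assumed sensor frame."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (r * math.cos(el) * math.cos(az),
            r * math.cos(el) * math.sin(az),
            r * math.sin(el))


r = tof_to_range(200e-9)  # a hypothetical echo arriving after 200 ns
print(round(r, 2))  # 29.98 (metres)
print(to_cartesian(r, azimuth_deg=0.0, elevation_deg=0.0))  # a point straight ahead
```

A spinning or scanning LiDAR repeats this for many beams per second, and the resulting points form the 3D point cloud.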

RADAR excels at tracking moving objects in most weather conditions, using radio waves to measure an object's range and, through the Doppler effect, its relative speed. This makes it especially valuable in conditions where cameras and LiDAR may struggle, such as heavy fog or rain.
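The speed measurement relies on the Doppler shift of the reflected wave, f_d = 2·v·f_c / c, where v is the target's radial velocity and f_c the carrier frequency. The sketch below assumes a 77 GHz carrier, a band commonly used by automotive radar, and ignores the signal processing (such as FMCW chirp processing) that real units use to extract f_d from the received signal.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
CARRIER_HZ = 77e9  # assumed automotive radar carrier frequency


def doppler_shift(radial_velocity_mps: float, carrier_hz: float = CARRIER_HZ) -> float:
    """f_d = 2 * v * f_c / c for a target moving at radial velocity v."""
    return 2.0 * radial_velocity_mps * carrier_hz / SPEED_OF_LIGHT


def radial_velocity(doppler_shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Invert the Doppler relation: v = f_d * c / (2 * f_c)."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


shift = doppler_shift(20.0)  # a hypothetical car closing at 20 m/s (72 km/h)
print(f"{shift / 1e3:.1f} kHz")  # 10.3 kHz
print(f"{radial_velocity(shift):.1f} m/s")  # 20.0 m/s
```

Because the shift is measured directly, radar obtains relative speed in a single measurement instead of differentiating positions over several frames.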

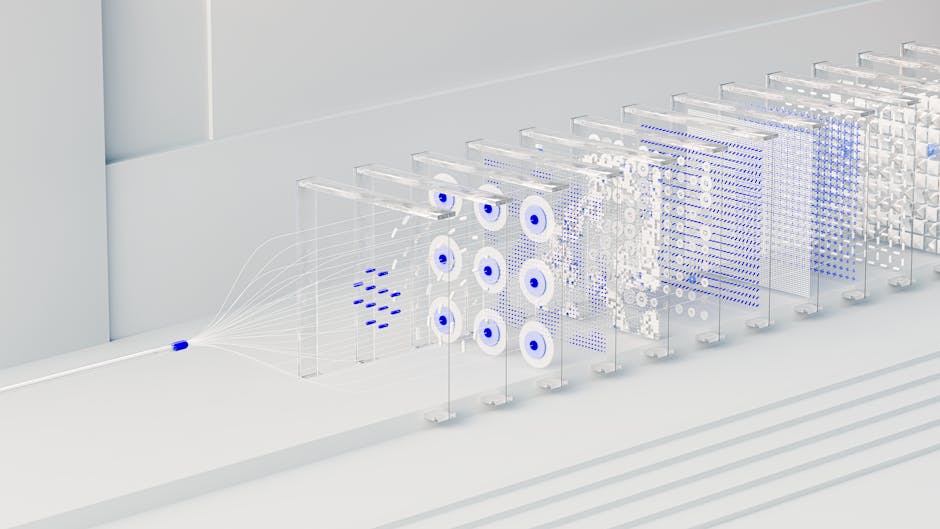

Sensor fusion combines data from cameras, LiDAR, and RADAR into a single, more complete representation of the vehicle's environment. Deep learning models can learn how to merge these disparate sources so that each sensor compensates for the others' weaknesses, making the overall perception system more robust.
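Learned fusion networks are hard to show in a few lines, so the sketch below swaps in a classical technique instead: inverse-variance weighting, which is equivalent to the Kalman filter measurement update for a static quantity. It illustrates the same idea of letting each sensor contribute according to its reliability. The readings and variances are hypothetical values chosen for illustration.

```python
from typing import List, Tuple


def fuse_estimates(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent estimates of one quantity, each given as (value, variance).

    Each estimate is weighted by 1/variance, so more precise sensors count more.
    Returns the fused value and its variance, which is smaller than any input variance.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused_value, 1.0 / total


# Hypothetical range readings (metres) to the same lead vehicle, with assumed noise variances.
lidar = (25.10, 0.01)   # precise range measurement
radar = (25.50, 0.25)   # noisier range measurement
camera = (24.00, 4.00)  # monocular depth estimate: least precise
value, variance = fuse_estimates([lidar, radar, camera])
print(f"{value:.2f} m, variance {variance:.4f}")  # 25.11 m, variance 0.0096
```

The fused estimate sits close to the LiDAR reading, while the noisier sensors still nudge it and reduce the overall uncertainty.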

CNNs form the backbone of object detection and classification in self-driving cars, and their recognition accuracy improves as they are trained on larger and more diverse datasets. By combining information from multiple sensors with the power of CNNs, self-driving cars can perceive their surroundings precisely and react appropriately.

Localization and Mapping Techniques

Localization and mapping are essential components of modern autonomous driving, directly influencing the precision and safety of self-driving cars. Localization determines the vehicle's precise position and orientation relative to its environment, while mapping builds the representation of that environment.

Simultaneous Localization and Mapping (SLAM) allows a vehicle to construct or update a map of an unknown environment while simultaneously keeping track of its location within that map. SLAM uses data from various sensors to build detailed maps, enabling the vehicle to navigate with precision.
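A full SLAM system is far too large for a short example, but the mapping half can be illustrated with an occupancy grid, one common map representation (feature-based systems such as ORB-SLAM use sparse landmarks instead). The sketch below assumes the vehicle's pose is already known, uses a 1D grid and a single beam for brevity, and uses an assumed inverse sensor model (0.7 probability of occupancy for a hit, 0.3 for a pass-through) stored in log-odds form so that repeated observations simply add up.

```python
import math
from typing import List

# Assumed inverse sensor model, in log-odds form.
L_OCC = math.log(0.7 / 0.3)   # evidence added to the cell where the beam hit
L_FREE = math.log(0.3 / 0.7)  # evidence added to cells the beam passed through


def update_beam(log_odds: List[float], hit_cell: int) -> None:
    """Update a 1D grid for one range beam starting at cell 0 (pose assumed known)."""
    for i in range(hit_cell):
        log_odds[i] += L_FREE
    log_odds[hit_cell] += L_OCC


def probability(l: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))


grid = [0.0] * 6  # log-odds 0 means p = 0.5 (unknown)
for _ in range(3):  # three consistent returns from a hypothetical obstacle in cell 4
    update_beam(grid, 4)
print([round(probability(l), 2) for l in grid])  # [0.07, 0.07, 0.07, 0.07, 0.93, 0.5]
```

Cells in front of the obstacle become confidently free, the obstacle cell confidently occupied, and the cell behind it stays unknown because the beam never reached it. In real SLAM, the pose used here would itself be estimated jointly with the map.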

Visual Odometry complements SLAM by estimating the vehicle's motion from consecutive camera frames. This technique involves tracking distinctive features across sequential image frames to estimate the vehicle's position changes over time.
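However they are computed, Visual Odometry's frame-to-frame motion estimates must be chained together to obtain the vehicle's pose. The sketch below does this in 2D (x, y, heading); the input motions are hypothetical, and the second run adds a small heading error per frame to show how errors accumulate as drift, which is the problem SLAM's map and loop closures help correct.

```python
import math
from typing import Iterable, Tuple

Pose = Tuple[float, float, float]  # (x, y, heading in radians)


def compose(pose: Pose, delta: Pose) -> Pose:
    """Apply a relative motion (dx, dy, dtheta), expressed in the vehicle frame, to a world-frame pose."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + dx * math.cos(th) - dy * math.sin(th),
            y + dx * math.sin(th) + dy * math.cos(th),
            th + dth)


def integrate(deltas: Iterable[Pose], start: Pose = (0.0, 0.0, 0.0)) -> Pose:
    """Chain frame-to-frame motion estimates into the final pose."""
    pose = start
    for delta in deltas:
        pose = compose(pose, delta)
    return pose


# Hypothetical VO output: 1 m forward then a 90-degree left turn, four times (a closed square).
x, y, th = integrate([(1.0, 0.0, math.pi / 2)] * 4)
print(f"end-point error: {math.hypot(x, y):.3f} m")  # 0.000 m

# Same motion with a 0.01 rad heading error per frame: the loop no longer closes.
bx, by, _ = integrate([(1.0, 0.0, math.pi / 2 + 0.01)] * 4)
print(f"end-point error: {math.hypot(bx, by):.3f} m")  # 0.028 m
```

Over only 4 m of travel the small error already leaves the vehicle almost 3 cm from where it started, and the error keeps growing with distance.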

Deep learning has enhanced these techniques by improving their accuracy and reliability. In SLAM, deep learning enhances feature extraction capabilities, allowing for better scene comprehension. In Visual Odometry, deep learning improves feature matching and motion estimation.

Integrating deep learning into these localization methods also strengthens how they work together. Visual Odometry supplies frame-to-frame motion estimates, while SLAM maintains a persistent map and, by recognizing previously visited places (loop closure), corrects the drift that accumulates in those estimates. Together they form an effective localization and mapping system.

Planning and Decision-Making Algorithms

Planning and decision-making algorithms in autonomous vehicles analyze environmental inputs and predict future states to determine optimal actions. These algorithms must handle numerous variables while adhering to traffic laws and safety protocols.

Reinforcement learning plays a significant role in this process, teaching models to make sequences of decisions through trial and error. In self-driving cars, reinforcement learning can be used to learn driving policies that balance safety and efficiency.
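Tabular Q-learning is one of the simplest reinforcement learning algorithms, and the sketch below applies it to a deliberately tiny, hypothetical lane-keeping task: the state is a discretized lateral offset, actions steer left, straight, or right, and the reward penalizes distance from the lane center. Real driving policies replace the table with deep networks and are often trained in simulation; the environment, reward, and hyperparameters here are assumptions for illustration only.

```python
import random
from collections import defaultdict

# Hypothetical toy task: lateral offset from the lane center in discrete steps (-2..2);
# actions steer left (-1), straight (0) or right (+1).
STATES = range(-2, 3)
ACTIONS = (-1, 0, 1)


def step(offset: int, action: int):
    """Deterministic transition; reward penalizes distance from the center."""
    new_offset = max(-2, min(2, offset + action))
    return new_offset, -abs(new_offset)


def q_learning(episodes=2000, steps=10, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q[(state, action)], initialized to 0
    for _ in range(episodes):
        s = rng.randint(-2, 2)
        for _ in range(steps):
            # Epsilon-greedy: mostly exploit the current Q-values, sometimes explore.
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: q[(s, act)])
            s_next, r = step(s, a)
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            target = r + gamma * max(q[(s_next, act)] for act in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s_next
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda act: q[(s, act)]) for s in STATES}
print(policy)  # {-2: 1, -1: 1, 0: 0, 1: -1, 2: -1}
```

The learned greedy policy steers toward the center from either side and goes straight once centered, without the rule ever being written down explicitly.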

Prediction models forecast the movements of other road users, such as vehicles, pedestrians, and cyclists. Through methods such as sequence-to-sequence learning and trajectory prediction, these systems assess potential future states of the environment, allowing the vehicle to preemptively adjust its path.
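Learned predictors such as sequence-to-sequence models are commonly compared against simple baselines. The sketch below shows one such baseline, constant-velocity extrapolation, rather than a learned model; the 10 Hz sampling rate and the pedestrian track are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def predict_constant_velocity(history: List[Point], dt: float, horizon_steps: int) -> List[Point]:
    """Extrapolate future positions from the last two observations, assuming constant velocity."""
    (x0, y0), (x1, y1) = history[-2], history[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, horizon_steps + 1)]


# Hypothetical pedestrian observed at 10 Hz (dt = 0.1 s), crossing the road at about 1.5 m/s (+y).
track = [(5.0, 0.00), (5.0, 0.15), (5.0, 0.30)]
for px, py in predict_constant_velocity(track, dt=0.1, horizon_steps=3):
    print(f"({px:.2f}, {py:.2f})")  # (5.00, 0.45), then (5.00, 0.60), then (5.00, 0.75)
```

A learned model earns its place when it beats this baseline, for example by anticipating that a pedestrian will stop at the curb or that a car will turn.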

The integration of these algorithms enables autonomous vehicles to navigate complex urban landscapes. High-level planning involves selecting an efficient route, while lower-level decision-making is devoted to executing specific tasks, such as merging lanes or responding to traffic lights.
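High-level route planning is often framed as a shortest-path search over a road graph. The sketch below uses A* with a straight-line-distance heuristic on a small hypothetical network; the node names, coordinates, and segment lengths are made up for illustration, and practical route planners typically work on far larger graphs with travel-time costs.

```python
import heapq
import math
from typing import Dict, List, Tuple

# Hypothetical road network: intersection -> (x, y) position in km, plus road segments with lengths in km.
NODES: Dict[str, Tuple[float, float]] = {
    "A": (0.0, 0.0), "B": (1.0, 0.0), "C": (2.0, 0.0), "D": (1.0, 1.0), "E": (2.0, 1.0),
}
ROADS: Dict[str, List[Tuple[str, float]]] = {
    "A": [("B", 1.0), ("D", 1.5)],
    "B": [("A", 1.0), ("C", 1.0), ("D", 1.0)],
    "C": [("B", 1.0), ("E", 1.0)],
    "D": [("A", 1.5), ("B", 1.0), ("E", 1.0)],
    "E": [("C", 1.0), ("D", 1.0)],
}


def a_star(start: str, goal: str) -> Tuple[float, List[str]]:
    def h(node: str) -> float:
        """Straight-line distance to the goal; never overestimates road distance here."""
        (x1, y1), (x2, y2) = NODES[node], NODES[goal]
        return math.hypot(x2 - x1, y2 - y1)

    frontier = [(h(start), 0.0, start, [start])]  # (f = g + h, g, node, path)
    best_cost = {start: 0.0}
    while frontier:
        _, cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        for nxt, length in ROADS[node]:
            new_cost = cost + length
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt, path + [nxt]))
    return math.inf, []


print(a_star("A", "E"))  # (2.5, ['A', 'D', 'E'])
```

The heuristic is admissible because every segment is at least as long as the straight line between its endpoints, so A* returns a shortest route while expanding fewer nodes than an uninformed search.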

The combination of reinforcement learning and predictive modeling provides self-driving cars with an intelligent navigation system that can respond to their immediate surroundings and anticipate changes. Through the continued refinement of these technologies, autonomous vehicles are becoming more reliable and safer.

Challenges and Future Directions

Current challenges in autonomous vehicle technology include:

- Sensor limitations

- High costs

- Regulatory uncertainties

Sensors can struggle in adverse conditions, and their integration remains complex and expensive. Regulatory frameworks vary across regions, creating a patchwork of requirements that hinder development and deployment.

Future trends in autonomous vehicle technology are being shaped by advances in deep learning applications. Emerging methods such as unsupervised and semi-supervised learning can reduce reliance on massive labeled datasets, cutting the cost and time of data labeling and enabling faster adaptation to new environments.
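Pseudo-labeling is one common semi-supervised technique (the text above does not name a specific method): a model trained on labeled data predicts labels for unlabeled samples, and only its most confident predictions are added back to the training set. The sketch below shows the selection step; the 0.9 threshold, the class names, and the softmax outputs are hypothetical.

```python
from typing import Dict, List, Tuple


def pseudo_label(predictions: Dict[str, Dict[str, float]], threshold: float = 0.9) -> List[Tuple[str, str]]:
    """Keep (sample_id, label) pairs whose top class probability is at least the threshold."""
    selected = []
    for sample_id, probs in predictions.items():
        label, confidence = max(probs.items(), key=lambda kv: kv[1])
        if confidence >= threshold:
            selected.append((sample_id, label))
    return selected


# Hypothetical softmax outputs of a traffic-sign classifier on unlabeled camera crops.
unlabeled = {
    "crop_001": {"stop": 0.97, "yield": 0.02, "speed_limit": 0.01},
    "crop_002": {"stop": 0.40, "yield": 0.35, "speed_limit": 0.25},
    "crop_003": {"stop": 0.03, "yield": 0.05, "speed_limit": 0.92},
}
print(pseudo_label(unlabeled))  # [('crop_001', 'stop'), ('crop_003', 'speed_limit')]
```

The ambiguous crop is left out; the selected pairs would be merged with the human-labeled data before retraining, and the threshold trades label quantity against label noise.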

Generative models can also simulate diverse driving conditions and scenarios for training. This approach can mitigate the limitations of real-world data collection by exposing self-driving systems to a wide array of corner cases and rare events.

5G networks are expected to advance vehicle-to-everything (V2X) communication, enhancing self-driving cars' ability to interact with their environment and improving traffic management, safety, and efficiency.

Addressing these challenges while leveraging advancements in deep learning will push the boundaries of autonomous vehicle technology. Collaboration among technologists, regulators, and target markets is crucial to overcome existing barriers and integrate autonomous mobility into our daily lives.


References

- Pomerleau DA. ALVINN: An autonomous land vehicle in a neural network. In: Advances in Neural Information Processing Systems. 1989:305-313.

- Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016:779-788.

- Tan M, Pang R, Le QV. EfficientDet: Scalable and efficient object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020:10781-10790.

- Mur-Artal R, Montiel JM, Tardos JD. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Transactions on Robotics. 2015;31(5):1147-1163.

- Sallab AE, Abdou M, Perot E, Yogamani S. Deep reinforcement learning framework for autonomous driving. Electronic Imaging. 2017;2017(19):70-76.