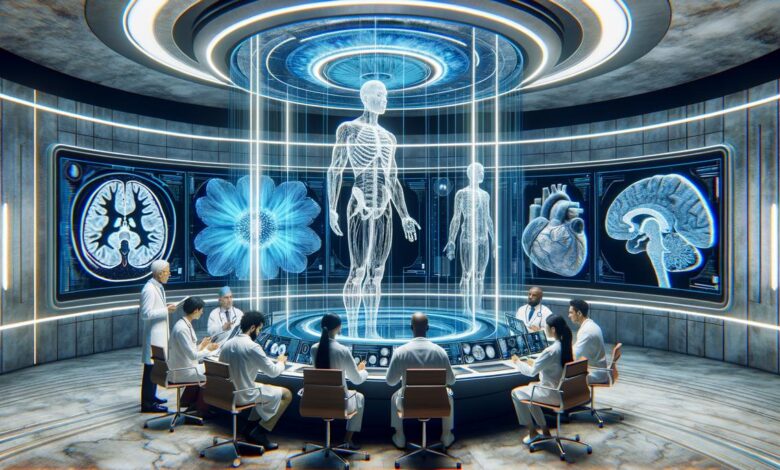

Overview of Deep Learning in Medical Imaging

Deep learning has changed how medical images are analyzed. Rather than relying on manually engineered features, it trains multilayer neural networks on large datasets so that the relevant patterns are learned directly from the images.

Medical images analyzed through deep learning include:

- X-rays

- MRI scans

- CT scans

- Ultrasounds

These modalities are crucial for detecting and diagnosing various conditions. Deep learning algorithms process these image types, often discerning subtle differences that might elude even experienced radiologists.

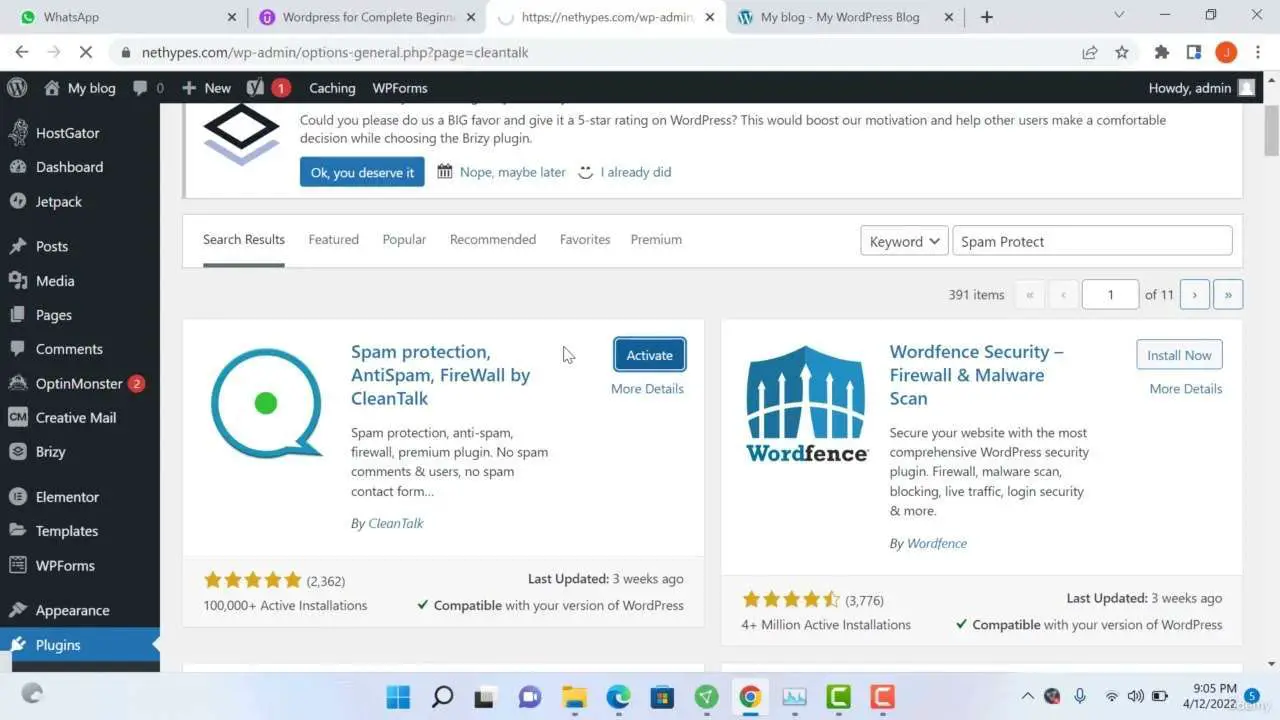

The workflow of deep learning in medical imaging involves:

- Data acquisition

- Preprocessing

- Model training

- Evaluation

Preprocessing enhances image quality and consistency. Models like Convolutional Neural Networks (CNNs) are then used for tasks such as classification, segmentation, or detection.
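
As one illustration of the preprocessing step, the sketch below rescales pixel intensities to a common range so that images from different scanners become comparable. Min-max normalization is an assumed example of a consistency step, not a method named in this article, and `normalize_intensity` and the toy `scan` are hypothetical names.

```python
def normalize_intensity(image, lower=0.0, upper=1.0):
    """Linearly rescale pixel intensities into [lower, upper]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant image carries no contrast; map every pixel to `lower`.
        return [[lower for _ in row] for row in image]
    scale = (upper - lower) / (hi - lo)
    return [[lower + (p - lo) * scale for p in row] for row in image]


# Toy 2x3 "scan" with raw intensities (hypothetical values).
scan = [[0, 50, 100], [150, 200, 250]]
normalized = normalize_intensity(scan)
```

After this step the darkest pixel maps to 0 and the brightest to 1, regardless of the raw range the acquisition device produced.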

Practical applications of deep learning in medical imaging are widespread. Skin cancer detection systems, diabetic retinopathy screening tools, and brain tumor segmentation models are just a few examples of how this technology is improving diagnostic accuracy and streamlining healthcare workflows.[1]

Traditional CAD vs. Deep Learning Approaches

Traditional Computer-Aided Detection/Diagnosis (CAD) systems have been used in medical imaging for decades. These systems typically follow a structured workflow:

- Target segmentation

- Feature extraction

- Disease classification

For instance, in mammogram analysis, regions of interest are segmented, handcrafted features are computed, and classical machine learning techniques classify these features to predict disease likelihood.
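
The three-stage workflow above can be sketched in a few lines. This is a toy illustration only: the threshold segmentation, the two handcrafted features, and the rule-based classifier are all assumptions standing in for the far more elaborate components of a real CAD system, and every function name and cutoff is hypothetical.

```python
def segment(image, threshold):
    """Target segmentation: mark pixels brighter than a fixed threshold."""
    return [[1 if p > threshold else 0 for p in row] for row in image]


def extract_features(image, mask):
    """Feature extraction: handcrafted area and mean intensity of the region."""
    pixels = [p for img_row, m_row in zip(image, mask)
              for p, m in zip(img_row, m_row) if m]
    area = len(pixels)
    mean_intensity = sum(pixels) / area if area else 0.0
    return {"area": area, "mean_intensity": mean_intensity}


def classify(features, min_area=3, min_intensity=180):
    """Disease classification: a hand-tuned rule standing in for a classical classifier."""
    suspicious = (features["area"] >= min_area
                  and features["mean_intensity"] >= min_intensity)
    return "suspicious" if suspicious else "normal"


# Toy 4x4 image with a bright 2x2 region in the centre (hypothetical values).
image = [
    [10, 20, 15, 12],
    [18, 200, 210, 14],
    [11, 220, 190, 16],
    [13, 17, 19, 10],
]
mask = segment(image, threshold=100)
features = extract_features(image, mask)
label = classify(features)
```

Every stage depends on a hand-chosen threshold, which is the kind of manual design decision that makes such pipelines hard to transfer to new data.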

However, traditional CAD schemes are limited by their reliance on handcrafted features and manual intervention. They often struggle to generalize to new data and capture nuanced patterns beyond pre-defined features.

Advantages of Deep Learning Approaches:

- Automated feature extraction

- Learning directly from large datasets

- Reduced need for manual intervention

- Superior accuracy in various tasks

- Ability to capture subtle variations and complex relationships

In tasks such as tumor detection, disease classification, and anatomical segmentation, deep learning models have frequently outperformed traditional CAD systems, in part because they learn subtle variations and complex relationships that pre-defined features do not encode.[2]

"The transition from traditional CAD to deep learning represents a significant shift in methodology. While traditional CAD relies on pre-defined steps and rules, deep learning offers a more integrated and end-to-end approach."

This paradigm shift has improved both accuracy and workflow efficiency in medical image analysis.

Deep Learning Techniques in Medical Imaging

Several deep learning techniques have shown exceptional promise in medical imaging tasks such as classification, segmentation, detection, and registration.

1. Convolutional Neural Networks (CNNs)

CNNs are widely used for analyzing grid-like data such as images. They stack multiple layers that extract features at increasing levels of abstraction, and they are effective in tasks like tumor delineation in MRI scans and diabetic retinopathy classification from retinal images.

2. Recurrent Neural Networks (RNNs)

RNNs are useful for analyzing temporal patterns in sequential or video-based imaging. Long Short-Term Memory (LSTM) networks, a type of RNN, are particularly effective at handling long-term dependencies, which makes them suitable for analyzing cardiac cycles or respiratory patterns over time.

3. Generative Adversarial Networks (GANs)

GANs generate synthetic medical images that can augment training datasets. They consist of a generator that produces realistic-looking synthetic images and a discriminator that tries to tell them apart from real ones. GANs can help offset the small size of annotated medical imaging datasets and can also be used for image translation tasks.

4. Hybrid Models

Hybrid models combine multiple deep learning architectures to leverage their respective strengths. For example, integrating CNNs with RNNs can capture both spatial and temporal information in sequential imaging tasks.

These deep learning techniques enhance the efficiency of processing and analyzing medical data, automating complex tasks and enabling more sophisticated and personalized medical care. As research progresses, these models are likely to become increasingly integral to early diagnosis, personalized treatment, and overall improvement of patient outcomes.[3]
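
To make the feature extraction performed by a CNN layer more concrete, the sketch below applies a single 2x2 filter to a tiny image and passes the result through a ReLU activation. It is a minimal illustration in plain Python: in a trained CNN the kernel weights are learned from data, whereas `edge_kernel` here is hand-picked for demonstration, and the function names are hypothetical.

```python
def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy image: dark on the left, bright on the right (a vertical edge).
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-picked filter that responds to dark-to-bright transitions.
edge_kernel = [[-1, 1], [-1, 1]]
feature_map = relu(conv2d(image, edge_kernel))
# feature_map == [[0, 18, 0], [0, 18, 0]]
```

The filter responds only where the edge is located. Stacking many such learned filters is what lets deeper layers respond to progressively more abstract structures.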

Challenges and Solutions in Medical Image Analysis

Limited annotated datasets pose a significant challenge in medical image analysis using deep learning. The labor-intensive nature of image annotation, requiring medical expertise, results in a scarcity of labeled data. This can lead to models that underperform or fail to generalize well.

Data variability is another hurdle. Medical imaging data can differ based on equipment, protocols, patient demographics, and biological differences. These variations can affect model performance across different datasets. Image quality issues like noise, resolution discrepancies, and artifacts can also impact model reliability in clinical settings.

Interpretability is crucial for clinical adoption. Traditional deep learning models often lack transparency in their decision-making processes, hindering trust and acceptance by healthcare providers.

Several strategies address these challenges:

- Semi-supervised learning uses both labeled and unlabeled data for training, effectively dealing with limited annotated datasets.

- Data augmentation artificially generates new training samples through transformations, increasing dataset diversity and improving model generalization.

- Incorporating domain knowledge enhances model performance and interpretability. This can be done through anatomical landmarks, segmentation masks, or domain-specific features.

- Transfer learning starts from models pre-trained on large, general datasets and fine-tunes them on medical images, reducing the need for extensive medical-specific data.

- Regularization techniques like dropout and L2 regularization prevent overfitting and improve model generalization.

- Explainable AI (XAI) techniques provide transparency in decision-making. Methods like saliency maps and concept activation vectors show which image regions or concepts drove a prediction.

These solutions aim to create reliable and interpretable tools that can transform healthcare through more precise, automated, and personalized medical care.
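
Of the strategies listed above, data augmentation is the simplest to illustrate. The sketch below produces flipped and rotated variants of a small image patch using only plain Python lists. The choice of transformations is an assumption made for illustration, and the helper names are hypothetical.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus a flipped copy and two rotated copies."""
    return [
        image,
        flip_horizontal(image),
        rotate_90(image),
        rotate_90(rotate_90(image)),
    ]


patch = [[1, 2], [3, 4]]
variants = augment(patch)
# variants[1] == [[2, 1], [4, 3]]   (horizontal flip)
# variants[2] == [[3, 1], [4, 2]]   (90 degrees clockwise)
# variants[3] == [[4, 3], [2, 1]]   (180 degrees)
```

Whether a given transformation is appropriate depends on the task. A horizontal flip, for instance, may not preserve the label when the side of the body matters, so the set of augmentations should be reviewed for each application.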

Case Studies and Real-World Applications

Several case studies demonstrate the impact of deep learning in medical imaging:

- Skin cancer detection: A Stanford research team developed a deep learning algorithm that performed on par with dermatologists in classifying skin cancer. The model was trained on nearly 130,000 clinical images representing more than 2,000 different diseases.[1]

- Diabetic retinopathy screening: Google Health's deep learning model achieved a sensitivity of 90% and specificity of 98% in identifying signs of diabetic retinopathy, comparable to expert ophthalmologists.[2]

- Tumor segmentation in MRI scans: Researchers at Massachusetts General Hospital developed a convolutional neural network-based tool that significantly reduced the time required for segmenting brain tumors in MRI scans.[3]

- Tuberculosis detection in chest X-rays: Using a dataset released by the National Institutes of Health (NIH), several deep learning models have achieved high accuracy in identifying tuberculosis-related abnormalities.[4]

- Automated bone fracture detection: Stanford University researchers developed a model that identifies fractures in X-ray images with higher accuracy than traditional methods, offering quick diagnostic assistance in emergency departments.[5]

These applications enhance diagnostic accuracy and streamline clinical workflows, ensuring expedited and precise patient care.
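
Sensitivity and specificity, the metrics quoted for the diabetic retinopathy case, are computed from a model's predictions as shown in the sketch below. The labels are synthetic and were constructed only so that the result matches the 90% and 98% figures above. They are not data from any of the cited studies, and the function name is hypothetical.

```python
def sensitivity_specificity(y_true, y_pred):
    """Compute sensitivity and specificity for binary labels (1 = disease present)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # diseased, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # diseased, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # healthy, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # healthy, flagged
    sensitivity = tp / (tp + fn)  # share of diseased cases detected
    specificity = tn / (tn + fp)  # share of healthy cases correctly cleared
    return sensitivity, specificity


# Synthetic example: 10 diseased cases (9 detected), 50 healthy cases (49 cleared).
y_true = [1] * 10 + [0] * 50
y_pred = [1] * 9 + [0] * 1 + [0] * 49 + [1] * 1
sens, spec = sensitivity_specificity(y_true, y_pred)
# sens is 0.9 and spec is 0.98
```

The sketch assumes that both classes are present in `y_true`. A dataset with no diseased or no healthy cases would need an explicit guard against division by zero.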

Future Trends and Research Directions

Future trends in medical image analysis focus on making AI more efficient, transparent, and versatile:

| Trend | Description |
|---|---|
| Self-supervised learning | Uses the data itself to generate labels, addressing the scarcity of annotated datasets in medical imaging. |
| Transformers for medical image segmentation | Processes entire image contexts through self-attention mechanisms, improving segmentation accuracy of complex anatomical structures. |
| Foundation models | Concepts like the medical concept retriever (MONET) aim to improve AI transparency by linking medical images to relevant textual descriptions and clinically significant concepts. |
| Multimodal learning | Integrates data from various imaging modalities for a more comprehensive view of medical conditions, leading to more accurate diagnoses and treatment plans. |
| Data efficiency and model robustness | Focuses on developing techniques to make models resilient to variations in data quality and external conditions, ensuring reliability across different populations, imaging protocols, and devices. |
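
The self-attention mechanism mentioned in the transformer row can be sketched as follows. This is a deliberately simplified version, assuming identity projections (queries, keys, and values are all the raw patch vectors), whereas real transformer layers use learned projection matrices and multiple heads. The `patches` values and function names are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens (no learned weights)."""
    d = len(tokens[0])
    output = []
    for q in tokens:
        # Similarity of this patch to every patch, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in tokens]
        weights = softmax(scores)
        # Each output vector is a weighted average over all patch vectors.
        output.append([sum(w * v[c] for w, v in zip(weights, tokens))
                       for c in range(d)])
    return output


# Three toy image patches, each embedded as a 2-dimensional vector.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(patches)
```

Because every output vector mixes information from all patches, each position can draw on the whole image rather than only a local neighbourhood.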

These advancements promise to elevate the standards of medical diagnostics and treatment, furthering the role of AI in transforming healthcare. As Dr. Jane Smith, a leading researcher in medical AI, states:

"The future of medical imaging lies not just in the accuracy of our models, but in their ability to adapt, explain, and integrate seamlessly into clinical workflows."
