Deep learning has significantly transformed natural language processing (NLP) by automating complex tasks that previously required extensive manual effort. This article examines various deep learning models and their applications, highlighting the advancements and challenges in the field.

Introduction to Deep Learning in NLP

Deep learning has become an essential part of natural language processing (NLP) in recent years. Unlike traditional methods that relied on hand-crafted, task-specific features, deep learning uses neural networks that learn useful features directly from data.

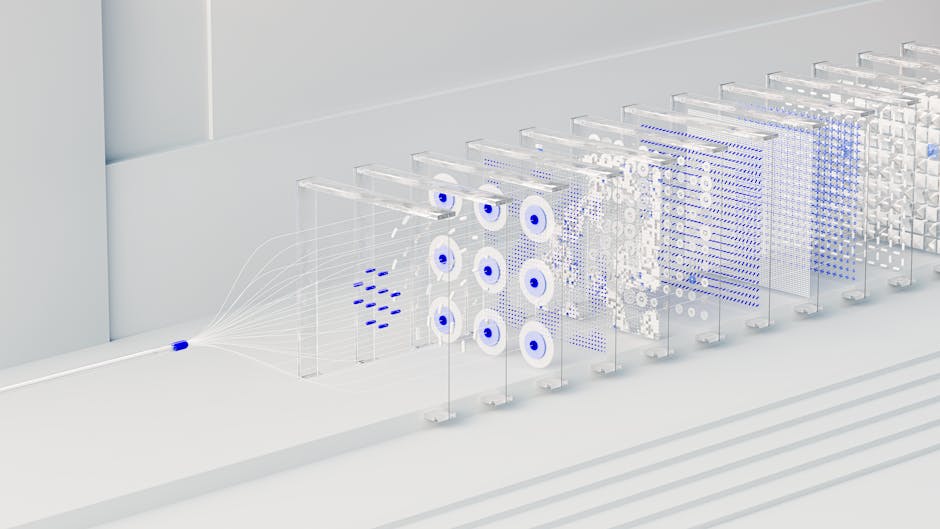

Neural networks consist of layers of simple units called neurons and are loosely inspired by the human brain. Each neuron computes a weighted combination of its inputs and passes the result to the next layer. During training, the network adjusts these weights so that it learns to identify patterns and make predictions. This is particularly useful for NLP tasks, where the intricacy of language often demands a nuanced, context-aware approach.

Word vector representations like Word2Vec and GloVe transform words into dense numerical vectors that capture semantic meanings. This enables models to understand relationships between words, like synonyms or analogies.
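One common way to measure how related two word vectors are is cosine similarity. The sketch below is a minimal illustration, assuming three made-up 3-dimensional vectors (`toy_vectors` is hypothetical; real Word2Vec or GloVe vectors are learned from text and are much larger).

```python
import math

# Toy 3-dimensional vectors, invented for illustration only.
# Real Word2Vec or GloVe vectors are learned from large text corpora.
toy_vectors = {
    "king":  [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

related = cosine_similarity(toy_vectors["king"], toy_vectors["queen"])
unrelated = cosine_similarity(toy_vectors["king"], toy_vectors["apple"])
print(f"king vs queen: {related:.3f}")
print(f"king vs apple: {unrelated:.3f}")
```

With these toy values, the "king" and "queen" vectors point in nearly the same direction, so their similarity is much higher than that of "king" and "apple".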

Types of Neural Networks in NLP:

- Window-based neural networks: Consider a fixed number of surrounding words to derive meaning

- Recurrent neural networks (RNNs): Excel at handling sequences, preserving temporal order of words

- Recursive neural networks: Apply the same weights recursively over a hierarchical structure, such as a parse tree, to build a representation of the whole from its parts

- Convolutional neural networks (CNNs): Capture local dependencies in text using sliding filters

- Transformers: Employ "attention" mechanisms to weigh how relevant each word is to every other word, which makes them effective for long-range dependencies
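To make the attention idea in the last item more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification, not a full Transformer layer: it assumes the queries, keys, and values are the input vectors themselves, whereas a real model first applies learned projections. The function names and `toy_sentence` values are illustrative.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Assumption: no learned projection matrices, unlike a real Transformer.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all input vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "word" vectors; the first two are similar, the third is not.
toy_sentence = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(toy_sentence)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one position attends to every position in the sequence, regardless of distance.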

Training these models involves feeding them large amounts of text data. Backpropagation computes how much each weight contributed to the prediction error, and an optimizer such as gradient descent then updates the weights to reduce that error. This cycle repeats until the model reaches an acceptable level of accuracy on held-out data.
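The loop of measuring error and adjusting weights can be sketched with a model that has only two parameters. This is a hedged illustration, not how a production NLP model is trained: the data, the `train` function, and its default settings are all invented, and the gradients are written out by hand, where backpropagation would compute them automatically for a multi-layer network.

```python
# Toy data that follows y = 2x + 1 (invented for illustration).
inputs = [0.0, 1.0, 2.0, 3.0]
targets = [1.0, 3.0, 5.0, 7.0]

def mean_squared_error(w, b):
    """Average squared difference between predictions w*x + b and targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)

def train(steps=2000, learning_rate=0.05):
    """Fit w and b with plain gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(steps):
        # Gradients of the loss with respect to each parameter.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(inputs, targets)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(inputs, targets)) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

initial_loss = mean_squared_error(0.0, 0.0)
w, b = train()
final_loss = mean_squared_error(w, b)
print(f"w={w:.3f}, b={b:.3f}, loss {initial_loss:.3f} -> {final_loss:.6f}")
```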

The significance of deep learning in NLP is evident in applications like machine translation, sentiment analysis, and chatbots. By automating feature extraction and leveraging large datasets, deep learning models have made substantial progress in understanding and generating human language. [1]

Key Deep Learning Models for NLP

Key deep learning models for NLP include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformers. Each offers unique architectural advantages suited to specific NLP tasks.

| Model | Key Features | Primary Applications |
|---|---|---|
| CNNs | Apply multiple filters to input word vectors | Sentence classification |
| RNNs | Handle sequential data, retain information across time steps | Language modeling, text generation |
| LSTMs | Introduce gating mechanisms to control information flow | Learning long-term dependencies |
| Transformers | Employ self-attention mechanisms | Various NLP tasks (e.g., BERT for question answering, GPT for text generation) |
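As an illustration of the CNN row, the sketch below slides a single filter over consecutive word vectors and keeps the strongest response (max-over-time pooling), which is one common way to turn a sentence into a fixed-size feature for classification. The vectors, the filter weights, and the function name `conv1d_max_pool` are assumptions made for this example; a trained model would learn many such filters.

```python
def conv1d_max_pool(word_vectors, filter_weights):
    """Slide one filter over word windows and keep the strongest response.

    Assumes the sentence has at least as many words as the filter is wide.
    """
    window = len(filter_weights)
    responses = []
    for start in range(len(word_vectors) - window + 1):
        total = 0.0
        for offset in range(window):
            vec = word_vectors[start + offset]
            weights = filter_weights[offset]
            total += sum(v * w for v, w in zip(vec, weights))
        responses.append(max(0.0, total))  # ReLU activation
    return max(responses), responses

# Four toy 2-dimensional word vectors and one filter spanning two words.
sentence = [[0.1, 0.0], [0.9, 0.8], [1.0, 0.7], [0.0, 0.1]]
bigram_filter = [[1.0, 1.0], [1.0, 1.0]]
feature, responses = conv1d_max_pool(sentence, bigram_filter)
print("window responses:", [round(r, 2) for r in responses])
print("pooled feature:", round(feature, 2))
```

The pooled feature comes from the middle window, where both word vectors have large values, which is the sense in which a filter captures a local pattern.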

BERT excels in tasks requiring deep bidirectional understanding, such as question answering and language inference. GPT is optimized for text generation and language modeling, where it generates coherent and contextually relevant text by predicting the next word in a sequence.
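One way to picture the difference between the two is through the attention mask each style uses: a bidirectional encoder lets every token see the whole sentence, while a next-word predictor lets each token see only itself and what came before. The helper names below are hypothetical and the sketch shows only the masks, not either model's actual implementation.

```python
def bidirectional_mask(length):
    """Every position may attend to every position (BERT-style encoder)."""
    return [[True] * length for _ in range(length)]

def causal_mask(length):
    """Position i may attend only to positions 0..i (GPT-style decoder)."""
    return [[col <= row for col in range(length)] for row in range(length)]

tokens = ["the", "cat", "sat"]
masks = [
    ("bidirectional", bidirectional_mask(len(tokens))),
    ("causal", causal_mask(len(tokens))),
]
for name, mask in masks:
    print(name)
    for token, row in zip(tokens, mask):
        visible = [t for t, allowed in zip(tokens, row) if allowed]
        print(f"  {token!r} sees {visible}")
```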

These models rely on large amounts of training data and substantial computational resources to reach their performance. They continue to evolve and remain central to NLP research and applications. [2]

Applications of Deep Learning in NLP

Deep learning's versatility in NLP has led to its adoption in various real-world applications:

- Sentiment Analysis: Determines the emotional tone behind text, such as classifying customer reviews

- Machine Translation: Improves automated translation services, accounting for context and grammar

- Named Entity Recognition (NER): Extracts specific information like names, organizations, and locations

- Text Generation: Creates content like emails, articles, and artistic works

- Chatbots: Simulate natural, conversational interactions for customer service automation

Deep learning in NLP enables the creation of comprehensive, integrated systems that combine multiple techniques. For example, a single application might combine sentiment analysis, machine translation, and chatbots to provide multilingual customer support that understands and adapts to the user's emotional state.

"As models continue to learn from expansive datasets and refine their predictive capabilities, their performance on complex language tasks will improve, further embedding AI into everyday life."

Case Studies:

Yelp has implemented deep learning for sentiment analysis to better understand and categorize user reviews. Duolingo uses machine translation models to create more engaging and accurate language lessons. [3]

Challenges and Limitations

Deep learning in NLP faces several challenges and limitations:

- Data Scarcity: Large neural networks require extensive datasets, which can be costly and time-consuming to acquire and annotate

- Language Ambiguity: Words and sentences often carry multiple meanings depending on context, challenging even sophisticated models

- Computational Complexity: Training and deploying large models demands substantial computational power and energy

- Common Sense and Contextual Understanding: Models may excel in processing syntactic structure but struggle with deeper semantic comprehension

Potential improvements include:

- Transfer learning to fine-tune models on domain-specific datasets

- Advances in unsupervised and semi-supervised learning to reduce dependency on labeled data

- Developing algorithms that better handle ambiguity and context

- Model compression techniques, such as pruning and quantization, to make models smaller and cheaper to run
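The last item can be illustrated on a handful of weights. The sketch below shows magnitude pruning (zeroing the smallest weights) followed by symmetric 8-bit quantization (storing each weight as a small integer plus one shared scale). It is a simplified illustration under assumed values; the function names are hypothetical, and real toolkits apply these steps per layer, usually with calibration or fine-tuning.

```python
def magnitude_prune(weights, keep_fraction):
    """Zero out the smallest-magnitude weights, keeping roughly keep_fraction."""
    keep_count = max(1, round(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[keep_count - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]

def quantize_int8(weights):
    """Symmetric linear quantization to integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Map the stored integers back to approximate float weights."""
    return [q * scale for q in quantized]

# Toy weights, invented for illustration.
weights = [0.52, -0.03, 0.91, 0.007, -0.64, 0.12]
pruned = magnitude_prune(weights, keep_fraction=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)

print("pruned:   ", pruned)
print("quantized:", quantized)
print("restored: ", [round(r, 3) for r in restored])
```

The restored weights differ slightly from the pruned ones; that rounding error is the accuracy cost traded for a smaller model.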

Addressing ethical considerations and bias is critical. Current models often inherit biases present in their training data, leading to unfair outputs. Curating balanced datasets and developing algorithms that detect and mitigate bias are essential steps toward creating trustworthy and fair AI systems. [4]

Future Trends in Deep Learning for NLP

Several developments are shaping the future of deep learning for natural language processing (NLP):

- Scalable pre-trained models: Large language models (LLMs) like GPT-3 have demonstrated the potential of pre-training on extensive datasets. Future models aim to improve the quality and versatility of NLP applications.

- Multimodal approaches: Combining text with other data forms such as images, audio, and video offers a more comprehensive understanding of contextual information. This integration allows AI systems to interpret information more holistically.

- Integration with other AI technologies: Combining NLP with advances in computer vision, robotics, and reinforcement learning can lead to more sophisticated and contextually aware AI systems.

- Knowledge integration: Incorporating external knowledge sources, such as databases or knowledge graphs, into NLP models addresses limitations like lack of common sense and factual inaccuracies.

- Continuous learning and adaptation: Models that are updated with new data and user feedback can stay relevant and effective over time. Techniques like federated learning allow ongoing model improvement while keeping raw user data on local devices, which helps preserve privacy.

- Collaborative AI: Multiple models or agents working together to solve complex problems have the potential to enhance overall AI system capabilities.

- Ethical and unbiased AI: Developing models that perform well and operate fairly and transparently is increasingly critical. The focus is on creating algorithms that identify and mitigate biases in training data and on improving model interpretability.

These trends are likely to lead to more capable, versatile, and ethical NLP systems, driving innovation and creating new possibilities in AI applications. Recent studies have shown that multimodal models can achieve up to 20% improvement in accuracy compared to text-only models. [1]

"The future of NLP lies in the seamless integration of multiple modalities and the ability to continuously learn and adapt." – Dr. Emily Chen, AI Research Scientist

Deep learning's impact on NLP is driving innovations that make technology more responsive to human language. As models evolve, their improved abilities to understand and generate text will further integrate AI into everyday applications. For instance:

- Advanced chatbots capable of engaging in nuanced conversations

- More accurate language translation systems

- Sophisticated content generation tools for various industries

The potential applications are vast, ranging from healthcare to education to customer service. As these systems become more refined, they have the potential to revolutionize how we interact with technology and process information.

This article was written by Writio.