Understanding Interpretability and Explainability

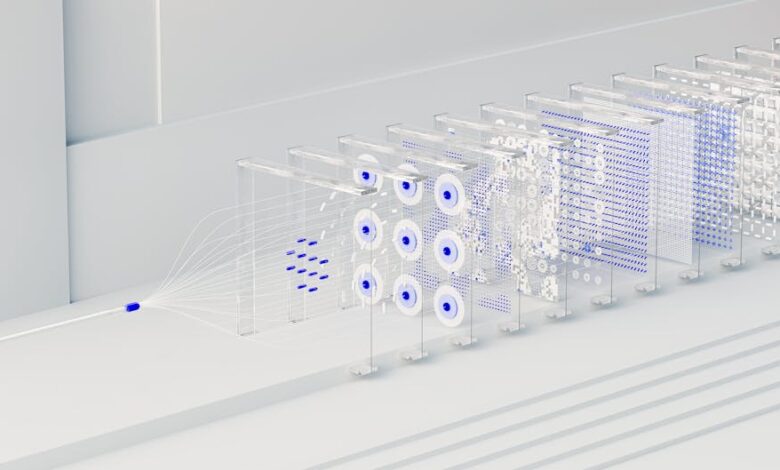

Interpretability and explainability are two related properties that make AI systems easier to understand and trust. Interpretability concerns how well a person can follow a model's inner workings, much like examining a complex machine piece by piece. Explainability concerns communicating why a model reached a particular decision in plain language, so that a broader audience can follow it.

These concepts are crucial for several reasons:

- In critical scenarios, interpretability allows experts to inspect how a model arrived at its output

- Explainability breaks down decisions for non-experts

- Both help build trust in AI systems by making their decision-making processes more transparent

Various methods improve interpretability and explainability, including:

- Visualization tools

- Breaking down complex models into simpler components

- Example-based explanations

These approaches are essential in fields like medicine, where AI decisions can have significant impacts.

Importance in High-Stakes Industries

In high-stakes industries like healthcare and finance, interpretability and explainability are vital. For instance, a doctor relying on an AI model for treatment decisions needs to understand its reasoning to ensure it aligns with medical practices. In finance, transparency in AI-driven loan application processes is crucial for fairness and compliance.

These features address the fundamental need for trust and accountability, especially in sectors where AI-driven decisions are becoming more prevalent. Regulatory bodies often require traceable decision-making processes for AI systems, making interpretability and explainability essential for compliance.

As industries continue to integrate AI, interpretability and explainability will not only build trust but also support innovation, because professionals who understand how a model behaves are better placed to use it well.

Techniques for Enhancing Explainability

Several techniques are advancing explainability in AI:

- LIME (Local Interpretable Model-agnostic Explanations): Perturbs the input around a single prediction and fits a simple, interpretable surrogate (such as a sparse linear model) to the model's responses, explaining that one decision rather than the model as a whole.

- SHAP (SHapley Additive exPlanations): Uses Shapley values from cooperative game theory to assign each feature a contribution to a prediction. The contributions add up to the difference between the prediction and a baseline output.

- Visualization methods: Include heat maps and saliency maps, which visually represent the most influential aspects of AI decisions.
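To make the local-surrogate idea behind LIME concrete, here is a minimal sketch using only NumPy. It is not the `lime` library's implementation: the `black_box` function, the Gaussian perturbation scale, and the kernel width are all assumptions chosen for illustration, and the real method adds steps such as interpretable feature representations and feature selection.

```python
import numpy as np

def black_box(X):
    """Hypothetical opaque model: a nonlinear function of two features."""
    return np.sin(X[:, 0]) + X[:, 1] ** 2

def local_surrogate(predict, x, n_samples=2000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around the single point x."""
    rng = np.random.default_rng(seed)
    # Sample perturbed copies of the instance with small Gaussian noise.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    y = predict(Z)
    # Weight each sample by its closeness to x (exponential kernel).
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / (kernel_width ** 2))
    # Weighted least squares: scale rows by sqrt(w); first column is the intercept.
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]

x0 = np.array([0.0, 1.0])
intercept, weights = local_surrogate(black_box, x0)
print("local feature weights:", weights)
```

Near `x0`, the fitted weights should land close to the model's local slopes (about 1 for the first feature and about 2 for the second), which is the "explanation" for this one prediction. A different `x0` would generally give different weights, which is why such explanations are local.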

These techniques bridge the gap between complex model operations and human understanding, making AI systems more predictable and reliable. They are particularly valuable in sectors where decisions can have significant consequences, promoting confidence by demonstrating how AI decisions stem from understandable factors.
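The feature-attribution idea behind SHAP can be illustrated with an exact Shapley value computation on a toy model. This is a sketch under stated assumptions, not the `shap` library's API: the `model`, the input `x`, and the all-zero `baseline` are hypothetical, and "removing" a feature is approximated by swapping in its baseline value.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values by enumerating every coalition of the other features."""
    players = list(range(n_features))
    phi = [0.0] * n_features
    for i in players:
        others = [p for p in players if p != i]
        for size in range(len(others) + 1):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi

def model(x):
    """Hypothetical model: one additive term and one interaction term."""
    return 3.0 * x[0] + 2.0 * x[1] * x[2]

x = [1.0, 2.0, 3.0]          # instance to explain (illustrative values)
baseline = [0.0, 0.0, 0.0]   # reference input standing in for "feature absent"

def value_fn(coalition):
    """Model output with coalition features taken from x and the rest from baseline."""
    mixed = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
    return model(mixed)

phi = shapley_values(value_fn, len(x))
print(phi)       # approximately [3.0, 6.0, 6.0]
print(sum(phi))  # matches model(x) - model(baseline), up to rounding
```

The first feature receives its additive effect, while the interaction term is split evenly between the two features that share it. The attributions sum to the gap between the prediction and the baseline output, which is the additivity property that gives SHAP its name.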

Challenges and Limitations

Despite the benefits of explainable AI, several challenges persist:

- Deep learning models are often 'black boxes': with many parameters and stacked nonlinear layers, their internal computations are difficult to inspect directly.

- There is often a trade-off between model complexity and interpretability. As models become more intricate to boost accuracy, they tend to become harder to explain.

- The lack of transparency raises questions about the reliability and stability of AI decisions, especially in high-stakes industries.

- Techniques that enhance explainability can be computationally intensive, potentially creating bottlenecks in real-time applications.

- Balancing privacy with interpretability is challenging, particularly when models are trained on sensitive data.
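The computational cost mentioned in the list above can be illustrated with Shapley-style attribution, where an exact answer requires scoring every subset of features. The sketch below assumes a hypothetical additive `value_fn` over 20 features and swaps in a common workaround, averaging marginal contributions over a fixed number of random feature orderings, so that cost is set by a sampling budget rather than by the number of subsets.

```python
import random

def sampled_shapley(value_fn, n_features, n_permutations=200, seed=0):
    """Estimate Shapley values by averaging over random feature orderings."""
    rng = random.Random(seed)
    phi = [0.0] * n_features
    for _ in range(n_permutations):
        order = list(range(n_features))
        rng.shuffle(order)
        coalition = set()
        prev = value_fn(coalition)
        for i in order:
            # Add feature i and record how much the value changed.
            coalition.add(i)
            cur = value_fn(coalition)
            phi[i] += cur - prev
            prev = cur
    return [p / n_permutations for p in phi]

# Hypothetical additive "model": each feature contributes a fixed amount.
feature_weights = [float(k) for k in range(1, 21)]

def value_fn(coalition):
    return sum(feature_weights[j] for j in coalition)

n = len(feature_weights)
estimates = sampled_shapley(value_fn, n)

exact_subsets = 2 ** n        # coalitions an exact computation must score
sampled_calls = 200 * (n + 1) # model evaluations used by the estimate above
print(exact_subsets, sampled_calls)
```

For 20 features the exact approach scores over a million coalitions, while the sampled estimate uses a few thousand evaluations. For this additive toy function the estimate recovers each weight; for models with feature interactions it is only approximate, and tightening it means spending more evaluations, which is where real-time applications can run into trouble.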

These challenges require ongoing efforts to bridge the gap between high-performing AI models and human understanding, ensuring that AI remains both effective and trustworthy.

Future Directions and Regulatory Compliance

The future of explainable AI (XAI) is likely to bring new methods and tools to address the complexities of deep learning models. Researchers are exploring innovative ways to make AI systems more transparent without sacrificing their capabilities.

Regulatory frameworks, such as the EU AI Act, are shaping the development of AI by emphasizing transparency and accountability. These regulations are driving the creation of standardized practices and benchmarks for AI transparency.

"80% of businesses cite the ability to determine how their model arrived at a decision as a crucial factor."

The push for explainability is transforming challenges into opportunities for innovation. Organizations that embrace these developments may gain a competitive advantage by integrating AI ethically and effectively into their operations.

As AI continues to evolve, the approach to interpretability and explainability must adapt to ensure these powerful tools are used responsibly across all sectors.

In high-stakes fields, understanding AI's decision-making process is crucial. By making AI's workings clearer, we can build trust and encourage responsible use, ensuring that these powerful tools are both effective and transparent.


- IBM. Global AI Adoption Index. 2022.

- Adadi A, Berrada M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access. 2018;6:52138-52160.

- Bibal A, Lognoul M, de Streel A, Frénay B. Legal Requirements on Explainability in Machine Learning. Artificial Intelligence and Law. 2021;29:149-169.

- Doshi-Velez F, Kim B. Towards A Rigorous Science of Interpretable Machine Learning. arXiv preprint arXiv:1702.08608. 2017.

- Miller T. Explanation in Artificial Intelligence: Insights from the Social Sciences. Artificial Intelligence. 2019;267:1-38.