Understanding Autoencoders in Deep Learning: A Comprehensive Guide

In deep learning, autoencoders are neural network architectures widely used for unsupervised learning tasks. They have become a standard tool for data compression, feature extraction, and generative modeling. In this comprehensive guide, we’ll dive deep into autoencoders, exploring their architecture, types, applications, and implementation.

What is an Autoencoder?

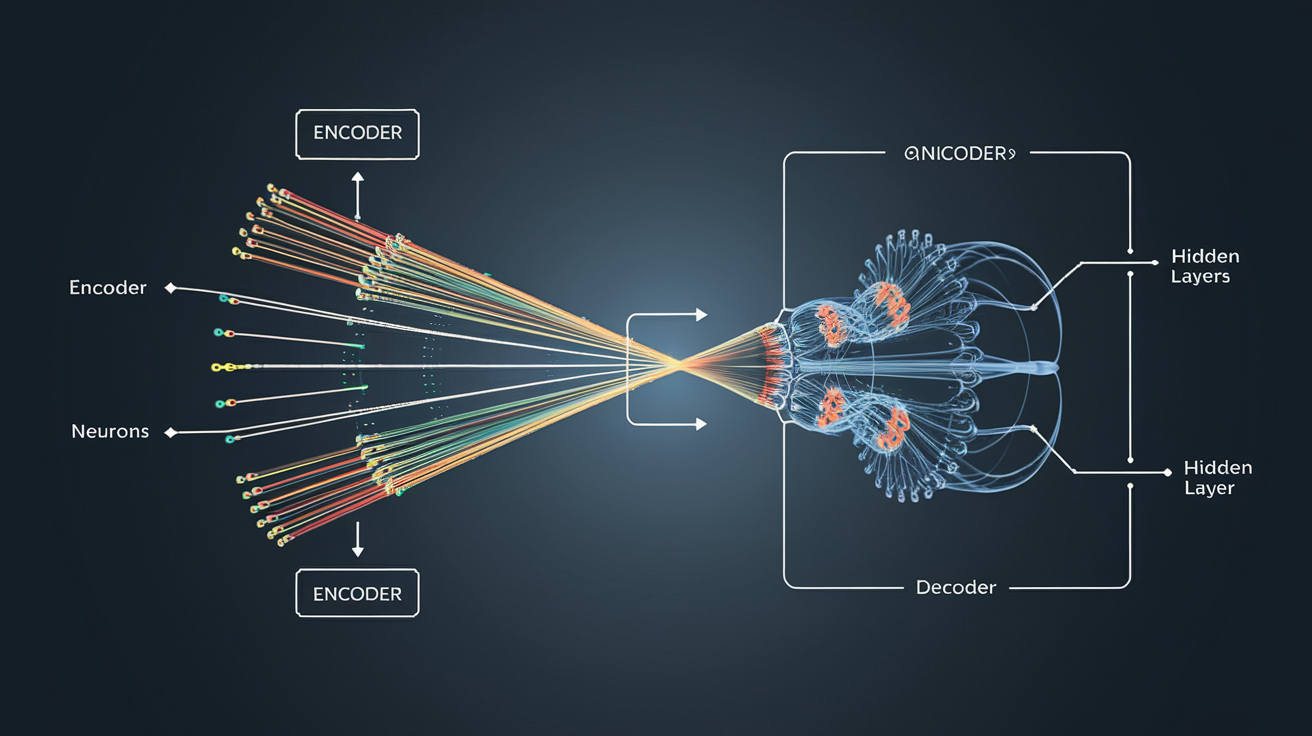

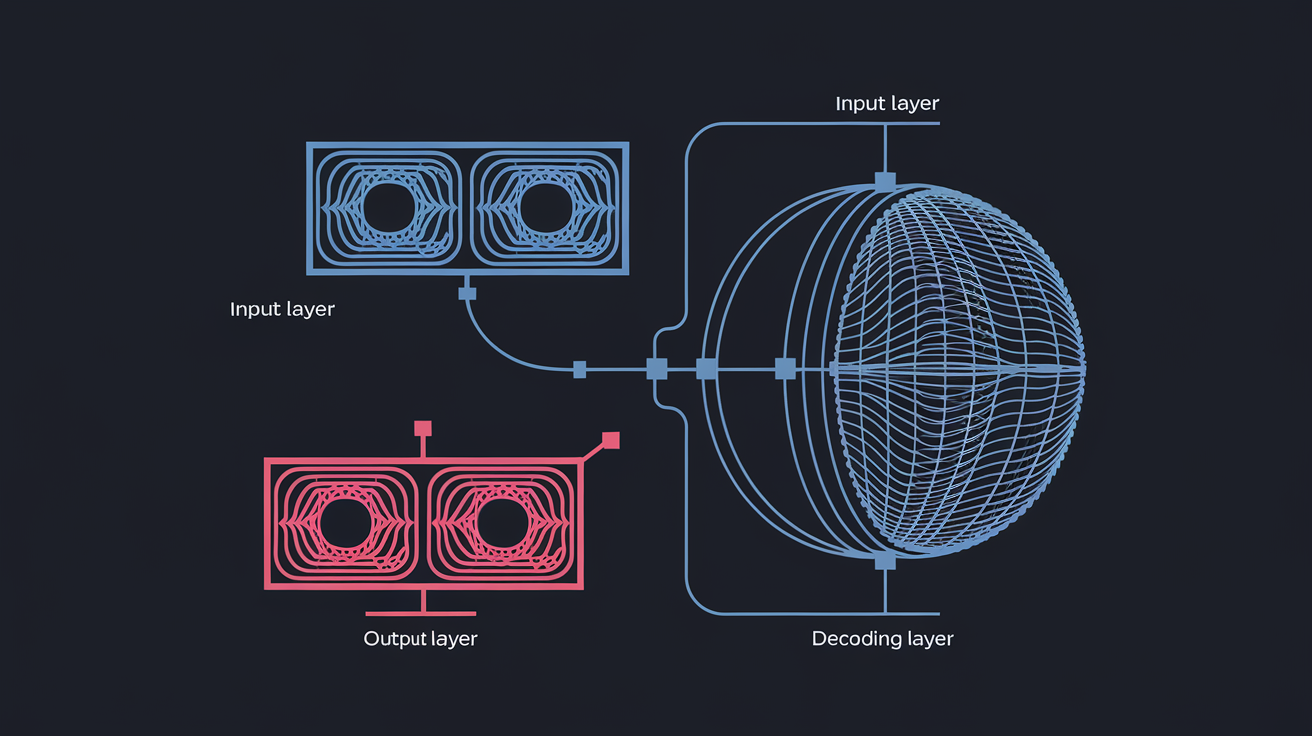

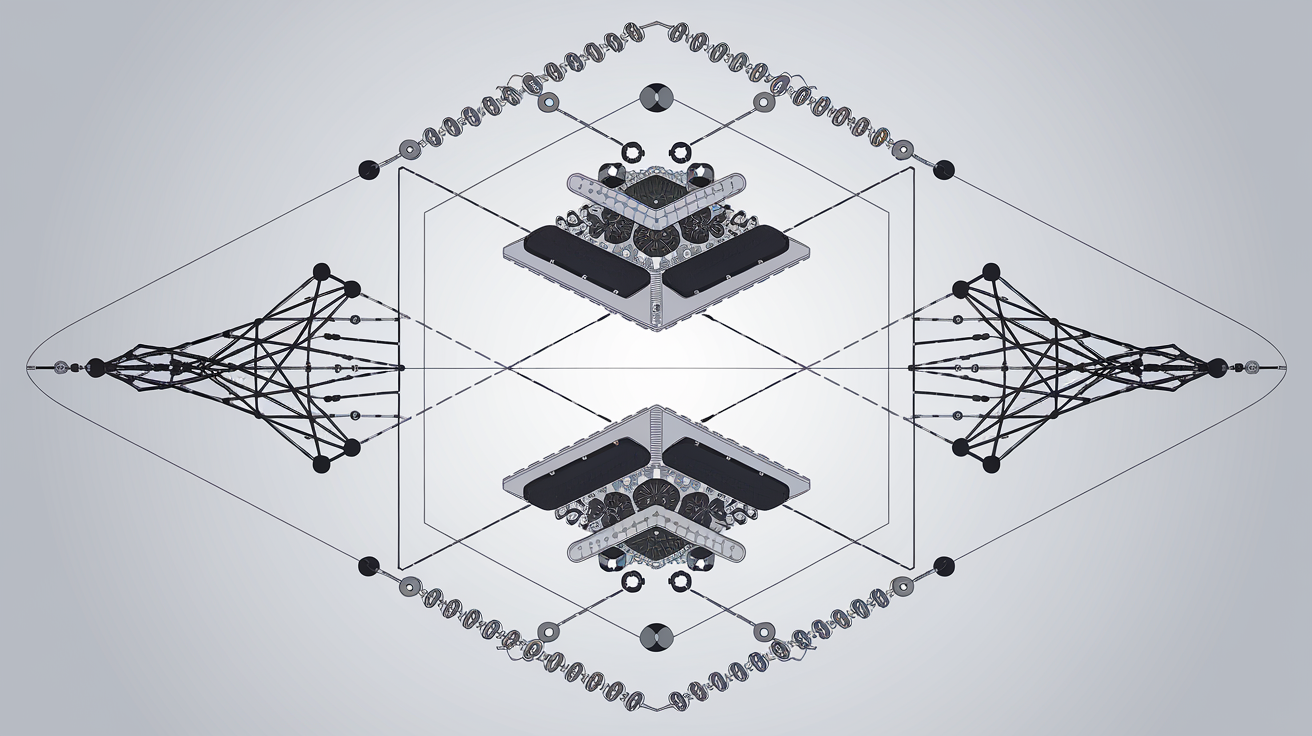

An autoencoder is a specialized type of neural network designed to learn efficient data representations without supervision. At its core, an autoencoder attempts to copy its input to its output through a compressed internal representation, forcing the network to learn the most important features of the data.

An autoencoder is built from three parts:

- An encoder that compresses input data into a lower-dimensional representation

- A bottleneck layer that contains the compressed knowledge representation

- A decoder that reconstructs the original input from the compressed representation
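To make the three parts concrete, here is a minimal NumPy sketch of one forward pass. It assumes a single ReLU layer as the encoder and a single sigmoid layer as the decoder, with random, untrained weights. The function names and the sizes 784 and 32 are illustrative choices rather than a fixed recipe.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, latent_dim = 784, 32  # e.g. flattened 28x28 images -> 32-d code

# Randomly initialized weights; training would adjust these to reduce the loss
W_enc = rng.normal(scale=0.01, size=(input_dim, latent_dim))
b_enc = np.zeros(latent_dim)
W_dec = rng.normal(scale=0.01, size=(latent_dim, input_dim))
b_dec = np.zeros(input_dim)

def encode(x):
    # Encoder: compress the input into the bottleneck (latent) representation
    return np.maximum(0.0, x @ W_enc + b_enc)

def decode(z):
    # Decoder: map the latent code back to input space, squashed into [0, 1]
    return 1.0 / (1.0 + np.exp(-(z @ W_dec + b_dec)))

x = rng.random((5, input_dim))   # a batch of 5 inputs in [0, 1]
z = encode(x)                    # shape (5, 32): the compressed representation
x_hat = decode(z)                # shape (5, 784): the reconstruction
reconstruction_loss = np.mean((x - x_hat) ** 2)  # mean squared error
print(z.shape, x_hat.shape, reconstruction_loss)
```

Training would adjust the weights so that the reconstruction loss shrinks. That pressure is what forces the 32-dimensional code to keep the most informative features of the input.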

Architecture Deep Dive

Let’s break down the architecture of a basic autoencoder:

```python
import tensorflow as tf
from tensorflow.keras import layers, Model

class BasicAutoencoder(Model):
    def __init__(self, latent_dim):
        super(BasicAutoencoder, self).__init__()
        self.latent_dim = latent_dim

        # Encoder: input -> 128 -> 64 -> latent_dim
        self.encoder = tf.keras.Sequential([
            layers.Dense(128, activation='relu'),
            layers.Dense(64, activation='relu'),
            layers.Dense(latent_dim, activation='relu')
        ])

        # Decoder: latent_dim -> 64 -> 128 -> 784
        self.decoder = tf.keras.Sequential([
            layers.Dense(64, activation='relu'),
            layers.Dense(128, activation='relu'),
            layers.Dense(784, activation='sigmoid')  # For MNIST data (28x28 pixels in [0, 1])
        ])

    def call(self, x):
        encoded = self.encoder(x)
        decoded = self.decoder(encoded)
        return decoded
```

Types of Autoencoders

1. Vanilla Autoencoder

The basic form of autoencoder that learns to compress and reconstruct data through a bottleneck layer.

2. Denoising Autoencoder

These autoencoders are trained to reconstruct clean data from corrupted input. During training, they receive a noisy version of each example and learn to output the original, clean version. They’re valuable for image and audio denoising tasks. Here’s a simple implementation:

```python
import tensorflow as tf
from tensorflow.keras import layers, Model

def add_noise(x, noise_factor=0.3):
    # Corrupt the input with Gaussian noise, then clip back to the [0, 1] pixel range
    noise = tf.random.normal(shape=tf.shape(x), mean=0.0, stddev=1.0)
    return tf.clip_by_value(x + noise_factor * noise, 0.0, 1.0)

class DenoisingAutoencoder(Model):
    def __init__(self, latent_dim):
        super(DenoisingAutoencoder, self).__init__()
        self.encoder = tf.keras.Sequential([
            layers.Dense(128, activation='relu'),
            layers.Dense(latent_dim, activation='relu')
        ])
        self.decoder = tf.keras.Sequential([
            layers.Dense(128, activation='relu'),
            layers.Dense(784, activation='sigmoid')
        ])

    def call(self, x, training=False):
        # Add noise only during training (fit with clean images as targets);
        # at inference the model denoises the input it is given
        if training:
            x = add_noise(x)
        # Encode and decode
        encoded = self.encoder(x)
        decoded = self.decoder(encoded)
        return decoded
```

3. Variational Autoencoder (VAE)

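VAEs add a probabilistic twist to autoencoders. Instead of mapping each input to a single point, the encoder outputs the parameters (a mean and a variance) of a probability distribution over the latent space. The decoder then reconstructs the input from a sample drawn from that distribution.

Training adds a KL-divergence term that keeps these distributions close to a standard normal prior. This structured latent space makes it possible to generate new, coherent data by sampling from the prior and decoding. As a result, VAEs are useful in generative modeling tasks like creating new images or text.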
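Two ingredients distinguish a VAE from a plain autoencoder:

- sampling the latent code with the reparameterization trick
- the KL-divergence term for a diagonal Gaussian against a standard normal prior

Both can be sketched in NumPy. This sketch covers those pieces only. It assumes the encoder outputs a mean and a log-variance for each latent dimension. `reparameterize` and `kl_divergence` are hypothetical helper names. A full VAE would compute them inside a framework such as TensorFlow so that gradients can flow.

```python
import numpy as np

rng = np.random.default_rng(0)

def reparameterize(mean, log_var):
    # Reparameterization trick: z = mean + sigma * eps with eps ~ N(0, I),
    # so the sample is a differentiable function of mean and log_var
    eps = rng.standard_normal(mean.shape)
    return mean + np.exp(0.5 * log_var) * eps

def kl_divergence(mean, log_var):
    # KL(N(mean, sigma^2) || N(0, I)) for a diagonal Gaussian, summed over latent dims
    return -0.5 * np.sum(1.0 + log_var - mean**2 - np.exp(log_var), axis=-1)

# Hypothetical encoder outputs for a batch of 4 inputs and a 2-d latent space
mean = np.zeros((4, 2))
log_var = np.zeros((4, 2))
z = reparameterize(mean, log_var)  # samples drawn from N(0, I)
kl = kl_divergence(mean, log_var)  # zero, because the posterior equals the prior
print(z.shape, kl)
```

The training loss of a VAE is then the reconstruction loss plus this KL term, averaged over the batch.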

4. Sparse Autoencoder

These apply a sparsity constraint to the hidden layers during training, so that only a small fraction of neurons is active for any given input. Typical constraints are an L1 penalty on the activations or a KL-divergence penalty toward a small target activation rate. This leads to more specialized, efficient feature learning and is useful for capturing diverse features in scenarios like anomaly detection.
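In Keras, a simple way to impose sparsity is an L1 activity regularizer on the hidden layer. It adds a penalty proportional to the absolute values of the hidden activations to the loss.

This sketch assumes the L1 variant rather than the KL-divergence penalty. `build_sparse_autoencoder` is a hypothetical name, and the strength `1e-5` is an illustrative value to tune for your data.

```python
import tensorflow as tf
from tensorflow.keras import layers

def build_sparse_autoencoder(input_dim=784, hidden_dim=64, l1_strength=1e-5):
    return tf.keras.Sequential([
        tf.keras.Input(shape=(input_dim,)),
        # The activity regularizer penalizes large hidden activations,
        # pushing most of them toward zero for each input
        layers.Dense(hidden_dim, activation='relu',
                     activity_regularizer=tf.keras.regularizers.l1(l1_strength)),
        layers.Dense(input_dim, activation='sigmoid'),
    ])

sparse_ae = build_sparse_autoencoder()
sparse_ae.compile(optimizer='adam', loss='mse')
# Train as usual, with the inputs as their own targets, e.g.:
# sparse_ae.fit(x_train, x_train, epochs=20, batch_size=256)
```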

Applications of Autoencoders

Dimensionality Reduction

- Compress high-dimensional data while preserving important features

- Useful for visualization and data preprocessing
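For dimensionality reduction, the useful part is the trained encoder on its own. This sketch assumes the Keras functional API, so the encoder can be extracted as a separate model that shares the trained layers. `build_autoencoder`, the 2-dimensional code, and the random placeholder data are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

def build_autoencoder(input_dim, code_dim=2):
    # The full autoencoder is trained; the encoder half reuses the same layers
    inputs = tf.keras.Input(shape=(input_dim,))
    h = layers.Dense(64, activation='relu')(inputs)
    code = layers.Dense(code_dim)(h)  # low-dimensional bottleneck
    h = layers.Dense(64, activation='relu')(code)
    outputs = layers.Dense(input_dim, activation='sigmoid')(h)
    return tf.keras.Model(inputs, outputs), tf.keras.Model(inputs, code)

# Placeholder data in [0, 1]; substitute your own feature matrix
data = np.random.default_rng(0).random((256, 20)).astype('float32')

autoencoder, encoder = build_autoencoder(input_dim=20)
autoencoder.compile(optimizer='adam', loss='mse')
autoencoder.fit(data, data, epochs=5, batch_size=32, verbose=0)

# After training, the encoder alone maps each 20-d sample to a 2-d point
codes = encoder.predict(data, verbose=0)
print(codes.shape)  # (256, 2)
```

The 2-D codes can go straight into a scatter plot. For preprocessing, a larger `code_dim` is typically used, and the codes are fed to a downstream model.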

Anomaly Detection

Anomaly detection is another area where autoencoders are useful. An autoencoder trained on normal data learns to reconstruct normal patterns well. Inputs with unusually high reconstruction error can therefore be flagged as anomalies. This application is beneficial in:

- Cybersecurity

- Manufacturing

- Fraud detection in financial transactions

- Healthcare (analyzing electronic health records)

- Predictive maintenance (analyzing sensor data from industrial equipment)
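Detecting anomalies from reconstruction error requires a threshold. One common heuristic, assumed here, is to:

1. Compute reconstruction errors on held-out data known to be normal.
2. Use a high percentile of those errors as the cutoff.

`reconstruction_errors` and `choose_threshold` are hypothetical helpers. The 99th percentile is an arbitrary starting point that trades false alarms against missed anomalies.

```python
import numpy as np

def reconstruction_errors(x, x_reconstructed):
    # Per-sample mean squared error between inputs and their reconstructions
    return np.mean(np.square(x - x_reconstructed), axis=1)

def choose_threshold(normal_errors, percentile=99.0):
    # Cutoff above which roughly (100 - percentile)% of normal samples would fall
    return np.percentile(normal_errors, percentile)

normal_errors = np.linspace(0.0, 0.1, 101)   # stand-in for errors on normal validation data
threshold = choose_threshold(normal_errors)  # about 0.099
new_errors = np.array([0.05, 0.2])
print(new_errors > threshold)  # [False  True]
```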

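```python
import numpy as np

def detect_anomalies(model, data, threshold):
    # Reconstruct the inputs and compute the per-sample mean squared error
    reconstructed = model.predict(data)
    mse = np.mean(np.square(data - reconstructed), axis=1)
    # Flag samples whose reconstruction error exceeds the threshold
    return mse > threshold
```

Image Denoising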

Feature Learning

Data Generation

Training Best Practices

Choose the Right Architecture

- Match the architecture to your data type

- Consider the complexity of your data
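As an example of matching the architecture to the data type, image inputs are often better served by convolutional layers than by Dense layers, because convolutions exploit spatial structure. This sketch assumes 28x28 single-channel (MNIST-sized) images. The filter counts are illustrative, and `build_conv_autoencoder` is a hypothetical name.

```python
import tensorflow as tf
from tensorflow.keras import layers

def build_conv_autoencoder():
    return tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        # Encoder: strided convolutions downsample 28x28 -> 14x14 -> 7x7
        layers.Conv2D(16, 3, strides=2, padding='same', activation='relu'),
        layers.Conv2D(8, 3, strides=2, padding='same', activation='relu'),
        # Decoder: transposed convolutions upsample 7x7 -> 14x14 -> 28x28
        layers.Conv2DTranspose(8, 3, strides=2, padding='same', activation='relu'),
        layers.Conv2DTranspose(16, 3, strides=2, padding='same', activation='relu'),
        # Single-channel output with pixel values in [0, 1]
        layers.Conv2D(1, 3, padding='same', activation='sigmoid'),
    ])

conv_ae = build_conv_autoencoder()
conv_ae.compile(optimizer='adam', loss='mse')
# MNIST images would be reshaped to (-1, 28, 28, 1) instead of (-1, 784)
```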

Optimize Hyperparameters

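```python
import tensorflow as tf

# Example hyperparameter configuration (starting points, not universal values)
learning_rate = 0.001
batch_size = 32
epochs = 50
latent_dim = 64

# Any autoencoder defined above can be used here
model = BasicAutoencoder(latent_dim=latent_dim)
optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)
model.compile(optimizer=optimizer, loss='mse')

# batch_size and epochs are used when training:
# model.fit(x_train, x_train, batch_size=batch_size, epochs=epochs)
```

Monitor Training Progress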

- Use validation sets

- Track reconstruction error

- Visualize reconstructed outputs
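The first two practices map directly onto Keras features:

- a held-out validation split
- the per-epoch `loss` and `val_loss` recorded in the `History` object

An `EarlyStopping` callback can then halt training once validation reconstruction error stops improving. This sketch uses random placeholder data and a tiny model purely for illustration, and `patience=3` is an assumed value.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

# Placeholder data; substitute your own training inputs
x = np.random.default_rng(0).random((512, 64)).astype('float32')

model = tf.keras.Sequential([
    tf.keras.Input(shape=(64,)),
    layers.Dense(16, activation='relu'),
    layers.Dense(64, activation='sigmoid'),
])
model.compile(optimizer='adam', loss='mse')

# Stop when validation reconstruction error stops improving; keep the best weights
early_stop = tf.keras.callbacks.EarlyStopping(
    monitor='val_loss', patience=3, restore_best_weights=True)

history = model.fit(
    x, x,
    validation_split=0.2,  # hold out 20% of the data as a validation set
    epochs=50,
    batch_size=32,
    callbacks=[early_stop],
    verbose=0,
)

# Per-epoch training and validation reconstruction error (MSE)
print(history.history['loss'][-1], history.history['val_loss'][-1])
```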

Implementation Example: MNIST Dataset

Let’s implement a complete example using the MNIST dataset:

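```python
import tensorflow as tf
import matplotlib.pyplot as plt

# Load and preprocess data: flatten 28x28 images to 784-d vectors in [0, 1]
(x_train, _), (x_test, _) = tf.keras.datasets.mnist.load_data()
x_train = x_train.reshape(-1, 784).astype('float32') / 255.0
x_test = x_test.reshape(-1, 784).astype('float32') / 255.0

# Create and train the model (the inputs are also the targets)
autoencoder = BasicAutoencoder(latent_dim=32)
autoencoder.compile(optimizer='adam', loss='mse')
history = autoencoder.fit(
    x_train, x_train,
    epochs=20,
    batch_size=256,
    validation_data=(x_test, x_test)
)

# Encode and decode the test images
encoded_imgs = autoencoder.encoder(x_test).numpy()
decoded_imgs = autoencoder.decoder(encoded_imgs).numpy()

# Visualize results: originals (top row) vs. reconstructions (bottom row)
n = 10
plt.figure(figsize=(20, 4))
for i in range(n):
    for row, imgs in enumerate((x_test, decoded_imgs)):
        ax = plt.subplot(2, n, row * n + i + 1)
        ax.imshow(imgs[i].reshape(28, 28), cmap='gray')
        ax.axis('off')
plt.show()
```

Advanced Techniques: JumpReLU SAE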

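A recent advancement in autoencoder technology is the JumpReLU Sparse Autoencoder (SAE). It replaces the usual ReLU in the encoder with a JumpReLU activation. Each feature has its own learned threshold, and pre-activations below that threshold are set to zero. This approach aims to improve both performance and interpretability by:

- Learning a separate activation threshold for each feature

- Zeroing out weak activations while passing strong ones through unchanged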

- Reducing “dead features”

- Enhancing network interpretability
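The forward pass of the JumpReLU activation is simple to write down. This NumPy sketch shows only the activation itself, with hand-picked thresholds.

In a real JumpReLU SAE, the thresholds are learned during training. That requires special gradient estimators (straight-through estimators), because the step function has zero gradient almost everywhere. That training machinery is not shown here.

```python
import numpy as np

def jump_relu(pre_activations, thresholds):
    # JumpReLU(z) = z * H(z - theta): values at or below the threshold become 0,
    # values above it pass through unchanged (no shrinkage, unlike ReLU(z - theta))
    return pre_activations * (pre_activations > thresholds)

z = np.array([0.2, 0.9, 1.5, -0.4])     # encoder pre-activations for 4 features
theta = np.array([0.5, 0.5, 1.0, 0.1])  # one threshold per feature (learned in a real SAE)
activations = jump_relu(z, theta)
print(activations)
```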

Frequently Asked Questions (FAQs) about Autoencoders in Deep Learning

What is an autoencoder?

- An autoencoder is a specialized type of neural network designed to learn efficient data representations without supervision.

How do autoencoders work?

- Autoencoders consist of two main components: an encoder that compresses the input data into a lower-dimensional representation and a decoder that reconstructs the original data from this compressed form.

What are the different types of autoencoders?

- Common types include vanilla autoencoders, denoising autoencoders, sparse autoencoders, and variational autoencoders.

What are the applications of autoencoders?

- Applications include image denoising, anomaly detection, data compression, and dimensionality reduction.

Are autoencoders supervised or unsupervised?

- Autoencoders are primarily unsupervised learning models, as they do not require labeled data for training.

What is latent space in an autoencoder?

- Latent space refers to the compressed representation of the input data that captures its essential features.

How do you train an autoencoder?

- Training involves minimizing the reconstruction loss between the original input and the reconstructed output, using backpropagation and a gradient-based optimizer such as Adam.

What is reconstruction loss?

- Reconstruction loss measures the difference between the original input and the output generated by the decoder, guiding the training process.

Can autoencoders be used for anomaly detection?

- Yes, autoencoders can identify anomalies by detecting deviations in reconstruction error from normal patterns in the data.

What are some limitations of using autoencoders?

- Limitations include potential overfitting, difficulty in interpreting latent representations, and sensitivity to noise in training data.

Conclusion

Autoencoders represent a powerful tool in the deep learning toolkit, offering versatile solutions for various data processing challenges. From basic data compression to advanced generative modeling, their applications continue to expand as new variants and techniques emerge.

Remember that the key to success with autoencoders lies in understanding your specific use case and choosing the appropriate architecture and hyperparameters accordingly.

If you run into any difficulties, please make good use of the comment section; I will personally be there to help when you are stuck. You can also use the FAQ section above to learn more.

We believe that practicing machine learning skills on real-world examples is the key to mastery and to solving problems that affect our society, so we aim to teach through practical examples. If you like this approach, please leave a comment below with your views or request an article. As usual, don’t forget to upvote and share this article.