Basics of Neural Networks

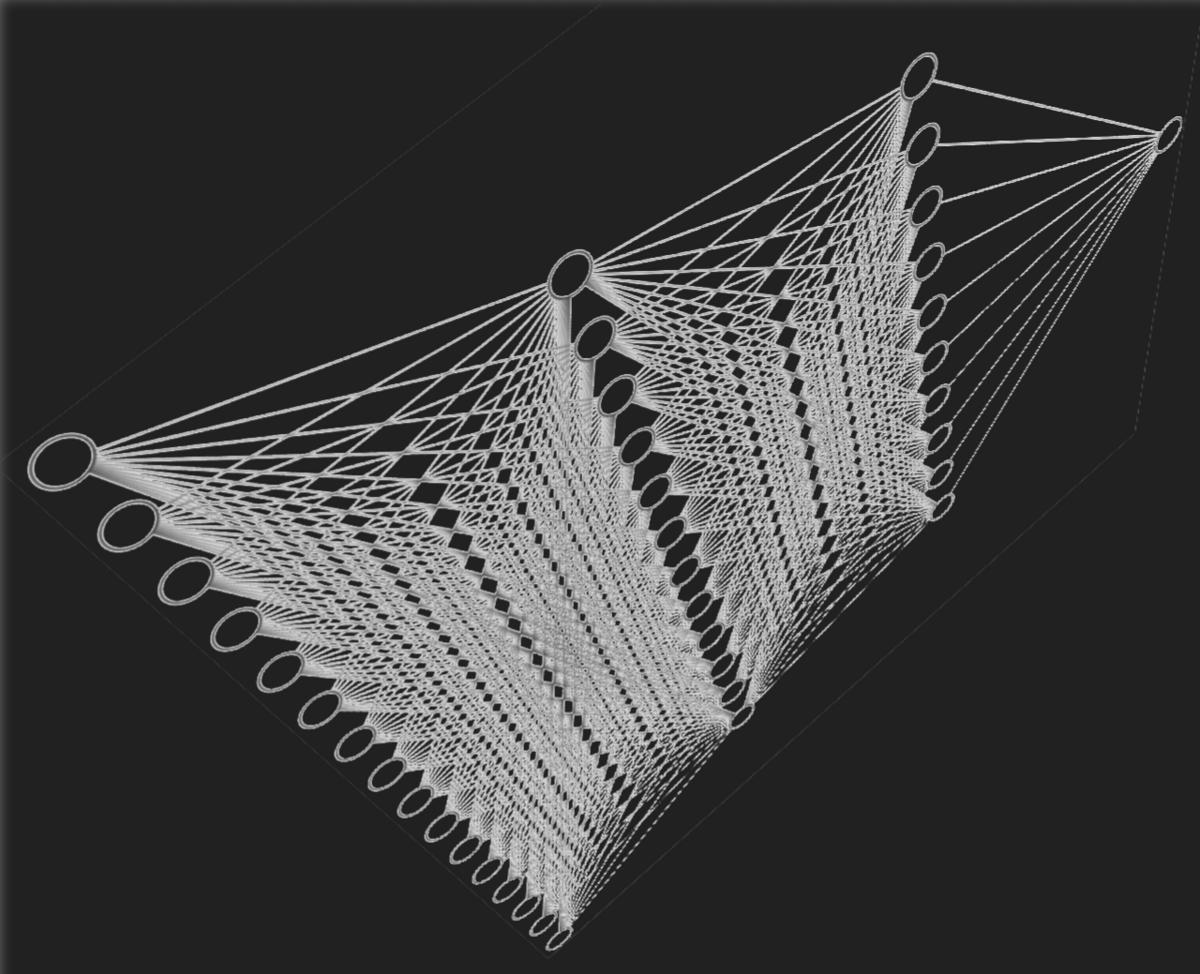

Artificial neural networks (ANNs) are loosely modeled on the way the human brain works. Just as the brain uses neurons to process and transmit information, ANNs use artificial neurons, or nodes. These nodes are grouped into layers: an input layer, one or more hidden layers, and an output layer.

The input layer receives the raw data, which could be anything from image pixels to audio samples. Its main job is to pass these values to the hidden layers, where most of the computation occurs. Each node in a hidden layer multiplies the inputs it receives by the weights on its connections and sums the results. The node then applies an activation function, which decides how much of that signal passes on to the next layer, a critical step in refining raw data into something usable.
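As a rough illustration of what a single hidden node does, the sketch below computes a weighted sum of its inputs and passes it through an activation function. The sigmoid activation, the bias term, and all numeric values are assumptions chosen for the example, not details fixed by the description above.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

activation = neuron_output(inputs, weights, bias)
print(activation)
```

Changing a weight changes how strongly the matching input influences the node's output, which is exactly what training adjusts.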

The final piece of this neural puzzle is the output layer, which produces the result. After the data has been refined through the hidden layers, the output layer translates it into a final decision, whether that is identifying an object in a photo, choosing the next move in a game of chess, or diagnosing a medical condition from patient data.

These networks are trained on large amounts of data. Training iteratively adjusts the connection weights through processes like backpropagation, in which the network learns from its errors to make better predictions next time.

Through such architectures and mechanisms, artificial neural networks can go beyond the fixed, hand-written rules of traditional programs, enabling adaptable, nuanced decisions akin to human judgement but at electronic speeds.

Types of Neural Networks

Feed-forward neural networks are perhaps the simplest type of ANN. Data flows in one direction, from input to output, with no looping back. This means they can efficiently model relationships where the current input alone determines the output, without any memory of previous inputs. Common applications include voice recognition and computer vision tasks where the immediate input data matters most.
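The one-way flow can be sketched in a few lines of plain Python. The network shape, the weights, and the choice of ReLU for the hidden layer are all hypothetical; the point is only that each layer's output becomes the next layer's input and nothing is carried over between calls.

```python
def relu(z):
    """Pass positive values through unchanged; output 0 otherwise."""
    return max(0.0, z)

def identity(z):
    """Leave the value as-is (used here for the output layer)."""
    return z

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one node."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def feed_forward(inputs, layers):
    """Pass data through each layer in order; nothing flows backwards."""
    values = inputs
    for weights, biases, activation in layers:
        values = dense_layer(values, weights, biases, activation)
    return values

# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),  # hidden layer
    ([[1.0, 2.0]], [0.5], identity),                # output layer
]

prediction = feed_forward([2.0, 1.0], layers)
print(prediction)
```

Calling `feed_forward` twice with the same input always gives the same result, because the network keeps no state between calls.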

Recurrent neural networks (RNNs) have loops in their architecture, allowing them to maintain a 'memory' of previous inputs. This looping structure can keep 'state' across inputs, making RNNs ideal for tasks such as:

- Sequence prediction

- Natural language processing

- Speech recognition

They excel at tasks where context from earlier data influences current decisions.
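The 'memory' described above can be illustrated with a single recurrent unit whose new state depends on both the current input and its previous state. This is a minimal sketch: the `tanh` activation, the weight values, and the function names are assumptions made for the example.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the new input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit, carrying the state forward."""
    h = 0.0  # initial state: no memory yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Two sequences that end with the same input but start differently.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a[-1], states_b[-1])
```

Although both sequences end with the same input, the final states differ, because the first sequence's earlier input is still echoing through the carried state.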

In image processing and certain video applications, convolutional neural networks (CNNs) shine. They analyse visual imagery through a hierarchy of layers: convolutional layers that scan small regions of an image to detect local features, pooling layers that reduce the size of the resulting feature maps, and fully connected layers that make predictions or classifications. CNNs are commonly used in applications like facial recognition, medical image analysis, and any task requiring object recognition within scenes.
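To make the convolution and pooling steps concrete, here is a small pure-Python sketch. The 5x5 image, the 2x2 edge-detecting kernel, and the use of max pooling are illustrative assumptions; real CNNs learn their kernels during training and use optimized libraries.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(k) for dj in range(k))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hypothetical kernel that responds where brightness rises left to right.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = convolve2d(image, kernel)  # 4x4, non-zero only at the edge
pooled = max_pool2d(feature_map)         # 2x2 summary of the feature map

print(feature_map)
print(pooled)
```

The feature map is non-zero only where the vertical edge sits, and pooling keeps that signal while shrinking the map from 4x4 to 2x2.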

Each type of network suits particular kinds of problems because of its architecture and processing capabilities. Which network to use depends largely on the problem at hand, since each offers a distinct way of capturing relationships within data.

Learning Processes in ANNs

Artificial neural networks improve over time through learning procedures. At the core of learning in ANNs is training: the network is exposed to large datasets while its weights and biases are adjusted iteratively, a process governed by algorithms like backpropagation and gradient descent.

In a typical ANN, learning starts with training data that includes the expected output (supervised learning). The network makes a prediction, and a loss function measures how far that prediction deviates from the expected output. Backpropagation then calculates the gradient of the loss with respect to each weight, that is, how much the loss would change if that weight changed slightly. It does so by passing the error backwards through the network, layer by layer, so that each weight can be adjusted in the direction that reduces the error in future predictions.
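For a single neuron, backpropagation reduces to applying the chain rule by hand. The sketch below assumes a sigmoid activation and a squared-error loss (both choices made for the example, as are the numeric values) and checks the result against a numerical estimate of the gradient.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_gradients(x, target, w, b):
    """Forward pass, then chain rule backwards from the loss to w and b."""
    # Forward pass
    z = w * x + b
    y = sigmoid(z)
    loss = 0.5 * (y - target) ** 2

    # Backward pass: multiply local derivatives along the path
    dloss_dy = y - target       # d(loss)/dy
    dy_dz = y * (1.0 - y)       # derivative of the sigmoid
    dloss_dz = dloss_dy * dy_dz
    grad_w = dloss_dz * x       # dz/dw = x
    grad_b = dloss_dz           # dz/db = 1
    return loss, grad_w, grad_b

# Hypothetical training example and starting parameters.
x, target, w, b = 1.5, 1.0, 0.2, -0.1
loss, grad_w, grad_b = loss_and_gradients(x, target, w, b)

# Sanity check: compare with a finite-difference estimate of d(loss)/dw.
eps = 1e-6
loss_plus, _, _ = loss_and_gradients(x, target, w + eps, b)
loss_minus, _, _ = loss_and_gradients(x, target, w - eps, b)
numeric_grad_w = (loss_plus - loss_minus) / (2 * eps)

print(grad_w, numeric_grad_w)
```

In a multi-layer network the same idea applies, with each layer passing its gradient back to the layer before it.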

Gradient descent, a first-order iterative optimization algorithm, plays a pivotal role in the weight-adjustment phase. During training, it uses the gradient to determine the direction and size of each weight correction, so that the next result is closer to the expected output. These incremental steps toward minimum error make ANN predictions more accurate over time.
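A minimal sketch of these incremental steps is fitting a straight line with batch gradient descent. The model (`y = w * x + b`), the mean-squared-error loss, the learning rate, and the toy data are all assumptions chosen to keep the example small.

```python
def train_linear(data, learning_rate=0.1, epochs=500):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient, scaled by the learning rate
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = train_linear(data)
print(w, b)
```

The learning rate controls the step size: too small and training is slow, too large and the updates can overshoot the minimum and diverge.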

Activation functions within the nodes determine the output that feeds into the next layer or as a final prediction. These functions add a transformative non-linear aspect to the inputs of the neuron. Well-known activation functions include:

- Sigmoid: squashes input values into a range between 0 and 1

- ReLU (Rectified Linear Unit): passes positive values through unchanged and outputs 0 for negative values
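Both functions are short enough to write out directly. This is a plain-Python sketch for scalar inputs; the sample values are arbitrary.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Return z for positive inputs and 0 otherwise."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), relu(z))
```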

Together, data exposure, backpropagation, gradient descent, and activation functions make up a versatile framework that gives ANNs their notable 'intelligence'. This ability to keep learning mirrors an essential attribute of human learning and lets networks take on tasks once deemed insurmountable for machines.

Real-World Applications of Neural Networks

Neural networks have paved the way for transformative changes across a spectrum of industries, harnessing their potential to interpret complex data in ways that mimic human cognition.

In healthcare, artificial neural networks are instrumental in enhancing diagnostic accuracy and patient care. Image recognition technology powered by convolutional neural networks is used extensively in analyzing medical imaging like MRIs and CT scans. These systems can identify minute anomalies that might be overlooked by human eyes, aiding in early diagnosis of conditions such as cancer or neurological disorders [1]. Machine learning models are also being developed to predict patient outcomes, personalize treatment plans, and aid in drug discovery.

The financial industry also reaps significant benefits from the predictive capabilities of neural networks. These systems are employed to detect patterns and anomalies that indicate fraud, enhancing the security of transactions. Neural networks are used for high-frequency trading, where they analyze vast arrays of market data to make automated trading decisions within milliseconds. Predictive analytics in finance also help institutions assess credit risk, personalize banking services, and optimize investment portfolios [2].

Autonomous driving is another field where neural networks are making a substantial impact. Self-driving cars typically use convolutional neural networks to interpret camera and other sensor data, often alongside recurrent or other sequence models that track how a scene changes over time, in order to navigate safely through traffic. These systems build a picture of the vehicle's environment and can make predictive decisions, adjust to new driving conditions, and learn from new scenarios encountered on the road.

Neural networks also improve user interfaces, for example through speech-to-text systems. Products like virtual assistants are becoming progressively better at understanding and processing natural language thanks to advancements in neural network technologies. These systems improve user experience by offering more accurate responses and understanding user requests in a more human-like manner.

In all these applications, the underlying strength of neural networks is their ability to process and analyze data at a scale and speed that vastly exceeds human capabilities, yet with a growing approximation to human-like discernment.

Future Trends and Advancements in Neural Networks

As we look into the future of artificial intelligence, neural networks are poised for groundbreaking advancements that will further integrate them into daily life and expand their capabilities into new domains. These transformations are anticipated in areas of hardware enhancements, algorithmic refinements, and expansive application spectrums.

One compelling evolution lies in hardware innovations, specifically the development of specialized processors such as neuromorphic chips. These chips are designed to mimic the neural architectures of the human brain, processing information more efficiently than traditional hardware. This promises faster processing times, reduced energy consumption, and more sophisticated cognition capabilities akin to natural neural processing.

Algorithmic improvements also promise to expand the capabilities of neural networks, giving them a better foundation for learning complex patterns with greater accuracy and less human intervention. Advances in unsupervised learning algorithms could dramatically enhance machines' ability to process and understand unlabelled data, opening up new possibilities for AI to generate insights without explicit programming. Improvements in transfer learning, which allows models developed for one task to be reused for another related task, are poised to reduce the resources required for training data-hungry models.

Beyond traditional domains, the scope of applications for neural networks is set to broaden dramatically. With growing integration in IoT devices and smart city projects, neural networks could soon manage everything from traffic systems and energy networks to public safety and environmental monitoring. In the workplace, neural networks might soon be found facilitating seamless human-machine collaboration—augmenting human roles rather than replacing them.

Developments in quantum neural networks, a fusion of quantum computing and neural networks, could reshape computational paradigms. These networks would leverage the principles of quantum mechanics to process information at speeds and volumes beyond the reach of current AI technologies. This could profoundly accelerate the solving of complex optimization problems in fields like logistics, finance, and materials science [3].

Crucial to all these developments will be the ethical implementation of such technology. As neural networks become more integral to critical applications, ensuring they are developed and deployed in ways that preserve privacy, security, and fairness will become ever more vital.

The future of neural networks promises technological evolution and a deeper integration into the fabric of society. With each breakthrough in hardware and improvement in algorithms, paired with expanding application areas, neural networks are set to transition from novel computational tools to foundational elements driving the next stage of intelligent automation and human-machine collaboration.

This article was written by Writio.

1. Shen D, Wu G, Suk HI. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19:221-248.

2. Ozbayoglu AM, Gudelek MU, Sezer OB. Deep learning for financial applications: A survey. Applied Soft Computing. 2020;93:106384.

3. Schuld M, Sinayskiy I, Petruccione F. An introduction to quantum machine learning. Contemporary Physics. 2015;56(2):172-185.